This afternoon Microsoft announced Brainwave, an FPGA-based system for ultra-low-latency deep learning in the cloud. Early benchmarking indicates that, running on Intel Stratix 10 FPGAs, Brainwave can sustain 39.5 teraflops on a large gated recurrent unit without any batching, meaning each request is processed on its own rather than grouped with others.
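
For a rough sense of what that number means, here is a back-of-the-envelope sketch in Python. It assumes a textbook GRU with three gates and counts only the matrix-vector products at batch size 1. The layer size of 2,048 is a hypothetical choice for illustration, since Microsoft's announcement as reported here does not give the dimensions of its benchmark model, and the function names are made up for this example.

```python
def gru_step_flops(hidden_size: int, input_size: int) -> int:
    """Approximate FLOPs for one GRU time step at batch size 1.

    Counts only the six matrix-vector products (three gates, each with an
    input matrix and a recurrent matrix). One multiply-add counts as 2 FLOPs.
    Biases and element-wise gate math are ignored as lower-order terms.
    """
    multiply_adds = 3 * hidden_size * (input_size + hidden_size)
    return 2 * multiply_adds


def step_time_seconds(flops: int, sustained_tflops: float) -> float:
    """Time for one step if the hardware sustains the given teraflops."""
    return flops / (sustained_tflops * 1e12)


# Hypothetical layer size (assumption, not from the announcement).
HIDDEN = 2048

flops = gru_step_flops(HIDDEN, HIDDEN)
micros = step_time_seconds(flops, 39.5) * 1e6
print(f"{flops:,} FLOPs per step, about {micros:.2f} microseconds at 39.5 teraflops")
```

Under those assumptions a single time step works out to roughly a microsecond of compute, which is the kind of figure that makes serving one request at a time plausible. Real latency would also depend on sequence length, memory bandwidth and network overhead, none of which this sketch models.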

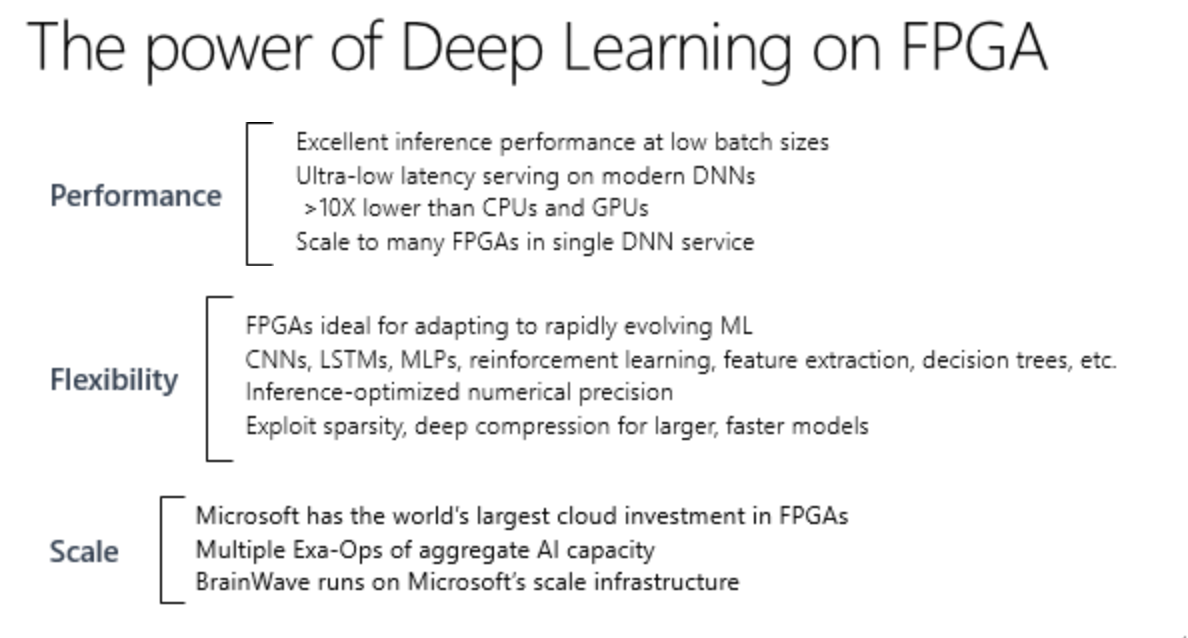

Microsoft has been pouring resources into FPGAs for a while now, deploying large clusters of the field-programmable gate arrays in its data centers. Algorithms are written directly into an FPGA's hardware logic, which makes them efficient to run, and the chips can be reprogrammed as those algorithms change. That combination suits machine learning, which leans heavily on parallel computation.

Building on this work, Microsoft has synthesized DPUs, or DNN processing units, onto its FPGAs. The hope is that by focusing on deep neural nets, Microsoft can adapt its infrastructure faster to keep up with research and offer near real-time processing.

For a technology as retro as FPGAs, the nifty chips have been enjoying something of a renaissance in recent years. Mipsology, an FPGA-obsessed startup, has been working closely with Amazon to make the technology accessible over Amazon Web Services and other platforms.

If the last few decades were about creating generalized CPUs to handle a broad array of processes, the last few months have been about building custom chips that excel at specific tasks. A solid chunk of this effort has gone toward dedicated chips for machine learning.

The most notable effort by far has been Google’s Tensor Processing Unit. The chips are optimized around TensorFlow, and early benchmarks have been very promising. That said, most major tech companies have side projects around the future of computing: quantum chips, FPGAs and so on. And when there’s interest from tech giants, startups are willing to make a game of it, with Rigetti, Mythic and Wave to name a few.

There is no word yet on when Brainwave will be available to Microsoft Azure customers. As of now, the system works with Google’s popular TensorFlow and Microsoft’s own CNTK. The company is setting ambitious expectations for deep learning performance using the technology, so there will undoubtedly be plenty more benchmarking news in the coming months.