Apple and the future of photography in Depth: part 2, iPhone X

When iPhone launched ten years ago, it took basic photos and no videos. Apple has since rapidly advanced its capabilities in mobile photography to the point where iPhone is now globally famous for its billboard-sized artwork and has been used to shoot feature-length films for cinematic release. iOS 11 now achieves a new level of image capture with Depth. Here's a look at the future of photos, focusing on new features in the upcoming iPhone X.

The previous segment of this series looking at depth-based photography focused on Portrait features in iPhone 7 Plus and Portrait Lighting features new to iPhone 8 Plus (as well as the upcoming iPhone X). This segment will look at an expansion of TrueDepth sensing specific to iPhone X, as well as new camera vision and machine learning features common across iOS 11 devices.

iPhone X: selfie depth using TrueDepth sensors

At WWDC17 this summer, Apple presented iOS 11's new Depth API for using the differential depth data captured by the dual rear cameras of iPhone 7 Plus. It also hinted at an alternative technology that could enable even more accurate depth maps than the iPhone 7 Plus dual cameras captured.

By then, we were well aware of the work the company was doing with 3D structure sensors since its $345 million acquisition of PrimeSense back in 2013, making it obvious that Apple had created its Depth API to handle a range of sophisticated imaging capabilities that took advantage of the camera not just as an aperture used to electronically expose digital film, but as a machine eye.

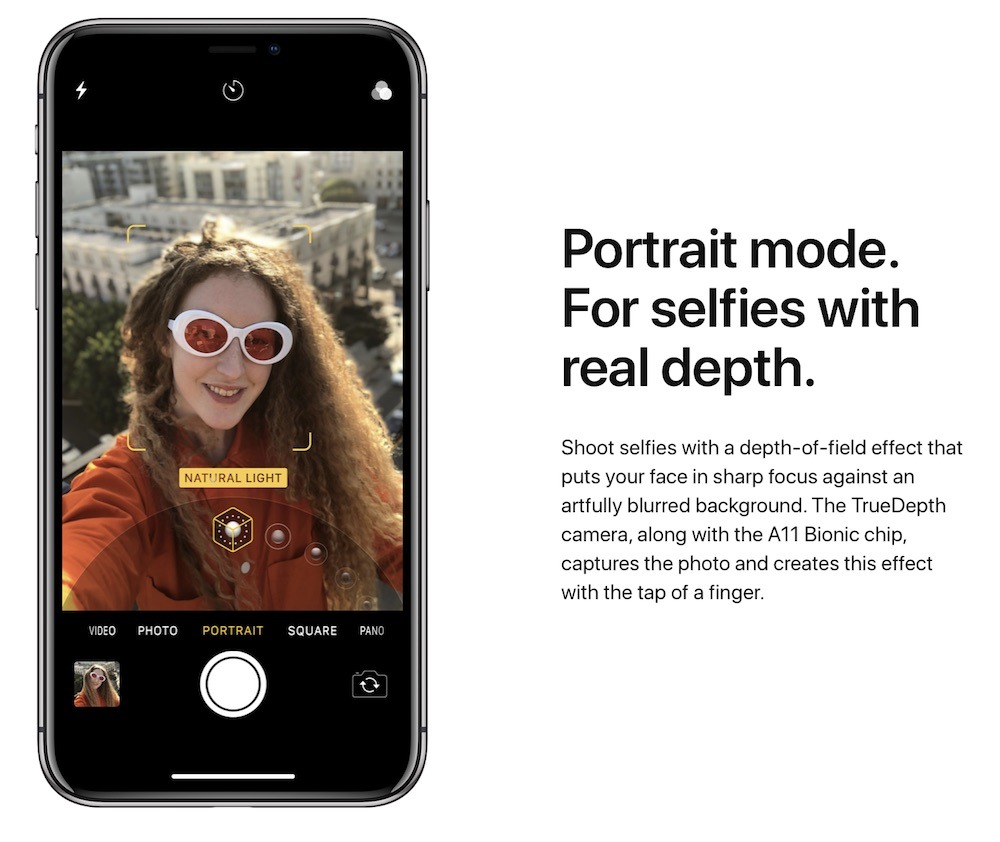

However, one of the first applications of the new TrueDepth sensor is again Portrait Lighting, this time for use in front-facing "selfie" shots. This effectively offers the millions of iPhone 7 Plus and 8 Plus users a recognizable extension of a feature they already know from the rear dual lens camera.

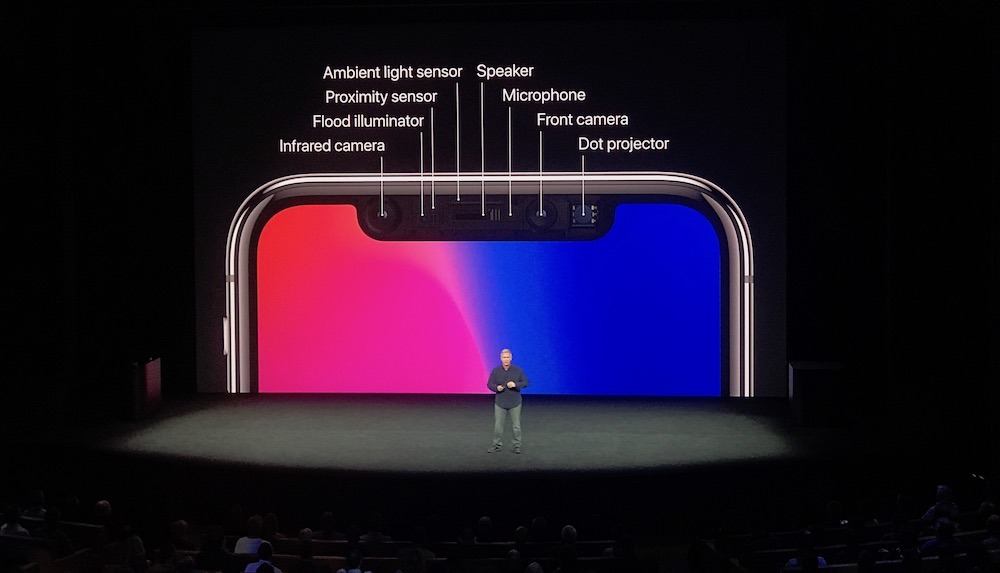

While the rear dual camera calculates a depth map using differential math on various points of two images taken by the two cameras, the TrueDepth sensor array uses a pattern of reflected invisible light, giving it a more detailed depth map to compare to the color image taken by the camera.
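The "differential math" behind a dual camera depth map can be sketched with the textbook stereo relationship, where a point's distance equals focal length times camera spacing divided by how far the point shifts between the two images. This is a minimal illustration that assumes two identical, perfectly aligned cameras. It is not Apple's pipeline, which also has to reconcile two different lenses. The function names and every number here are hypothetical.

```python
# Idealized stereo depth: two identical, rectified pinhole cameras side by side.
# All numbers below are hypothetical, not iPhone specifications.

def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Distance in meters to a point seen by both cameras.

    disparity_px is how far the point shifts horizontally between the two
    images. Returns None when there is no positive shift to measure.
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities, focal_length_px, baseline_m):
    """Convert a 2D grid of per-point disparities into a grid of depths."""
    return [
        [depth_from_disparity(focal_length_px, baseline_m, d) for d in row]
        for row in disparities
    ]


if __name__ == "__main__":
    # Nearby points shift more between views than distant ones.
    grid = [[40.0, 20.0], [10.0, 0.0]]
    for row in depth_map(grid, focal_length_px=2000.0, baseline_m=0.01):
        print(row)
```

Because depth is inversely proportional to disparity, small matching errors on distant points turn into large depth errors. That is one reason a sensor that measures a projected light pattern directly can yield a more detailed map.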

iPhone X: Animoji using TrueDepth

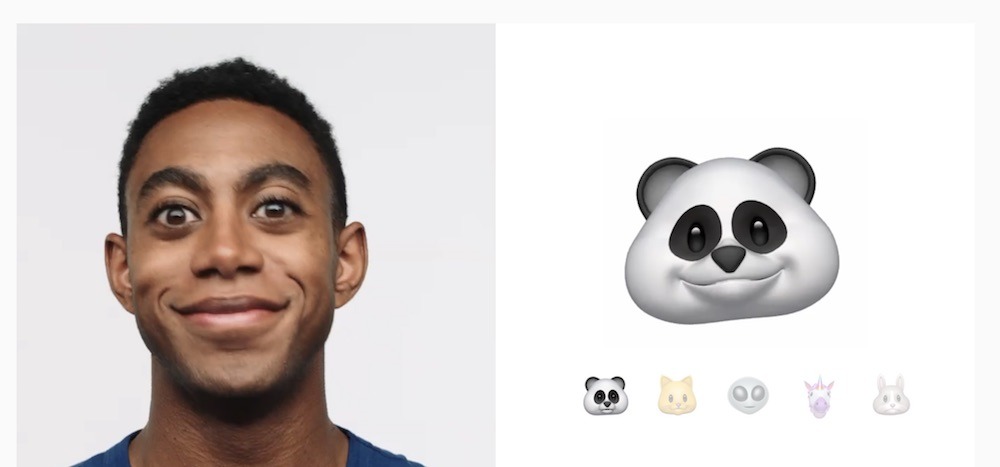

In addition to being able to handle depth data similar to the dual lens 7/8 Plus models to perform Portrait and Lighting features on still images, the TrueDepth sensors on iPhone X can also track the more detailed depth information over time to create an animated representation of the user's face, creating an avatar that mimics their movements. Apple delivered this in the form of new "animoji," patterned after its existing, familiar emoji, now brought to life as animated 3D models.

Apple could have created DIY cartoonish animated avatars, patterned after Snapchat's acquired Bitmoji or Nintendo's Wii Mii avatars. Instead it took a selection of the iconic emojis it created internally and turned them into 3D masks that anyone can "wear" to communicate visually in iMessage.

In iOS 11, Apple is also making the underlying TrueDepth technology available to third parties to use to create their own custom effects, as demonstrated with Snapchat filters that apply detailed skin-tight effects to a user in real-time. App and game developers can similarly build their own avatars synced with the user's facial expressions.

It will be a little harder for Android licensees to copy Apple's Animoji, because the emoji created by Google, Samsung and others are generally terrible. Google has dumped its oddball emojis in favor of iOS-like designs for Android 8 "Oreo," but it will take a long time for the new software to trickle out.

Additionally, there is no centralized focus in Android for deploying the kind of features Apple can deploy rapidly. Hardware licensees are all trying out their own hardware and software ideas, and even Google's "do it like this" Pixel project lags far behind Apple.

Google's latest Pixel 2 is currently only attempting to copy Portrait mode from last year and Live Photos from two years ago. It doesn't even know about Portrait Lighting yet, let alone Animoji, and lacks a real depth camera to support such capabilities.

iPhone X: Face ID using TrueDepth

In parallel, Apple is also doing its own facial profile of the enrolled user to support Face ID, the modern new alternative to Touch ID. Critics and skeptics started complaining about Face ID before having even tried it, but the new system provides an even greater set of unique biometric data to evaluate than the existing, small Touch ID sensor— which only looks at part of your fingerprint when you touch it.

Face ID is not really "using your face as your password." Your security password remains the passcode you select and change as needed. Remote attackers have no way to attempt to present a 3D picture of your face or a fingerprint scan to log in as you remotely. In fact, our initial attempts to unlock iPhone X by holding it up to an enrolled presenter's face failed to unlock the device, even when standing next to the person.

"There's a distance component. It's hard to do it when somebody else is holding it," the presenter noted before taking the phone and promptly unlocking it at a natural arm's length. In the video clip below, we had to present Face ID authentication in slo-mo because it was so fast once it was in the hand of the enrolled user.

Face ID (like Touch ID before it) simply provides a more secure way to conveniently skip over entering your passcode by proving to the device who you are in a way that's difficult to fake, or to coerce from another person, as we discovered in Apple's hands-on area in September. Apple has also made Face ID easy to disable, so anyone trying to exploit the biometric system on a stolen phone would quickly run out of time and chances to do so.

Biometric ID can also be turned off entirely on iOS. However, it's important to recognize that Apple's biometric ID system has not, since iPhone 5s, produced the increase in vulnerability or stolen data that some pundits once predicted. Instead, the result has been a massive decrease in stolen phones and widespread, effective protection of users' personal data, to the point where national law enforcement has raised concerns about how effectively iOS encryption protects the content saved on a phone.

Despite all the sweaty handwringing, biometric ID on iOS has been implemented correctly due to the thoughtful consideration invested in its technical design and engineering.

The same can't be said of Android, where leading licensees including Samsung and HTC first screwed up their own implementations of fingerprint authentication, then rushed out gimmicky face-photo recognition schemes that were ineffective and easy to exploit.

Using its unique, custom designed and calibrated TrueDepth camera system on iPhone X, Apple has far more data to evaluate to reject fakes and verify the user for convenient yet secure biometric identification. The cost of the sensor system will prevent most Android licensees from being able to adopt similar technology. Google recently noted that it expects one third of the Android phones sold this year to cost less than $100.

In dramatic contrast, Apple is expected to sell its $1,000-and-up iPhone X to more than a third— and perhaps as much as half— of its new buyers this year. There are a number of reasons for users to want to upgrade to Apple's newest iPhone, but the fact that all of them will also get the TrueDepth sensor means that it will immediately get a very large installed base in the tens of millions, enough to attract the attention of third party developers.

In addition to obtaining functional 3D sensing hardware and deploying it in their new phones, Android licensees also face another issue Apple has already solved: building an ecosystem and demonstrating real-world applications for the technology.

A report stating that Chinese vendors were struggling to source 3D sensors built by Qualcomm/Himax also noted that "it would take a longer time for smartphone vendors to establish the relevant application ecosystem, involving firmware, software and apps, needed to support the performance of 3D sensing modules than to support the function of fingerprint recognition or touch control," concluding that "this will constitute the largest barrier to the incorporation of 3D sensors into smartphones."

Android's shallow depth of platform

Other attempts to sell third party 3D camera sensors for mobile devices have made little impact on the market. Google has worked with PrimeSense depth-based imaging for years under its Project Tango, but hasn't been able to convince its price-sensitive Android licensees to adopt the necessary technology in a way that matters.

Once Apple demonstrated ARKit, Google renamed portions of its Tango platform as "ARCore" to piggyback on Apple's publicity, but again there is no installed base of supported Androids capable of functionally using AR, and even fewer have the capacity to work with real depth data, whether from dual cameras or any type of depth sensor.

Also, the very decentralized nature of Android not only introduces problems with fragmentation and device calibration, but also trends toward building super cheap devices, not powerful hardware with specialized cameras and high performance, local computational power that is required to handle sophisticated AR and depth-based camera imaging.

Rather than developing powerful hardware, Google has for years promoted the idea that Android, Chrome and Pixel products could be cheap devices powered by its sophisticated cloud services. That vision is oriented around the idea that Google wants users' data, not that it's the best way to deploy advanced technology to individuals.

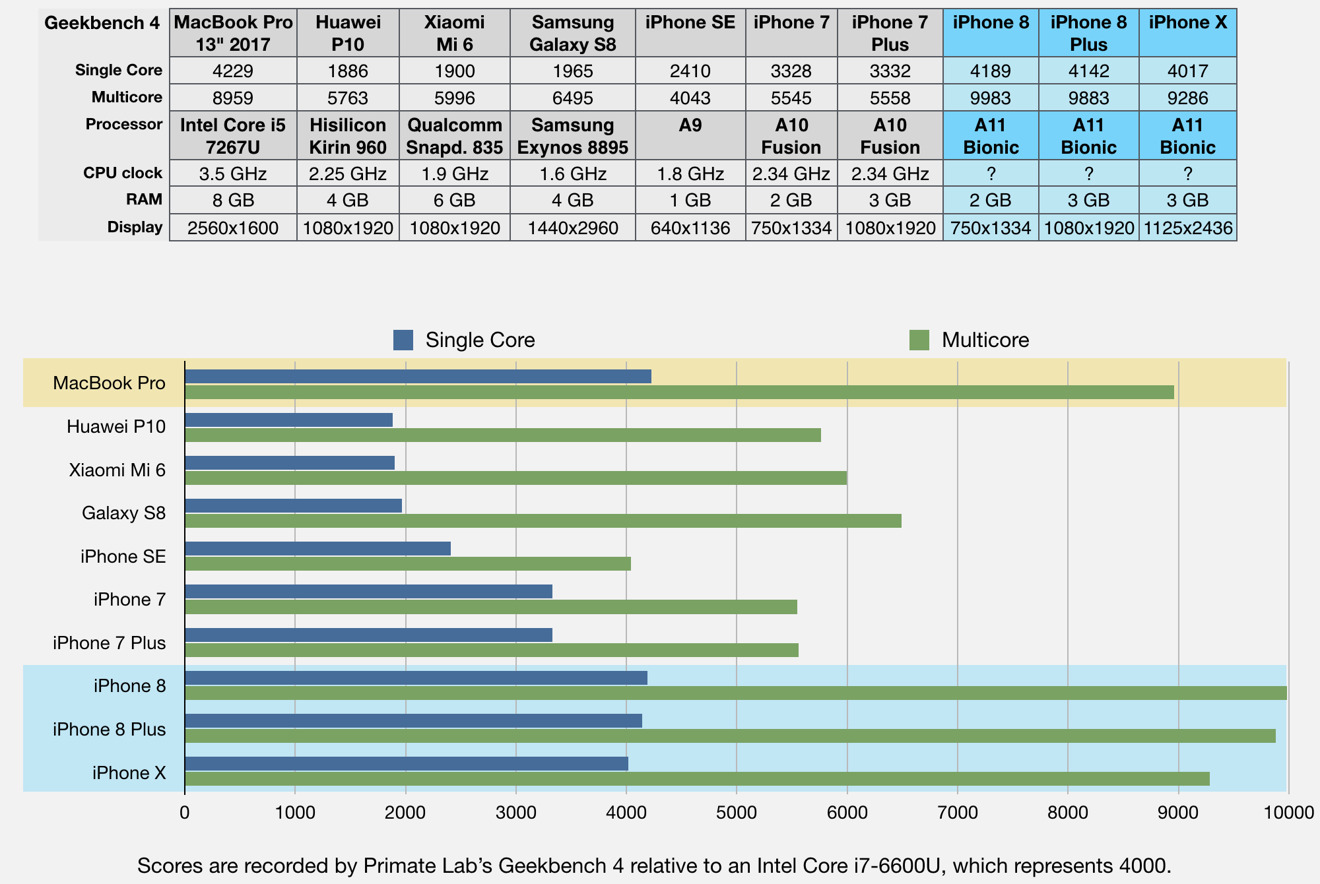

Apple has increasingly widened its lead in on-device sophistication and processing power, meaning that iOS devices can do more without needing a fast network connection to the cloud. Critical features like biometric authentication can be handled locally with greater security, and other processing of user data and images can be done without any fear of interception.

For years, even premium-priced Android devices remained too slow to perform Full Disk Encryption because Google focused on a slow, software-based implementation aimed at making Android work across lots of different devices rather than designing hardware accelerated encryption that would require better hardware on the same level as Apple's iPhones.

That was bad for users, but Google didn't care because it wasn't making money selling quality hardware to users; instead it was focused on just building out a broad advertising platform that would not benefit from users having effective encryption of their personal content.

Further, Android's implementation of Full Disk Encryption delegated security oversight to Qualcomm, which dropped the ball by storing disk encryption keys in software. Last summer, Dan Goodin wrote for Ars Technica that this "leaves the keys vulnerable to a variety of attacks that can pull a key off a device."

If Google can't manage to get basic device encryption working on Android, what hope is there that its platform of low-end hardware and partner-delegated software engineering will get its platform's camera features up to speed with Apple, a company with a clear history of delivering substantial, incremental progress rather than just an occasional pep rally for vanity hardware releases that do not actually sell in meaningful volumes?

Beyond deep: Vision and CoreML

On-device machine vision is now becoming possible as Apple's mobile devices are packed with lots of computational power with the capability to discern objects, position, movement and even recognize faces and specific people— technology Apple had already been applying to static photographs in its Photos app, but is now doing live in-camera during composition.

Depth aware camera capture certainly isn't the only frontier in iOS imaging. In addition to the new features that require a dual camera Plus or the new iPhone X TrueDepth camera sensor, Apple's iOS 11 also introduces new camera intelligence that benefits previous generations of iOS devices.

Apple's new iOS 11 Vision framework provides high-performance image analysis, using computer vision techniques to recognize faces, detect facial features and identify scenes in images and video.

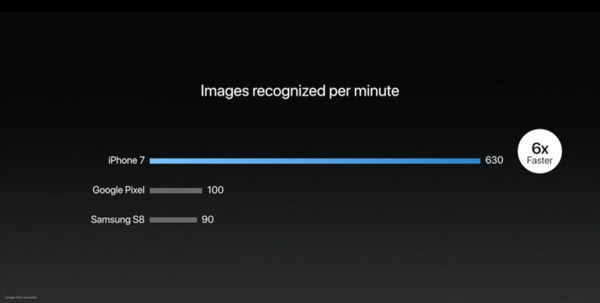

The Vision framework can be used along with CoreML, which uses Machine Learning to apply a trained model against incoming data to recognize and correlate patterns, to suggest what the camera is looking at with a given degree of certainty. Apple noted this summer that CoreML was already six times faster than existing Androids, and that was on iPhone 7, before it released the radically enhanced A11 Bionic powering iPhone 8 and iPhone X.

Apple has already been using ML in its camera, as well as in Siri and the QuickType keyboard. Now it's opening that capability up to third party developers as well.

CoreML is built on top of iOS 11's Metal 2 and Accelerate frameworks, so its tasks are already optimized for crunching on all available processors. And paired with Vision, it already knows how to do face detection, face tracking (in video), landmark detection, text detection, rectangle detection, bar code detection, object tracking, and image registration.

Those sophisticated features are things users can feel comfortable with their personal device doing internally, rather than relying on cloud services that require exposing what the camera sees to a network service that might collect and track that data or expose sensitive details to corporate hackers or nefarious governments.

There's also another layer of camera-based imaging technology Apple is introducing in iOS 11, described in the next segment of this series.

Daniel Eran Dilger
