When you use code to control network infrastructure, you need tools to evaluate the potential impact of a change before you apply it in production, because even a simple error can cause an outage. But when you operate a hyperscale cloud platform, the expression “test network” takes on a meaning of its own: you simply can’t replicate your entire network for testing. The next best option, Microsoft figured, is emulating the network. That is what it has done with CrystalNet, a virtual copy of the entire Azure network infrastructure named after the crystal ball a fortune teller might use.

The Azure team has been using CrystalNet in production for six months, and according to the company it has already prevented several potential incidents. For example, testing the tools and scripts used to migrate Azure data centers to new regional backbone networks turned up more than 50 bugs, several of which could have triggered an outage. These regional backbones interconnect data centers within a region and bypass Microsoft’s existing data center WAN.

The team working on SONiC, Microsoft’s open source switch OS, also uses CrystalNet to find bugs that unit testing and even a small testbed missed, such as crashes after several BGP sessions flapped. Those bugs surfaced quickly once the full production environment was emulated.

Firmware Bugs, Configuration Errors, and Typos

“Our network consists of tens of thousands of devices, sourced from numerous vendors, and deployed across the globe,” Microsoft’s Azure team explained in a paper presented at the recent ACM Symposium on Operating Systems Principles (SOSP). “These devices run complex (and hence bug-prone) routing software, controlled by complex (and hence bug-prone) configurations. Furthermore, churn is ever-present in our network: apart from occasional hardware failures, upgrades, new deployments and other changes are always ongoing.”

The Azure team spent two years working with Microsoft Research to analyze all documented cloud outages suffered by all major cloud providers, including its own. They found that some form of hardware failure was the root cause of 29 percent of the significant incidents that affected customers, while software bugs caused 36 percent. Most of those were bugs in router and switch software and firmware, and occasionally in network management tools. In one case, an unhandled exception in a network automation tool ended up shutting down an entire router instead of a single BGP session.

Usually, though, the problem was device firmware. One update stopped a router from announcing certain IP prefixes, so traffic would either flood that network or fail to reach the right place. Another left routes out of date because it didn’t refresh the ARP cache when the peering configuration was updated. And one vendor changed the format of ACLs in a new version of its software but forgot to document the change.
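A missing-announcement bug like the first one is the kind of regression an emulated network can expose before rollout: dump the prefixes a router announces before and after the upgrade and diff them. The sketch below is a hypothetical illustration, not CrystalNet's interface; it assumes the announcements have already been exported as lists of prefix strings, and the function name `missing_announcements` is invented for the example.

```python
import ipaddress


def missing_announcements(before, after):
    """Return prefixes announced before an upgrade but absent afterwards.

    `before` and `after` are iterables of prefix strings such as "10.0.0.0/24".
    The export format is an assumption made for this sketch.
    """
    before_set = {ipaddress.ip_network(p) for p in before}
    after_set = {ipaddress.ip_network(p) for p in after}
    # Sort the network objects so the report order is stable, then render as text.
    return [str(net) for net in sorted(before_set - after_set)]


pre_upgrade = ["10.1.0.0/24", "10.2.0.0/24", "192.168.0.0/16"]
post_upgrade = ["10.1.0.0/24", "192.168.0.0/16"]
print(missing_announcements(pre_upgrade, post_upgrade))  # ['10.2.0.0/24']
```

Normalizing through `ipaddress` rather than comparing raw strings means that equivalent spellings of the same IPv4 prefix are not reported as differences.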

Devices from different vendors, or from a single vendor running different firmware versions, can implement the same routing protocols slightly differently. In one scenario the paper describes, two vendors calculated aggregated network paths differently. This caused a high-level router to send packets down a single path rather than splitting them across the two available paths, which unbalanced traffic across the network.
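Why a small disagreement collapses load balancing can be sketched with equal-cost multipath (ECMP) selection. A router installs only the candidate routes tied for best and hashes flows across them. In this hypothetical model, a single "path length" number stands in for whatever attribute the two vendors computed differently. The paper's exact mechanism is not reproduced here, and the router names and flow strings are made up.

```python
import zlib
from collections import Counter


def multipath_next_hops(routes):
    """Install every candidate route tied for the best (lowest) path length.

    `routes` is a list of (next_hop, path_length) pairs for one aggregate prefix;
    the single numeric attribute is a simplifying assumption.
    """
    best = min(length for _, length in routes)
    return [hop for hop, length in routes if length == best]


def spread_flows(next_hops, flows):
    """Hash each flow identifier onto one installed next hop and count the result."""
    counts = Counter()
    for flow in flows:
        counts[next_hops[zlib.crc32(flow.encode()) % len(next_hops)]] += 1
    return counts


flows = [f"10.0.0.{i % 250}:{1000 + i}->10.9.0.1:443" for i in range(100)]
# Both downstream routers describe the aggregate the same way: traffic is split.
print(spread_flows(multipath_next_hops([("router-A", 2), ("router-B", 2)]), flows))
# The two descriptions differ: only one route is installed and carries everything.
print(spread_flows(multipath_next_hops([("router-A", 2), ("router-B", 3)]), flows))
```

The second call prints `Counter({'router-A': 100})`. The exact split in the first call depends on the hash, but both next hops share the 100 flows.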

Configuration bugs such as overlapping IP assignments, incorrect AS numbers, or missing ACLs caused 27 percent of the failures, according to the researchers. Those configurations are usually managed automatically; the bugs creep in when ad hoc changes are made to handle planned updates or to work around other failures. Testing those changes before the live network is updated catches the kinds of mistakes that are easy to make in the middle of an update or an outage.
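Overlapping IP assignments are among the easiest of these mistakes to screen for mechanically. The following is a minimal sketch of such a pre-change check. It assumes the proposed allocations are available as a mapping from an owner (here, hypothetical rack names) to a CIDR prefix. It is not part of CrystalNet, which instead catches such errors by running the real configuration.

```python
import ipaddress
from itertools import combinations


def find_overlaps(allocations):
    """Return pairs of owners whose allocated prefixes overlap.

    `allocations` maps an owner name to a CIDR prefix string; the owner names
    and the mapping format are illustrative assumptions.
    """
    nets = {owner: ipaddress.ip_network(cidr) for owner, cidr in allocations.items()}
    overlaps = []
    for (a, net_a), (b, net_b) in combinations(nets.items(), 2):
        # Only compare prefixes of the same IP version.
        if net_a.version == net_b.version and net_a.overlaps(net_b):
            overlaps.append((a, b))
    return overlaps


proposed = {"rack-1": "10.0.0.0/24", "rack-2": "10.0.1.0/24", "rack-3": "10.0.0.128/25"}
print(find_overlaps(proposed))  # [('rack-1', 'rack-3')]
```

The pairwise comparison is quadratic, which is fine for a change-sized batch of allocations. A full address plan would call for a sorted sweep instead.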

The team found that 6 percent of the outages were caused by human error, such as someone typing the wrong command, but these weren’t the result of carelessness. “Operators do not have a good environment to test their plans and practice their operations with actual device command interfaces,” the authors wrote.

Existing network verification tools don’t work the way network configuration tools do. The workflow is so different that testing changes with them in advance doesn’t serve as a practice run. CrystalNet is designed to give operators the same workflow for validating changes as the one they use for making them. Operators can pull routing tables and packet traces and log in to emulated devices to check their status. That lets them inject test traffic and verify routing tables exactly as they would on the physical network. The approach has already eliminated typos during major changes to the Azure network.
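Verifying a routing table after a change boils down to checking that destinations still resolve to the expected next hops under longest-prefix matching. This sketch assumes a hypothetical table already dumped from an emulated device as a `{prefix: next_hop}` dictionary. The device names are made up, and this is not CrystalNet's own tooling.

```python
import ipaddress


def lookup(table, destination):
    """Longest-prefix match over a {prefix: next_hop} routing table."""
    addr = ipaddress.ip_address(destination)
    best_net, best_hop = None, None
    for prefix, next_hop in table.items():
        net = ipaddress.ip_network(prefix)
        # Compare like with like (IPv4 vs IPv6) and prefer the most specific prefix.
        if net.version == addr.version and addr in net:
            if best_net is None or net.prefixlen > best_net.prefixlen:
                best_net, best_hop = net, next_hop
    return best_hop


def mismatches(table, expectations):
    """Return {destination: (expected, actual)} for every failed expectation."""
    result = {}
    for destination, expected in expectations.items():
        actual = lookup(table, destination)
        if actual != expected:
            result[destination] = (expected, actual)
    return result


table = {"0.0.0.0/0": "spine-1", "10.1.0.0/16": "spine-2", "10.1.2.0/24": "tor-7"}
print(mismatches(table, {"10.1.2.5": "tor-7", "10.1.9.9": "tor-7"}))
# {'10.1.9.9': ('tor-7', 'spine-2')}
```

An operator would run the same expectations against the emulated table before a change and against the production table afterwards, which mirrors the "same workflow" idea above.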

500 Cloud VMs and a Few Minutes to Set Up

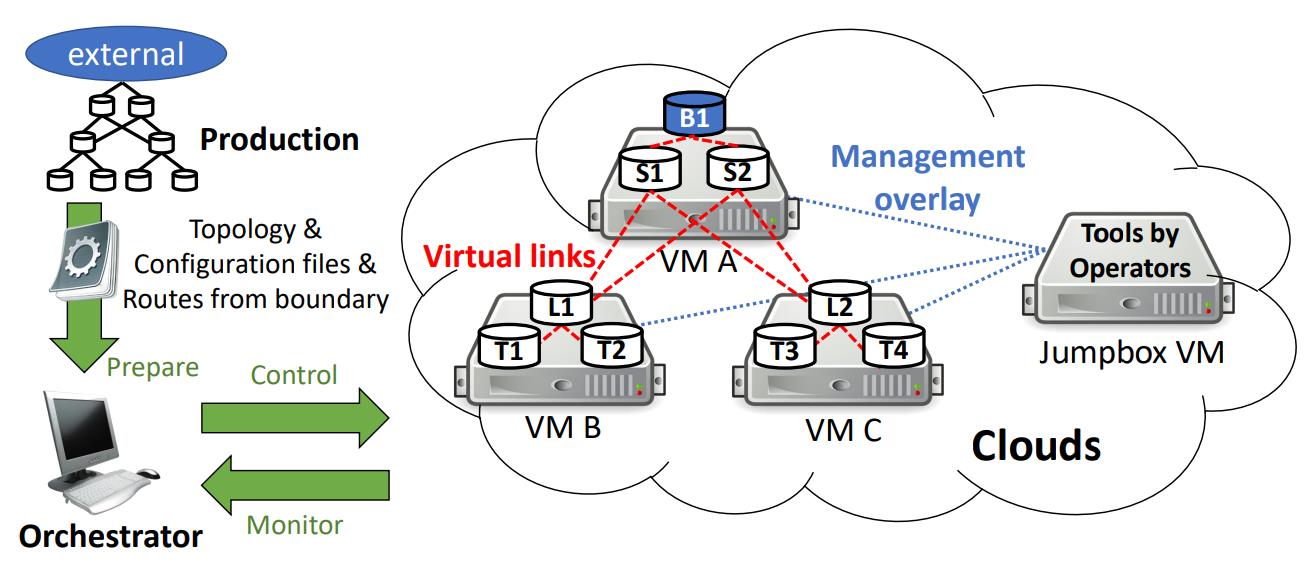

CrystalNet is an accurate copy of the network, with the same interfaces and workflow, because it runs real device firmware in Docker containers and virtual machines. “Each device's network namespace has the same Ethernet interfaces as in the real hardware; the interfaces are connected to remote ends via virtual links, which transfer Ethernet packets just like real physical links; and the topology of the overlay network is identical with the real network it emulates.” It loads real configurations into emulated devices and injects real routing states into the emulated network, while management tools run in VMs and connect to devices just as they do in the production network.
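The bookkeeping behind an overlay whose "topology is identical with the real network" can be sketched as follows. Each production link becomes a virtual link between two (device, interface) endpoints, and each device's namespace must expose exactly the interfaces that appear in the topology. This is a hypothetical model of the wiring only. It does not create namespaces or links, and the device and interface names are invented.

```python
from collections import defaultdict


def build_overlay(links):
    """Map each (device, interface) endpoint to its remote end, like a virtual link.

    `links` is a list of ((device, interface), (device, interface)) pairs taken
    from the production topology. Raises ValueError if an interface is wired twice.
    """
    peer = {}
    for end_a, end_b in links:
        for local, remote in ((end_a, end_b), (end_b, end_a)):
            if local in peer:
                raise ValueError(f"{local} is already connected to {peer[local]}")
            peer[local] = remote
    return peer


def interfaces_by_device(peer):
    """Group endpoints per device: the interfaces each emulated namespace needs."""
    result = defaultdict(list)
    for device, interface in sorted(peer):
        result[device].append(interface)
    return dict(result)


links = [
    (("tor1", "Ethernet0"), ("leaf1", "Ethernet4")),
    (("tor1", "Ethernet1"), ("leaf2", "Ethernet4")),
]
overlay = build_overlay(links)
print(overlay[("tor1", "Ethernet0")])  # ('leaf1', 'Ethernet4')
print(interfaces_by_device(overlay))
# {'leaf1': ['Ethernet4'], 'leaf2': ['Ethernet4'], 'tor1': ['Ethernet0', 'Ethernet1']}
```

Rejecting double-wired interfaces early keeps the emulated topology a faithful one-to-one copy of the real one.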

CrystalNet architecture

All of this runs in Azure VMs. Device firmware that isn’t available as a container image runs as a VM inside a container, using nested virtualization and a KVM hypervisor. To keep resource use down, each VM hosts multiple devices, and external devices are modeled as simple, static devices instead of running real firmware or configurations. Emulating a network of 5,000 devices takes 500 VMs (with 4 cores and 8GB RAM each), which costs $100 an hour on Azure and takes only a few minutes to set up.
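The figures above imply an average of 10 emulated devices per VM and about $0.20 per VM-hour. Those averages are derived from the article's numbers, not published pricing or packing rules. With them, the footprint works out to 2,000 cores and 4,000GB of RAM. A small helper makes the arithmetic explicit.

```python
import math


def emulation_footprint(devices, devices_per_vm, cores_per_vm, ram_gb_per_vm, price_per_vm_hour):
    """Estimate VM count, total cores, total RAM, and hourly cost for an emulation.

    All inputs are averages; real packing depends on each device's firmware image.
    """
    vms = math.ceil(devices / devices_per_vm)
    return {
        "vms": vms,
        "cores": vms * cores_per_vm,
        "ram_gb": vms * ram_gb_per_vm,
        "usd_per_hour": round(vms * price_per_vm_hour, 2),
    }


print(emulation_footprint(5000, devices_per_vm=10, cores_per_vm=4, ram_gb_per_vm=8, price_per_vm_hour=0.20))
# {'vms': 500, 'cores': 2000, 'ram_gb': 4000, 'usd_per_hour': 100.0}
```

Under these averages, cost scales linearly with device count. For example, a 1,000-device enterprise network would need about 100 VMs.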

Testing Complex Live Network Migrations

CrystalNet isn’t the only tool Azure network engineers use. It won’t find memory leaks or timing bugs. And while what-if analysis and verification tools such as Batfish and Minesweeper don’t reflect the live network as precisely, they are more efficient at answering questions like, “Is there a scenario where one or more links fail and send excessive traffic through this particular switch?”
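To make that kind of what-if question concrete, here is a brute-force sketch. It enumerates every combination of up to two link failures on a toy topology and reports the scenarios in which transit traffic through one switch exceeds a threshold. This deliberately naive enumeration is not how Batfish or Minesweeper work, since those tools use more sophisticated techniques. It also simplifies routing by splitting each demand evenly across all shortest paths. The topology, demand, and capacity are made-up values.

```python
from collections import deque
from itertools import combinations


def bfs(adj, src):
    """Return hop distances and shortest-path counts from `src`."""
    dist, count = {src: 0}, {src: 1}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v], count[v] = dist[u] + 1, count[u]
                queue.append(v)
            elif dist[v] == dist[u] + 1:
                count[v] += count[u]
    return dist, count


def build_adj(nodes, links, failed):
    adj = {n: set() for n in nodes}
    for a, b in links:
        if (a, b) not in failed:
            adj[a].add(b)
            adj[b].add(a)
    return adj


def load_through(adj, switch, demands):
    """Transit load on `switch`, splitting each demand evenly over all shortest paths."""
    total = 0.0
    for src, dst, volume in demands:
        ds, cs = bfs(adj, src)
        if dst not in ds or switch in (src, dst):
            continue  # unreachable demand, or the switch is an endpoint rather than transit
        dt, ct = bfs(adj, dst)
        if switch in ds and switch in dt and ds[switch] + dt[switch] == ds[dst]:
            total += volume * cs[switch] * ct[switch] / cs[dst]
    return total


def risky_failures(nodes, links, switch, demands, capacity, max_failures=2):
    risky = []
    for k in range(1, max_failures + 1):
        for failed in combinations(links, k):
            load = load_through(build_adj(nodes, links, set(failed)), switch, demands)
            if load > capacity:
                risky.append((failed, load))
    return risky


nodes = ["t1", "t2", "s1", "s2"]
links = [("t1", "s1"), ("t1", "s2"), ("t2", "s1"), ("t2", "s2")]
demands = [("t1", "t2", 10)]
print(load_through(build_adj(nodes, links, set()), "s1", demands))  # 5.0
for failed, load in risky_failures(nodes, links, "s1", demands, capacity=6):
    print(failed, load)
```

With no failures, spine `s1` carries half of the 10 units of traffic. Losing `t1`–`s2`, `t2`–`s2`, or both pushes all 10 units through it, which exceeds the assumed capacity of 6.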

Formal verification tools aren’t as useful as you might expect, because they assume that devices work correctly. “In reality, device behavior is far from ideal,” the authors note. Still, these tools are worth running first as a low-fidelity check, because they need far fewer resources than CrystalNet.

The technology could also be useful for emulating enterprise networks and for router and switch vendors testing their devices and firmware. Microsoft hasn’t committed to making it available. However, Victor Bahl, a distinguished scientist and director at Microsoft, dropped a strong hint in a blog post that this is likely, writing that “this genuine interest in CrystalNet is driving us to continuously work with Azure engineers to perfect the technology.”