When I started at @WalmartLabs, I was placed on a team tasked with creating a new web framework from scratch that could power large, public-facing websites.

I recently had the opportunity to speak about this experience at OSCON. The title of the talk was “Satisfying Business and Engineering Requirements: Client-server JavaScript, SEO, and Optimized Page Load”, which is quite the mouthful.

What the title attempted to encapsulate, and what the talk communicated, was how we solved the SEO and optimized page load issues for public-facing websites while keeping UI engineers, myself included, happy and productive. Let’s take a look at how we achieved this with the creation of a new isomorphic JavaScript web framework, LazoJS.

Requirements

There were three main requirements that the framework had to support:

- SEO – Sites must be crawlable by all search engine indexers

- Optimized Page Load – Pages must load quickly; no spinners for primary/indexed content

- Optimized Page Transitions – Users must be able to navigate quickly between pages

With these requirements in hand, we set out to identify and collaborate with stakeholders.

Stakeholders

In addition to meeting the minimum requirements, it was important to our team to account for each stakeholder’s needs. During the discovery process we identified three classes of stakeholders, along with some general needs and goals for each group.

- Product Managers – Primary concern is meeting business needs by creating innovative products

- Engineers – Primary concerns are clean and efficient code, and working in productive, engaging environments

- Engineering Managers – Primary concern is delivering quality code as quickly and as cheaply as possible while balancing the needs of engineers and product managers

Once we had identified the primary stakeholders and had a better understanding of their needs, we looked at industry trends.

Forward Thinking

It is no secret that once you create software, it is destined to die: it will eventually be replaced by a better solution.¹ Knowing this, it was important that we create a framework with the longest shelf life possible to ensure maximum return on the development cost, so we looked at recent trends in the industry. We didn’t discover anything shocking. JavaScript and the Web as a platform are a big deal, which is not news to anyone who has been paying attention to the industry for the past few years. Node.js adoption and its module ecosystem have been growing at a remarkable rate, and the web is being used to power computer and phone operating systems, as well as other applications across a variety of devices. We wanted to factor in this trend, in addition to the requirements and stakeholder needs, to help ensure the longevity of the framework.

Evaluating Solutions

With all the requirements in place, our next step was to begin the evaluation process. We examined three primary solutions: the classic web application, single page application, and hybrid web application models.

Classic Web Application

In this model the content is rendered on the server, a chunk of HTML is sent to the browser, the browser parses the HTML, and finally the client life cycle is initialized. This would meet the main requirements, but the problem we found is that over time you end up duplicating logic across the client and server, which is inefficient.

For example, if a product page has product reviews as secondary content, then pagination is required to navigate through the reviews. Refreshing the entire page is extremely inefficient, so the typical solution is to use AJAX to fetch each page of reviews when paginating. The next optimization would be to fetch only the data required to render that page of reviews, which would require duplicating templates, models, assets, and rendering logic on the client, along with additional unit tests to cover them. This is a very simple example, but if you extrapolate the concept over a large application, the application becomes difficult to follow and maintain: one cannot easily derive how an application ended up in a given state. Additionally, the duplication is a waste of resources, and it opens up an application to bugs being introduced across two UI codebases whenever a feature is added or modified.
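The duplication described above disappears if one rendering function serves both environments. The sketch below is an illustration only, not LazoJS code: `renderReviewPage`, `renderProductPage`, and the `{ author, text }` review shape are hypothetical. The server calls `renderProductPage` to embed the first page of reviews in the full response, and the client, after fetching only review data, calls the very same `renderReviewPage` for later pages.

```javascript
// Minimal HTML escaping for text inserted into markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical shared template: renders one page of reviews (pages are 1-based).
// In a classic app this logic would typically exist twice: once as a server
// template and once as a client-side template for AJAX pagination.
function renderReviewPage(reviews, page, pageSize) {
  const start = (page - 1) * pageSize;
  const items = reviews
    .slice(start, start + pageSize)
    .map((r) => '<li>' + escapeHtml(r.author) + ': ' + escapeHtml(r.text) + '</li>')
    .join('');
  return '<ul class="reviews" data-page="' + page + '">' + items + '</ul>';
}

// Server side: the full product page embeds the first page of reviews.
function renderProductPage(product, reviews, pageSize) {
  return '<html><body><h1>' + escapeHtml(product.name) + '</h1>' +
    renderReviewPage(reviews, 1, pageSize) + '</body></html>';
}
```

On the client, a pagination handler would fetch the review data for page `n` and replace the existing list with `renderReviewPage(reviews, n, pageSize)`, so there is no second template to keep in sync.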

Single Page Application

The next solution we evaluated was the single page application (SPA) model. This solution eliminates the issues that plague classic web applications by shifting the responsibility of rendering entirely to the client. We really liked this model because it separates application logic from data retrieval, consolidates UI code into a single language and runtime, and significantly reduces the load on the servers. However, it did not meet our requirements.

For instance, for an SPA to fully support SEO, it requires running the DOM on the server for search engine indexing.² In browsers that do not support the history API, SPA routing relies on the location hash, which does not lend itself well to SEO either. SPAs also need to fetch data and resources before rendering content, so this solution did not meet the optimized page load requirement unless the DOM was run on the server to serve first page loads, which is slow and requires additional serving resources. We did not want to create a framework that required additional effort³ just to meet the minimum requirements, so we passed on the SPA approach.

Hybrid Web Application

The final model we evaluated was the hybrid web application model.⁴ It is similar to the SPA in that it consolidates UI code into a single code base, but that code can run on either the client or the server. A good example is Airbnb’s Rendr. This model gives an application the flexibility to render the first page response on the server, fully supporting SEO and optimized page loads, which would meet our minimum requirements without any additional effort.

The Selection

We decided to go with the hybrid web application model because it met the requirements and best served all stakeholders while still being forward thinking. Below are a few thoughts that arose during our decision-making process.

- We thought that the UI should belong to the UI engineers, be it on the server or the client, and liked the clear lines of separation between the back and front ends; this separation makes both engineering teams happier and development cleaner.

- We liked the distributed rendering of the SPA model for subsequent page requests from clients that support the history API; this approach also lessens server load.

- We liked that we could support fully qualified URLs out of the box and gracefully fall back to server rendering for clients that don’t support the history API; this made SEO support effortless.

- We liked that there would be a single code base for the UI with a common rendering life cycle. This meant there would be no duplicated effort, which reduces UI development costs.

- Having a single UI code base would be easier to maintain, which meant that we could ship features faster.
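The history API fallback in the list above boils down to a small decision. This is a minimal sketch, not LazoJS code: `supportsHistoryApi`, `navigationMode`, `onLinkClick`, and the `render` callback are hypothetical names. It only assumes the standard browser `history.pushState` method, which a client-side router needs in order to change the URL without a full reload.

```javascript
// Hypothetical helper: true when a window-like object exposes history.pushState.
function supportsHistoryApi(win) {
  return Boolean(win && win.history && typeof win.history.pushState === 'function');
}

// Decide who renders a navigation:
// - 'server': a client's first request, or any client without pushState;
//   the server renders the full page at a fully qualified URL.
// - 'client': a later request in a capable browser; rendering is distributed.
function navigationMode(win, isFirstRequest) {
  if (isFirstRequest || !supportsHistoryApi(win)) {
    return 'server';
  }
  return 'client';
}

// Sketch of a link click handler in the browser. `render` is a hypothetical
// client-side rendering function supplied by the application.
function onLinkClick(event, win, render) {
  if (navigationMode(win, false) === 'server') {
    return; // let the browser perform a normal full page load
  }
  event.preventDefault();
  const url = event.currentTarget.getAttribute('href');
  win.history.pushState({}, '', url); // update the address bar without reloading
  render(url);
}
```

Because links keep their real `href` values, crawlers and older browsers simply follow them to server-rendered pages, which is what makes the fallback graceful.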

The End Result

After evaluating what currently existed in the market, we ended up creating a new hybrid web framework, LazoJS. Let’s take a high-level look at the primary pieces and life cycles of LazoJS. More information can be found on the LazoJS wiki.

Why Something New

We built something new because nothing existed that met our requirements. We definitely learned from other libraries and extended them, but in the end we needed a production-ready, full-fledged isomorphic JavaScript framework designed to power large public-facing websites, with an interface tailored to front-end engineers.

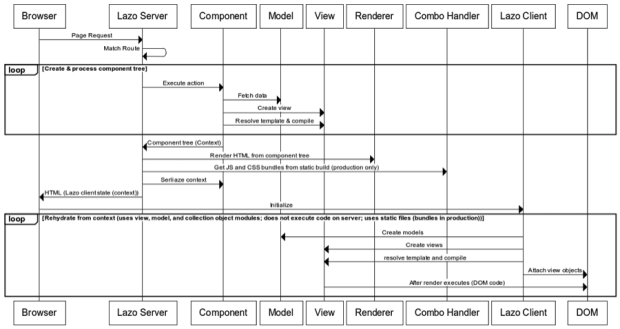

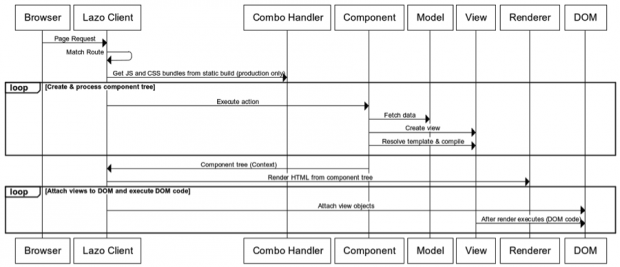

Request Life Cycles

Routes are mapped to component actions. These actions are responsible for returning a page response.

The initial page request for a given client is rendered on the server in order to provide SEO support and an optimized page load.

First page response for a client is rendered on the server for SEO support and an optimized page load.

Subsequent page requests for a given client are executed on the client if it supports the history API. This lessens the load on servers, and improves the responsiveness of the client.

Subsequent page requests for a client are rendered on the client in order to distribute rendering and improve performance.
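Mapping routes to component actions can be illustrated with a small route table and matcher. The `{param}` placeholder syntax mirrors Hapi’s path parameters, but the table format, the `component.action` strings, and `matchRoute` are hypothetical and are not LazoJS’s actual route configuration, which is documented on the LazoJS wiki.

```javascript
// Hypothetical route table: URL patterns mapped to "component.action" targets.
const routes = {
  '/': 'home.index',
  '/products/{id}': 'product.show',
  '/products/{id}/reviews/{page}': 'reviews.list',
};

// Match a request path against the table. Returns the component, the action,
// and any extracted path parameters, or null when no pattern matches.
function matchRoute(table, path) {
  const pathParts = path.split('/').filter(Boolean);
  for (const [pattern, target] of Object.entries(table)) {
    const patternParts = pattern.split('/').filter(Boolean);
    if (patternParts.length !== pathParts.length) continue;
    const params = {};
    const matched = patternParts.every((part, i) => {
      const placeholder = /^\{(\w+)\}$/.exec(part);
      if (placeholder) {
        params[placeholder[1]] = decodeURIComponent(pathParts[i]);
        return true;
      }
      return part === pathParts[i]; // literal segments must match exactly
    });
    if (matched) {
      const [component, action] = target.split('.');
      return { component, action, params };
    }
  }
  return null;
}
```

The matcher only decides which action handles a URL; where that action runs follows the life cycle above, on the server for a client’s first request and in the browser for later requests when the history API is available.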

Core Pieces

LazoJS was built on top of a proven stack of existing open source technologies:

- Node.js – serving platform

- Hapi – powers the application server

- Backbone.js – base for isomorphic views, models, and collections

- RequireJS – isomorphic, asynchronous module system

- jQuery – DOM utility library

Application Structure

We wanted LazoJS applications to be easy to understand at a glance, so we settled on a simple structure. Environment-specific code is placed in “server” and “client” directories. A custom RequireJS loader automatically replaces server modules with no-ops on the client, and client modules with no-ops on the server.

Example LazoJS application directory structure

This structure makes it easier to onboard new developers, add new features, and debug issues because a developer knows exactly where to place and locate code.
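The no-op behavior can be sketched as a resolver that a module loader might consult before loading a module. This is not the actual LazoJS RequireJS loader, whose code is not shown here; `isExcluded`, `resolveModule`, and the `loadModule` callback are hypothetical, and the only assumption carried over from the text is that environment-specific code lives under “server” and “client” directories.

```javascript
// Hypothetical check: is this module meant only for the *other* environment?
function isExcluded(modulePath, env) {
  const other = env === 'server' ? 'client' : 'server';
  return modulePath.split('/').includes(other);
}

// Return the real module, or an empty no-op stand-in when the module belongs
// to the other environment, so shared code can still declare the dependency.
function resolveModule(modulePath, env, loadModule) {
  if (isExcluded(modulePath, env)) {
    return {};
  }
  return loadModule(modulePath);
}
```

Shared modules (anything outside those two directories) load normally in both environments, which is what lets a single code base run on either side.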

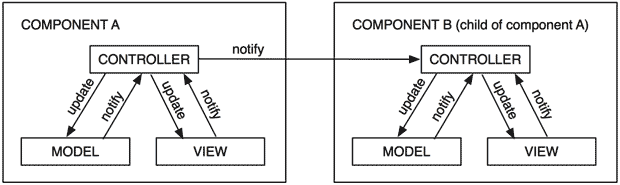

Components

Components in this sense are the realization of reusability, encapsulation, and composition in LazoJS. They allow a page request to be broken into multiple, parameterized MVC micro applications. LazoJS components can be reused and composed within a single page request and across multiple page requests.

Components encapsulate the MVC pattern into reusable micro applications with their own life cycles.

Components execute action functions. The end result of a component action is markup and DOM bindings on the client.
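A component as described here, a parameterized micro application whose actions produce markup and which can contain other components, might be modeled roughly as follows. This is a plain JavaScript sketch, not the LazoJS component API: `createComponent`, the `actions` and `children` options, and the synchronous actions are simplifying assumptions, and DOM binding on the client is left out.

```javascript
// Hypothetical component factory. Each component has named actions (the
// controller step, which builds a model from parameters), a view (which turns
// the model into markup), and optional child components for composition.
function createComponent({ name, actions, view, children = [] }) {
  return {
    name,
    run(actionName, params) {
      const action = actions[actionName];
      if (!action) {
        throw new Error(`${name}: unknown action "${actionName}"`);
      }
      const model = action(params);
      // Render children first; their markup is handed to the parent's view.
      const childMarkup = children.map((child) => child.component.run(child.action, params));
      return view(model, childMarkup);
    },
  };
}

// A reusable reviews component...
const reviews = createComponent({
  name: 'reviews',
  actions: { list: (p) => ({ count: p.reviewCount }) },
  view: (m) => `<section>${m.count} reviews</section>`,
});

// ...composed into a product page component.
const product = createComponent({
  name: 'product',
  actions: { show: (p) => ({ title: `Product ${p.id}` }) },
  view: (m, kids) => `<article><h1>${m.title}</h1>${kids.join('')}</article>`,
  children: [{ component: reviews, action: 'list' }],
});
```

Here `product.run('show', { id: 42, reviewCount: 3 })` produces the article markup with the reviews section nested inside it, and the same `reviews` component could be dropped into any other page.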

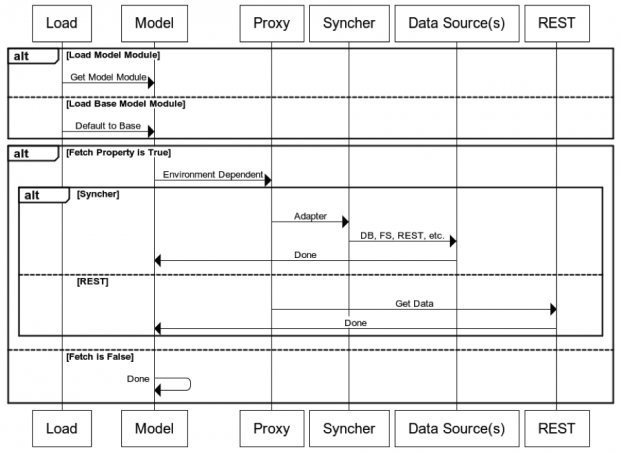

Models

LazoJS extends Backbone models and collections, allowing them to run on both the client and the server. In addition to this isomorphic functionality, LazoJS also has server-side data aggregators, called synchers, which back model and collection CRUD operations. This layer is useful for, among other things, stubbing out services during development and mashing up service endpoints⁵ to meet UI requirements.
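The aggregation role of a syncher can be sketched as a server-side function that calls several services and merges the results into the one payload a model needs, so the client makes a single request. The sketch does not use LazoJS’s syncher API: `productPageSyncher`, `getProduct`, `getReviewSummary`, and the field names are hypothetical, and stubbing out services for development just means passing in different functions.

```javascript
// Hypothetical syncher-style aggregator: one model read on the server fans
// out to multiple back-end services and returns a single, UI-shaped object.
async function productPageSyncher(services, productId) {
  // Call both services concurrently and wait for both results.
  const [product, reviewSummary] = await Promise.all([
    services.getProduct(productId),
    services.getReviewSummary(productId),
  ]);
  // Shape the combined result for the UI model.
  return {
    id: product.id,
    name: product.name,
    price: product.price,
    rating: reviewSummary.average,
    reviewCount: reviewSummary.count,
  };
}

// Stubbed services for local development; no real back end is required.
const stubServices = {
  getProduct: async (id) => ({ id, name: 'Widget', price: 9.99 }),
  getReviewSummary: async () => ({ average: 4.5, count: 12 }),
};
```

Swapping `stubServices` for an object whose functions call real endpoints would leave the model, and every view that uses it, unchanged.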

LazoJS model and collection life cycle

Roadmap

LazoJS is still in its infancy, but it has a stable API and is powering applications in production. We run the bleeding edge in master while developing, and release new versions once they have been fully vetted and are running in production. There are lists of enhancements on GitHub and internally that are currently being prioritized. Additionally, I am working on an application generator to make it easier to spin up new applications quickly. I don’t foresee any breaking changes in the API, only enhancements, so it is ready for consumption!

¹ Death of software is progress, and it is a good thing. However, it is also important to ensure that what you create is not a flash in the pan; otherwise, it creates unnecessary instability in the applications that rely on it when it has to be replaced prematurely.

² Google recently announced indexing support for JavaScript applications. However, they state that “Sometimes things don’t go perfectly during rendering”, and that “It’s always a good idea to have your site degrade gracefully” in case the process fails and to support other search engines.

³ If you already have a heavy investment in an existing SPA solution, running or emulating the DOM on the server might be a viable option.

⁴ This model is sometimes referred to as isomorphic JavaScript or the “Holy Grail”.

⁵ This translates to a single network request from the client, because the client makes one call for the model, which proxies its backing syncher.

Editor’s note: If you’re looking to dig deeper into front-end JavaScript, you’ll want to check out Web Components by Jarrod Overson and Jason Strimpel.

Public domain crystallography illustration courtesy of Internet Archive.