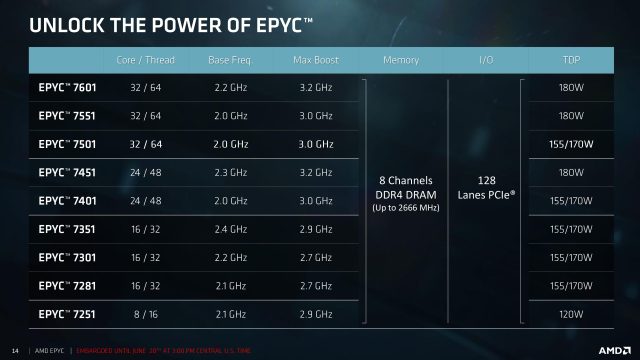

Most of the Epyc SKUs.

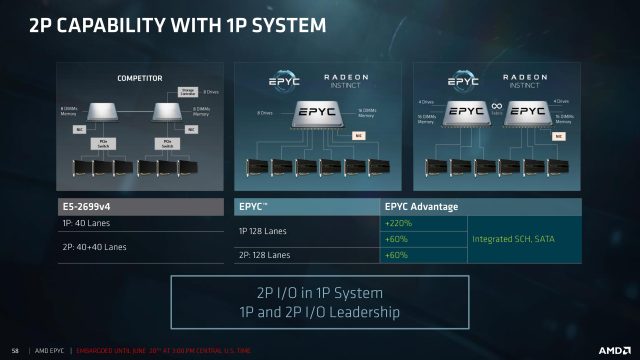

Epyc has tons of I/O.

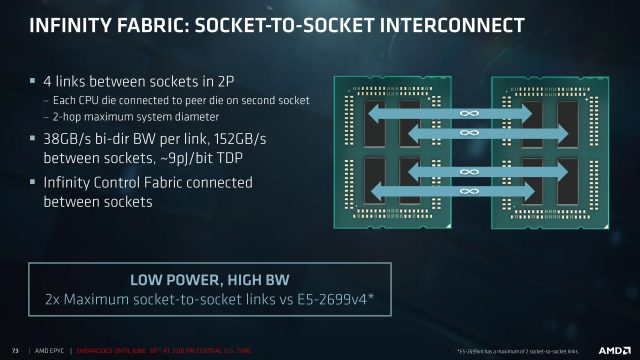

Infinity Fabric interconnects.

AUSTIN—Today, AMD unveiled the first generation of Epyc, its new range of server processors built around its Zen architecture. Processors will range from the Epyc 7251—an eight-core, 16-thread chip running at 2.1 to 2.9GHz in a 120W power envelope—up to the Epyc 7601: a 32-core, 64-thread monster running at 2.2 to 3.2GHz, with a 180W design power.

AMD initially revealed its server chips, codenamed "Naples," earlier this year. Since then, we've known the basics of the new chips: they'll have 128 PCIe lanes and eight DDR4 memory controllers, and they'll support one- or two-socket configurations. With today's announcement, we now know much more about how the processors are put together and what features they'll offer.

The basic building block of all of AMD's Zen processors, both Ryzen on the desktop and Epyc in the server, is the eight-core, 16-thread chip. Ryzen processors use one of these; the Threadripper high-end desktop chips use two; and Epyc uses four. Each chip includes two memory controllers, a bunch of PCIe lanes, power management, and, most important of all, Infinity Fabric, AMD's high-speed interconnect that is derived from coherent HyperTransport.

From our look at Ryzen, we already know that Infinity Fabric (IF) is used to connect two blocks of four cores (called "core complexes," or CCXes) within each eight-core chip. IF is also used to connect the chips within the multi-chip module (MCM) and, in two-socket configurations, to connect the two sockets.

Within the processor, each chip has three IF links, one to each of the other three chips. Each link runs at up to 42GB/s in each direction. That matches the 42GB/s of memory bandwidth offered by the two channels of 2,667MT/s DDR4 memory that each individual chip supports, so any one chip within the Epyc MCM can use the full memory bandwidth of the entire processor without bottlenecks. Accessing memory that's connected to a different chip incurs somewhat higher latency than accessing directly connected memory, but it comes at no bandwidth penalty.
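The 42GB/s figure falls out of simple arithmetic. The sketch below assumes the memory is DDR4-2667 (2,667 million transfers per second) on standard 64-bit (8-byte) channels; the function name is purely illustrative.

```python
# Peak DDR4 bandwidth arithmetic for Epyc, assuming 64-bit (8-byte) channels.


def channel_bandwidth_gbs(mega_transfers_per_s: float, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one memory channel in GB/s (10**9 bytes per second)."""
    return mega_transfers_per_s * 1e6 * bus_bytes / 1e9


per_channel = channel_bandwidth_gbs(2667)  # one DDR4-2667 channel
per_chip = 2 * per_channel                 # two channels per eight-core chip
per_package = 4 * per_chip                 # four chips per Epyc package

print(f"per channel: {per_channel:.1f} GB/s")  # 21.3
print(f"per chip:    {per_chip:.1f} GB/s")     # 42.7
print(f"per package: {per_package:.1f} GB/s")  # 170.7
```

The per-chip result of roughly 42GB/s is the figure each internal Infinity Fabric link is sized to match; a chip pulling from its own two channels plus three links can therefore reach roughly the whole package's bandwidth.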

In two-socket configurations, there are four IF links between the sockets. Each chip in one socket is paired with a chip in the other socket, for four pairs in total, with one IF link between each pair. This design means that accessing remote memory incurs at most a two-hop penalty, and that data has multiple routes from a chip on one socket to a chip on the other. The cross-socket IF links are slightly slower than the internal ones, operating at 38GB/s bidirectional, because they carry higher error-checking overhead, which uses up some of their bandwidth.
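The two-hop claim can be checked with a breadth-first search over a simplified model of the topology: each socket's four chips are fully connected, and chip n on one socket links to chip n on the other. The chip labels (S0D0 and so on) are hypothetical, and real hardware may route traffic differently.

```python
from collections import deque
from itertools import combinations


def two_socket_topology() -> dict[str, set[str]]:
    """Hypothetical model of a two-socket Epyc system: 2 sockets x 4 chips."""
    graph = {f"S{s}D{d}": set() for s in range(2) for d in range(4)}

    def link(a: str, b: str) -> None:
        graph[a].add(b)
        graph[b].add(a)

    for s in range(2):
        # Within a socket, every chip has one IF link to each of the other three.
        for a, b in combinations(range(4), 2):
            link(f"S{s}D{a}", f"S{s}D{b}")
    for d in range(4):
        # Across sockets, chip d is paired with chip d: four links in total.
        link(f"S0D{d}", f"S1D{d}")
    return graph


def bfs_hops(graph: dict[str, set[str]], src: str) -> tuple[dict[str, int], dict[str, int]]:
    """Return hop count and number of distinct shortest routes from src to each chip."""
    hops = {src: 0}
    routes = {src: 1}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in hops:
                hops[nxt] = hops[node] + 1
                routes[nxt] = routes[node]
                queue.append(nxt)
            elif hops[nxt] == hops[node] + 1:
                # Another shortest route reaches nxt through node.
                routes[nxt] += routes[node]
    return hops, routes


graph = two_socket_topology()
worst_case = max(max(bfs_hops(graph, src)[0].values()) for src in graph)
hops, routes = bfs_hops(graph, "S0D0")
print("worst-case hops:", worst_case)  # worst-case hops: 2
print("S0D0 -> S1D2:", hops["S1D2"], "hops via", routes["S1D2"], "routes")  # 2 hops via 2 routes
```

A chip reaching a non-partner chip on the other socket can go across first and then within the socket, or within the socket first and then across, which is where the multiple routes come from.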

Both the internal and external IF connections are power managed. When little traffic is crossing the links, the processor slows them down to cut their energy usage. Power not spent on the links can instead go to the cores themselves; AMD says this power management can improve performance per watt by as much as eight percent.

In total, each processor offers 128 I/O lanes. In two-socket configurations, 64 lanes from each processor are used for Infinity Fabric connectivity, leaving an aggregate of 128 lanes still available (2 × 128 − 2 × 64). As such, one-socket and two-socket configurations offer nearly identical I/O options. The main use for these lanes is PCIe connectivity, with up to eight PCIe 3.0 x16 connections per system.
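The lane budget for one- and two-socket systems can be written out as a small calculation. This is a sketch using only the figures quoted above (128 lanes per processor, 64 of them given over to Infinity Fabric when a second socket is present); the constant and function names are hypothetical.

```python
# Hypothetical I/O lane budget for one- and two-socket Epyc systems.

LANES_PER_SOCKET = 128
IF_LANES_PER_SOCKET_2P = 64  # lanes each processor spends on cross-socket IF


def usable_io_lanes(sockets: int) -> int:
    """Lanes left over for PCIe and other I/O across the whole system."""
    if sockets == 1:
        return LANES_PER_SOCKET
    if sockets == 2:
        return sockets * (LANES_PER_SOCKET - IF_LANES_PER_SOCKET_2P)
    raise ValueError("Epyc supports one- or two-socket configurations only")


for sockets in (1, 2):
    lanes = usable_io_lanes(sockets)
    print(f"{sockets}P: {lanes} lanes -> {lanes // 16} x16 slots or {lanes} x1 links")
```

Both configurations come out at 128 usable lanes, which is why the I/O options are nearly identical.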

These can be subdivided all the way down to 128 PCIe 3.0 x1 links, and there's a good degree of flexibility to the possible configurations of PCIe lanes. Each chip can use eight of its links as SATA connections, too. This is one of the few areas where a two-socket system will give you more I/O capabilities; with two sockets, the chips would support a total of 16 SATA connections.

Epyc is designed as a system-on-chip. Many features that would typically need additional components on the motherboard have been integrated into what AMD calls the Server Controller Hub (SCH) within the Epyc processor itself. This includes four USB 3.0 controllers, serial port controllers, clock generation, and low-speed interfaces such as I2C. The one notable I/O component not in the processor is Ethernet; for that, you'll need a PCIe card or motherboard-integrated interface.

A scaled-up Ryzen?

In most other regards, Epyc is little different from a scaled-up Ryzen—not altogether surprising, given the common heritage. Ryzen features, such as individually adjusting the voltage on a per-core basis, are found in Epyc, for example.

Some of these features have an Epyc twist, however. Like Ryzen, Epyc can boost clock speeds depending on usage levels. The top-end 7601 part, for example, has a base speed of 2.2GHz, with an all-cores boost of 2.7GHz and a maximum boost of 3.2GHz. Ryzen's maximum boost is very limited, only applying with one or two cores active. Epyc's is a bit more versatile; that 3.2GHz can be reached with up to 12 cores active.
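A simplified reading of the 7601's boost figures is sketched below. The 12-core threshold and the clock speeds come from the paragraph above; what happens between 13 and 31 active cores is not stated, so falling back to the 2.7GHz all-core boost in that range is an assumption, not AMD's documented policy.

```python
# Simplified, hypothetical model of Epyc 7601 boost ceilings.
# Boost is opportunistic: these are upper bounds, and only the
# 2.2GHz base clock is guaranteed.

ALL_CORE_BOOST_GHZ = 2.7
MAX_BOOST_GHZ = 3.2
MAX_BOOST_CORE_LIMIT = 12  # max boost is reachable with up to 12 active cores
TOTAL_CORES = 32


def boost_ceiling_ghz(active_cores: int) -> float:
    """Highest clock the model allows for a given number of active cores."""
    if not 1 <= active_cores <= TOTAL_CORES:
        raise ValueError(f"active_cores must be between 1 and {TOTAL_CORES}")
    if active_cores <= MAX_BOOST_CORE_LIMIT:
        return MAX_BOOST_GHZ
    # Assumption: beyond 12 active cores, fall back to the all-core boost.
    return ALL_CORE_BOOST_GHZ


for n in (1, 12, 13, 32):
    print(n, "cores ->", boost_ceiling_ghz(n), "GHz")
```

By contrast, a model of Ryzen's maximum boost would set the threshold at two active cores.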

Epyc chips also offer two modes, set at boot time, that let you pick between consistent performance and consistent power usage. In performance mode, the chip will offer repeatable, consistent clock speeds and boosting, drawing more power as required. In power mode, the chip will tightly stick to an upper bound for power usage and cut performance, if necessary, to stay within that envelope. This isn't available on the desktop chips, where power constraints are relatively lax and governed more by the cooling system than anything else. But it is valuable in densely packed server racks, where the overall power draw of a rack is often constrained.
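The difference between the two modes can be illustrated with a toy governor. This is a sketch under stated assumptions: power is modeled as k × f³ with an arbitrary k of 1W/GHz³, the mode is passed as a parameter standing in for the boot-time setting, and the names are hypothetical rather than any real firmware interface.

```python
from enum import Enum


class PowerMode(Enum):
    PERFORMANCE = "performance"  # repeatable clocks; power draw floats
    POWER = "power"              # hard power ceiling; clocks float


def operating_frequency_ghz(
    mode: PowerMode,
    target_ghz: float,
    power_cap_w: float,
    watts_per_ghz_cubed: float = 1.0,
) -> float:
    """Pick a clock speed under a toy model where power = k * f**3."""
    if mode is PowerMode.PERFORMANCE:
        # Hold the target clock and draw whatever power it takes.
        return target_ghz
    # Highest clock whose modeled power stays within the cap.
    capped_ghz = (power_cap_w / watts_per_ghz_cubed) ** (1 / 3)
    return min(target_ghz, capped_ghz)


print(f"{operating_frequency_ghz(PowerMode.PERFORMANCE, 3.2, 20.0):.2f}")  # 3.20
print(f"{operating_frequency_ghz(PowerMode.POWER, 3.2, 20.0):.2f}")        # 2.71
```

In performance mode the clock never moves; in power mode the clock gives way whenever the target would exceed the cap, which is the behavior that matters in a rack with a fixed power budget.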

The on-chip power management also tries to detect certain workload patterns and reduce clock speed accordingly. In workloads that cause bursts of activity followed by idle periods, Epyc will reduce the clock speed during those bursts. This makes them take a little longer and cuts down the idle time. Because power usage tends to scale with the cube of the clock speed (dynamic power scales with voltage squared times frequency, and voltage must rise roughly in step with frequency), AMD argues that this behavior can produce a net reduction in power usage: at maximum speed, any power saved during idle is more than offset by the extra power used during the activity bursts. So cutting that peak power draw will lead to an overall reduction in power usage, even if a core is idling less.

This stands in contrast to the normal "race to idle" behavior that's often used to reduce power usage, wherein a processor runs as fast as it can as briefly as it can, because idling is so overwhelmingly superior from a power-usage perspective. It might also have some latency impact since each burst of work will take a little longer to complete.
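AMD's argument can be made concrete with a toy energy model. The sketch assumes active power of k × f³ with arbitrary constants (k = 1W/GHz³, 2W idle, 2 billion cycles of work every second); it deliberately ignores leakage and platform power, the factors that usually make race to idle attractive, so it shows the direction of AMD's argument rather than real Epyc numbers.

```python
def energy_per_period_j(
    freq_ghz: float,
    work_gcycles: float,
    period_s: float,
    k_w_per_ghz3: float = 1.0,
    idle_w: float = 2.0,
) -> float:
    """Energy for one burst of work plus the idle time that follows it."""
    busy_s = work_gcycles / freq_ghz
    if busy_s > period_s:
        raise ValueError("work does not fit in the period at this clock speed")
    active_j = k_w_per_ghz3 * freq_ghz ** 3 * busy_s  # burst at f**3 power
    idle_j = idle_w * (period_s - busy_s)             # remainder spent idle
    return active_j + idle_j


for f in (3.2, 2.4):
    print(f"{f} GHz: {energy_per_period_j(f, work_gcycles=2.0, period_s=1.0):.2f} J")
# 3.2 GHz: 21.23 J
# 2.4 GHz: 11.85 J
```

Slowing the burst from 3.2GHz to 2.4GHz stretches it from 0.625s to about 0.83s, but because active energy grows with f² per unit of work, the total roughly halves in this model.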

For Epyc, AMD is also promoting some features that appear to be available in Ryzen too (at least, there are firmware options to control them) but only make much sense in server configurations. For example, Epyc supports encrypted system memory. Each memory controller has an encryption engine that transparently encrypts everything written to RAM and decrypts everything read back. This can operate in two modes: a global mode, in which all memory is encrypted using keys generated by the processor, and a software-controlled mode that lets, for example, memory belonging to different virtual machines use different encryption keys.
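The idea of transparent, per-VM-keyed encryption at the memory controller can be sketched with a toy model. Everything here is hypothetical and illustrative: the SHA-256 keystream is a stand-in chosen for brevity, it is not secure, and it is not the cipher AMD's engine uses. Key ID 0 plays the role of the global, processor-generated key; other IDs stand in for per-VM keys.

```python
import hashlib
import os


class ToyEncryptingMemoryController:
    """Illustrative only: NOT real cryptography and NOT AMD's design."""

    def __init__(self) -> None:
        self._keys = {0: os.urandom(32)}  # key 0: global key generated on-chip
        self._ram: dict[int, bytes] = {}  # address -> ciphertext as stored

    def add_vm_key(self, key_id: int) -> None:
        """Software-controlled mode: give a VM its own random key."""
        self._keys[key_id] = os.urandom(32)

    def _keystream(self, key_id: int, address: int, length: int) -> bytes:
        key = self._keys[key_id]
        out = bytearray()
        counter = 0
        while len(out) < length:
            block = key + address.to_bytes(8, "little") + counter.to_bytes(4, "little")
            out.extend(hashlib.sha256(block).digest())
            counter += 1
        return bytes(out[:length])

    def write(self, address: int, data: bytes, key_id: int = 0) -> None:
        """Encrypt on the way to RAM; the caller only ever sees plaintext."""
        stream = self._keystream(key_id, address, len(data))
        self._ram[address] = bytes(a ^ b for a, b in zip(data, stream))

    def read(self, address: int, key_id: int = 0) -> bytes:
        """Decrypt on the way back from RAM using the requester's key."""
        ciphertext = self._ram[address]
        stream = self._keystream(key_id, address, len(ciphertext))
        return bytes(a ^ b for a, b in zip(ciphertext, stream))

    def raw(self, address: int) -> bytes:
        """What a physical attacker probing the DIMM would see."""
        return self._ram[address]


mc = ToyEncryptingMemoryController()
mc.add_vm_key(1)
mc.add_vm_key(2)
mc.write(0x1000, b"guest secret", key_id=1)
print(mc.read(0x1000, key_id=1))  # b'guest secret'
```

Reading the same address with VM 2's key, or probing the raw contents, yields unrelated bytes, which is the isolation property the software-controlled mode is meant to provide.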

Epyc also supports data poisoning. Typically, when ECC memory finds an uncorrectable error, the default operating-system behavior is to bring down the entire machine. With data poisoning, the operating system can instead choose to crash only the process or virtual machine that contained the error, leaving the rest of the machine unaffected.
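The policy difference can be shown with a toy model of how an operating system might react to an uncorrectable ECC error. The class and method names are hypothetical; the point is only the contrast between halting everything and terminating the owner of the poisoned data.

```python
from dataclasses import dataclass


@dataclass
class ToyMachine:
    processes: dict[int, str]  # pid -> process or VM name
    poisoning_enabled: bool
    halted: bool = False

    def on_uncorrectable_error(self, owner_pid: int) -> None:
        """React to an uncorrectable memory error in data owned by owner_pid."""
        if not self.poisoning_enabled:
            # Default behavior: the whole machine goes down.
            self.halted = True
            self.processes.clear()
            return
        # With data poisoning: only the owner of the bad data is terminated.
        self.processes.pop(owner_pid, None)


machine = ToyMachine({1: "database", 2: "web", 3: "vm-guest"}, poisoning_enabled=True)
machine.on_uncorrectable_error(owner_pid=2)
print(machine.halted, sorted(machine.processes))  # False [1, 3]
```

With poisoning disabled, the same error would leave the machine halted with nothing running.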

Compared to the Broadwell-based Xeons that are currently on the market, Epyc looks very compelling. It offers considerably more I/O than Intel's chips (which only offer 40 PCIe lanes per chip), and it offers considerably more cores per socket. AMD's line-up is also much more consistent, with the same set of features available across the entire range (with some small exceptions; the company will have three single-socket-only chips, with model numbers ending in P).

The low-end parts don't omit any reliability or security features found in the high-end parts, so the only choices are the number of cores and the clock speeds you need or can afford. In the very limited benchmarks AMD has demonstrated, Epyc 7601 handily beats a pair of Xeon E5-2699A v4 processors, Intel's fastest two-socket Xeons.

But Intel's new generation of Xeons, built around the Skylake-SP core, is right around the corner. AMD says that it built Epyc to beat not just Broadwell but also Skylake. That comparison looks set to be far more complex. AMD will certainly offer more memory bandwidth (Skylake-SP has only six memory channels to Epyc's eight), and AMD will likely offer more cores and threads per socket than Intel. But Skylake-SP's single-threaded performance is better than Zen's, and Intel's use of monolithic dies, rather than multi-chip modules, should give Intel's chips lower-latency access to memory. Skylake-SP also adds new features such as AVX-512, which may give number-crunching applications a healthy boost.

How this will all turn out remains to be seen; until Skylake-SP hits the market we have no benchmarks between the two, and we might well expect to see different winners depending on the workload being run.

Either way, though, one thing is clear: Intel has a level of competition that it hasn't had for a while. Epyc may not be the best choice for every workload, but it's sure to be the right option for many. While pricing hasn't been announced yet, we expect AMD to continue its trend of undercutting its larger competitor. Just as Ryzen has done on the desktop, Epyc is creating options in the server room.

Listing image by AMD
