iPad Diaries is a regular series about using the iPad as a primary computer. You can find more installments here and subscribe to the dedicated RSS feed.

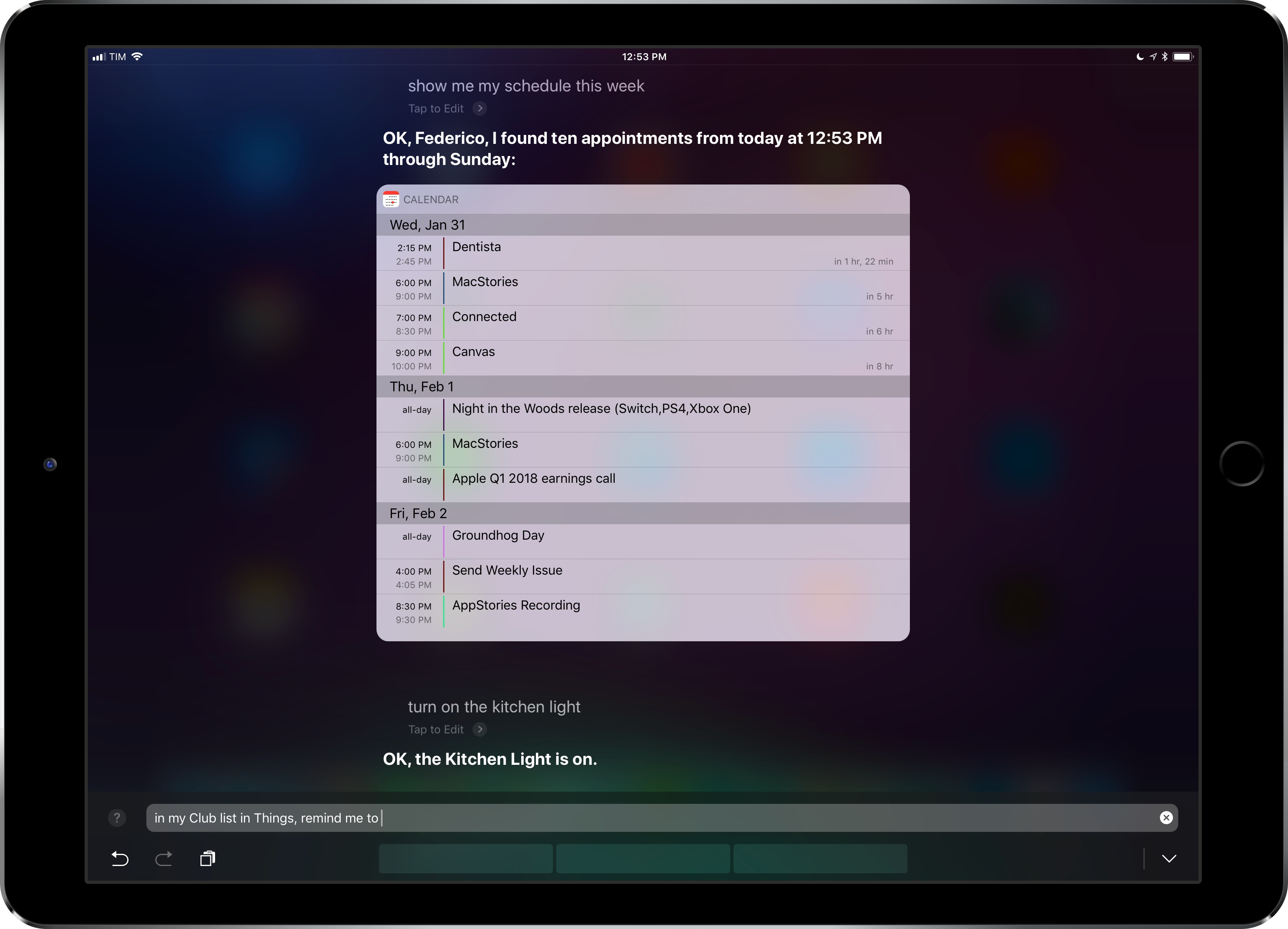

A couple of weeks ago, I shared a series of pictures on Twitter showing how I had been using iOS 11’s Type to Siri feature on my iPad Pro, which is always connected to an external keyboard when I’m working.

I left Type to Siri enabled on my iPad Pro since it’s always connected to a keyboard anyway, and it’s kind of like having a smart command line, which is pretty nice. pic.twitter.com/vRcGeAiHSX

— Federico Viticci (@viticci) January 20, 2018

I did not expect that offhanded tweet – and its “smart command line” description – to be so interesting for readers who replied or emailed me with a variety of questions about Type to Siri. Thus, as is customary for tweets that end up generating more questions than retweets, it’s time to elaborate with a blog post.

In this week’s iPad Diaries column, I’ll be taking a closer look at Type to Siri, my keyboard setup, and the commands I frequently use for Siri on my iPad; I will also detail some features that didn’t work as expected along with wishes for future updates to Siri.

Type to Siri

Introduced in iOS 11, Type to Siri is an accessibility setting that switches Siri’s primary input method from voice to keyboard. Arguably, this is the best and worst aspect of the feature depending on how you want to interact with Siri. On one hand, the current implementation of Type to Siri allows you to immediately start typing as soon as the Siri UI is displayed; on the other, once it is enabled, Type to Siri will no longer let you speak to the assistant if you invoke it by pressing the Home button.

As I noted in my review of iOS 11:

The problem is that Type to Siri and normal voice mode are mutually exclusive unless you use Hey Siri. If you prefer to invoke Siri with the press of a button and turn on Type to Siri, you won’t be able to switch back and forth between voice and keyboard input from the same screen. If you keep Hey Siri disabled on your device and activate Type to Siri, you’ll have no way to speak to the assistant in iOS 11.

I think Apple’s Siri team has been led by a misguided assumption. I hold my iPhone and open Siri using the Home button both when I’m home and when I’m out and about. Hey Siri is often unreliable in situations where I can speak to Siri; thus, I’d still like to keep voice interactions with Siri tied to pressing a button, but I’d also want a quick keyboard escape hatch for those times when I can’t speak to the assistant at all. Type to Siri should be a feature of the regular Siri experience, not a separate mode.

Despite my annoyance with Apple’s decision to make Type to Siri a simple on/off switch, I decided to give it a try on my iPad Pro last summer and, to my surprise, I’ve left it enabled for months now. Even with its current limitations – both in terms of the feature itself and Siri’s overall intelligence – Type to Siri has proven to be a useful addition to my average work day on the iPad Pro. In fact, I’d even argue that getting used to Type to Siri on my iPad has been a gateway to more frequent conversations with Siri on the iPhone and Apple Watch as well.

The “secret” to a productive Type to Siri experience is using an external iPad keyboard with a dedicated Home button key. Unfortunately, this excludes Apple’s Smart Keyboard, which doesn’t offer one.[1] The good news is that plenty of third-party iPad keyboards do. My two top iPad keyboard picks – the Logitech Slim Combo for the iPad Pro 12.9” and the Brydge keyboard[2] – come with a Home button key in the leftmost corner of the function row; the key can be clicked to go back Home, double-clicked to open multitasking, and long-pressed to open Siri. If you combine the convenience of a dedicated key with the fact that your hands stay on the keyboard when issuing commands to Siri, you can see why Type to Siri becomes an attractive proposition to control media and HomeKit devices, access personal data, and interact with SiriKit-enabled apps just by typing your requests.

The beauty of Type to Siri lies in the fact that it’s regular Siri, wrapped in a silent interaction method that leaves no room for misinterpretation. For the tasks Siri is good at, there’s a chance Type to Siri will perform better than its voice-based counterpart because typed sentences can’t be misheard.

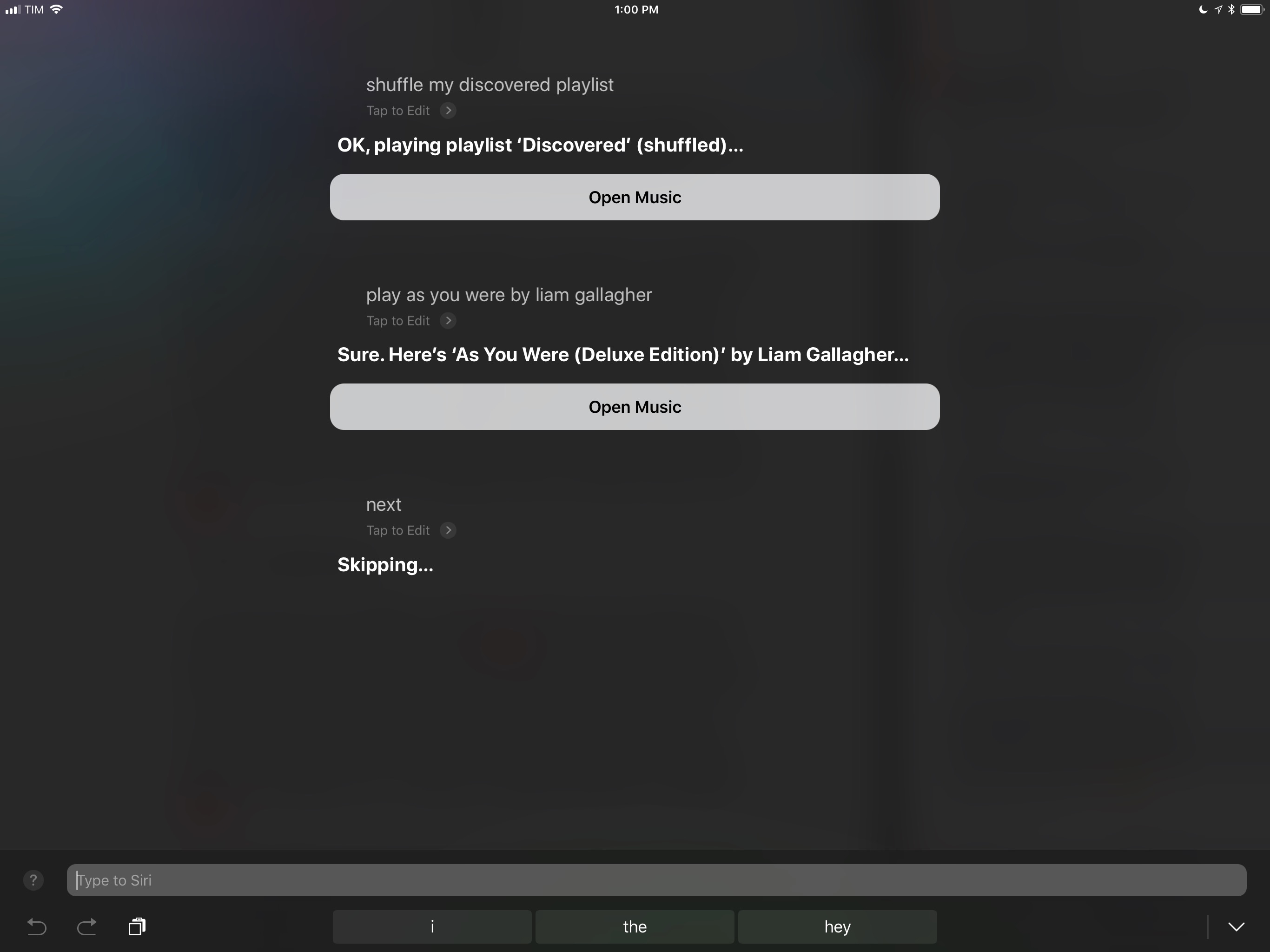

Queuing songs and controlling HomeKit accessories are examples of Siri functionality that keep me more focused on what I’m doing on the iPad while retaining the benefits of a digital assistant. While I’m writing an article for MacStories, I can quickly invoke Siri via the Home key and type “shuffle my Discovered playlist” to start playing some of the new songs I’ve recently come across. Another click on the Home key and I’m back into my text editor of choice; I didn’t have to leave Ulysses, speak to Siri, or interact with the Music app at all.[3]

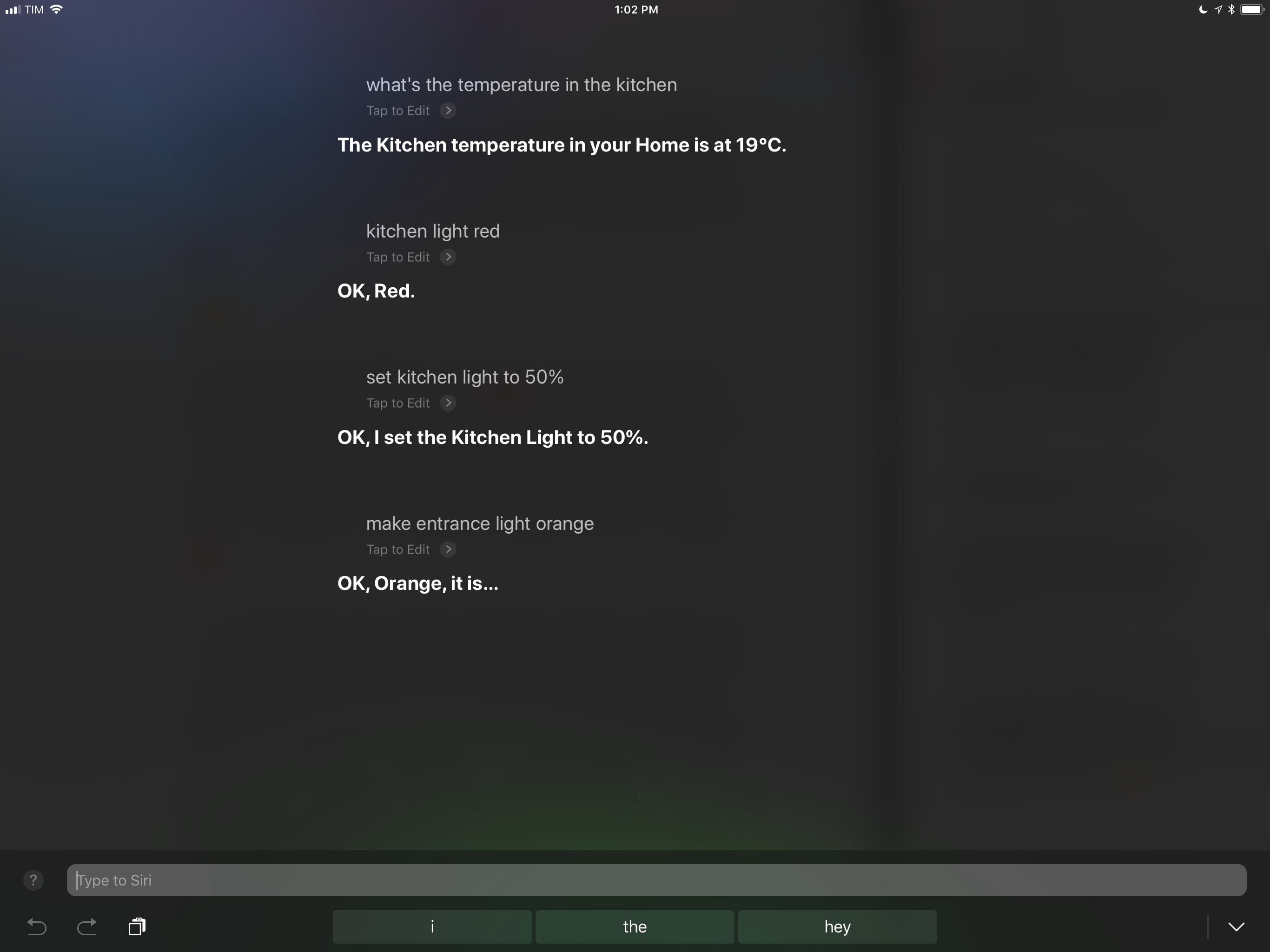

The same goes for HomeKit controls: rather than reaching for the screen to open Control Center[4] and interact with its HomeKit shortcuts, I can text Siri which accessories or states it should turn on and off. This is surprisingly effective if you’re typing at night and need to ask Siri to turn off the lights or change their color without a) lifting your fingers from the keyboard or b) waking a sleeping partner or puppies.[5]

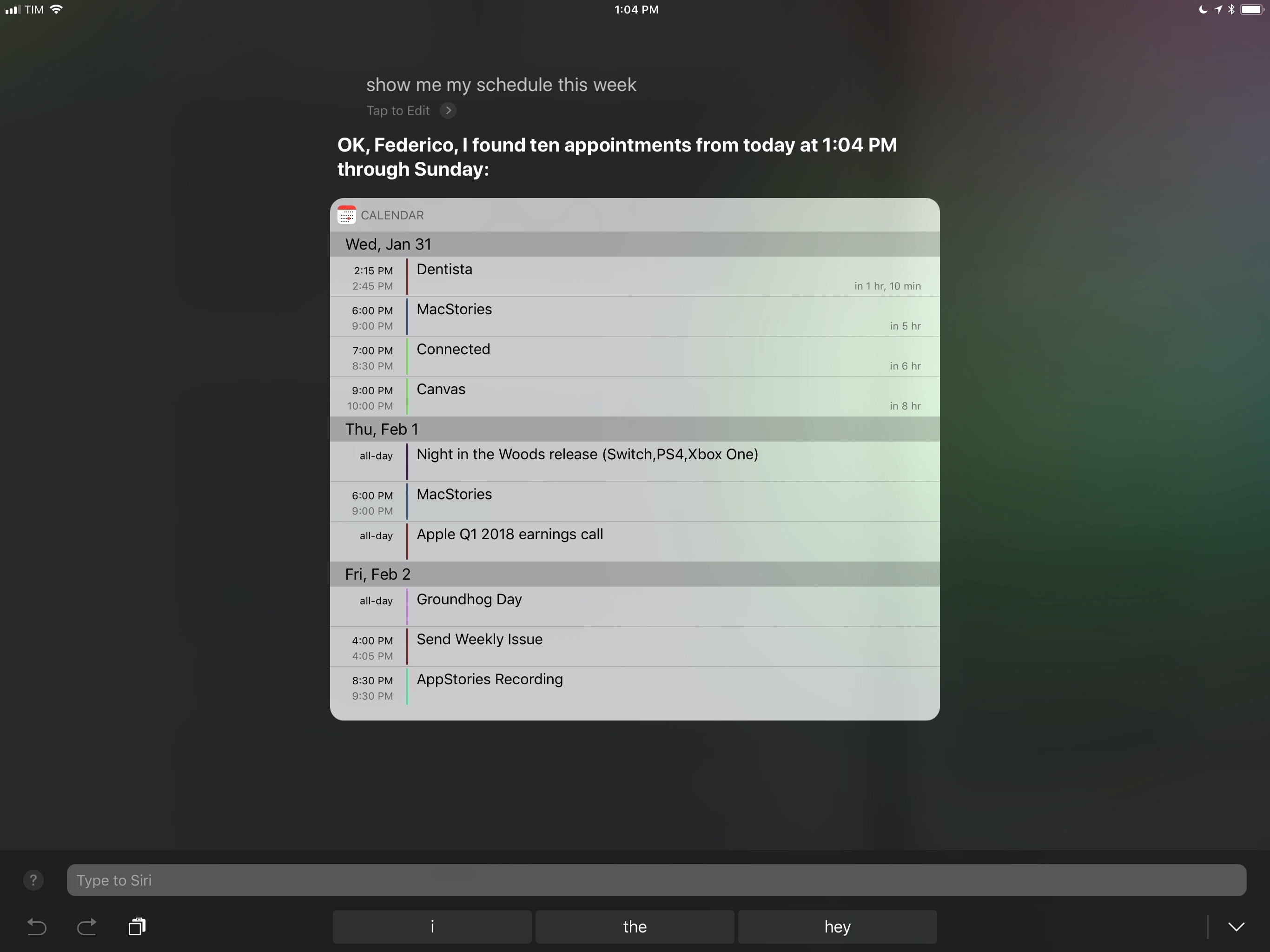

I’ve also gotten used to more productive aspects of Siri that do not involve music, HomeKit, or seeing a list of popular news from it. After typing “show me my schedule this week” (more on this below), I can follow up with “Move [Event Name] to…” and quickly reschedule it to another day. Again: no interaction with the Calendar app needed and no miscommunication issues. I can convert between currencies and perform quick calculations with Type to Siri; both tasks are well suited for a keyboard because they involve entering numbers and signs. Results from the Calculator UI in Siri even support drag and drop on iPad so you can manually export them to other apps.

Any third-party app that integrates with SiriKit can be summoned with Type to Siri to access one of its available intents. I’ve found this to be especially convenient for SiriKit apps that deal with longer strings of text, such as task managers or note-taking apps, because Type to Siri supports ⌘ + V to paste the contents of the system clipboard. Just copied some text that you want to save as a note in DEVONthink? With Type to Siri, all you need to do is type out the appropriate syntax, paste, and hit Return.

How about adding a new task to a project in Things? With SiriKit and Type to Siri, you can specify a task name, list, and reminder date, saving the task into the app without dealing with workflows or URL schemes (which, unlike SiriKit, would open the main app to save the new item). Sometimes, it’s refreshing to have the ability to create data in a third-party app running in the background without having to switch between apps.

Type to Siri likely wasn’t designed with this productivity angle in mind, but the mix of a physical keyboard, system frameworks, and SiriKit turns Siri into a useful, quiet iPad sidekick that saves me a few seconds every day and lets me keep my hands on the keyboard at all times.

This discussion, however, wouldn’t be complete without a mention of the other key part of the experience: native iOS text replacements.

Text Replacements

Long maligned due to their iCloud sync issues, iOS’ native text replacements (first introduced as ‘Shortcuts’ in iOS 5) have become one of my most used features among the lesser known ones on the platform. While text replacements do not offer the versatility and power-user options of the premier text expansion utility, TextExpander by Smile, they are a fantastic way to save time when typing text or emoji in any text field on iOS. I have dozens of text replacements set up on my devices, and I use them multiple times a day, every day. Plus, text replacement sync has vastly improved since Apple started using CloudKit, which has been rock solid for me.

A great feature of the Google Assistant is the ability to associate shortcut commands with longer ones so you don’t have to speak entire sentences every time you want to trigger a verbose action. I mention this because Siri’s primary voice interactions do not support shortcuts, but text replacements can be used to quickly enter “command templates” while in Type to Siri mode.

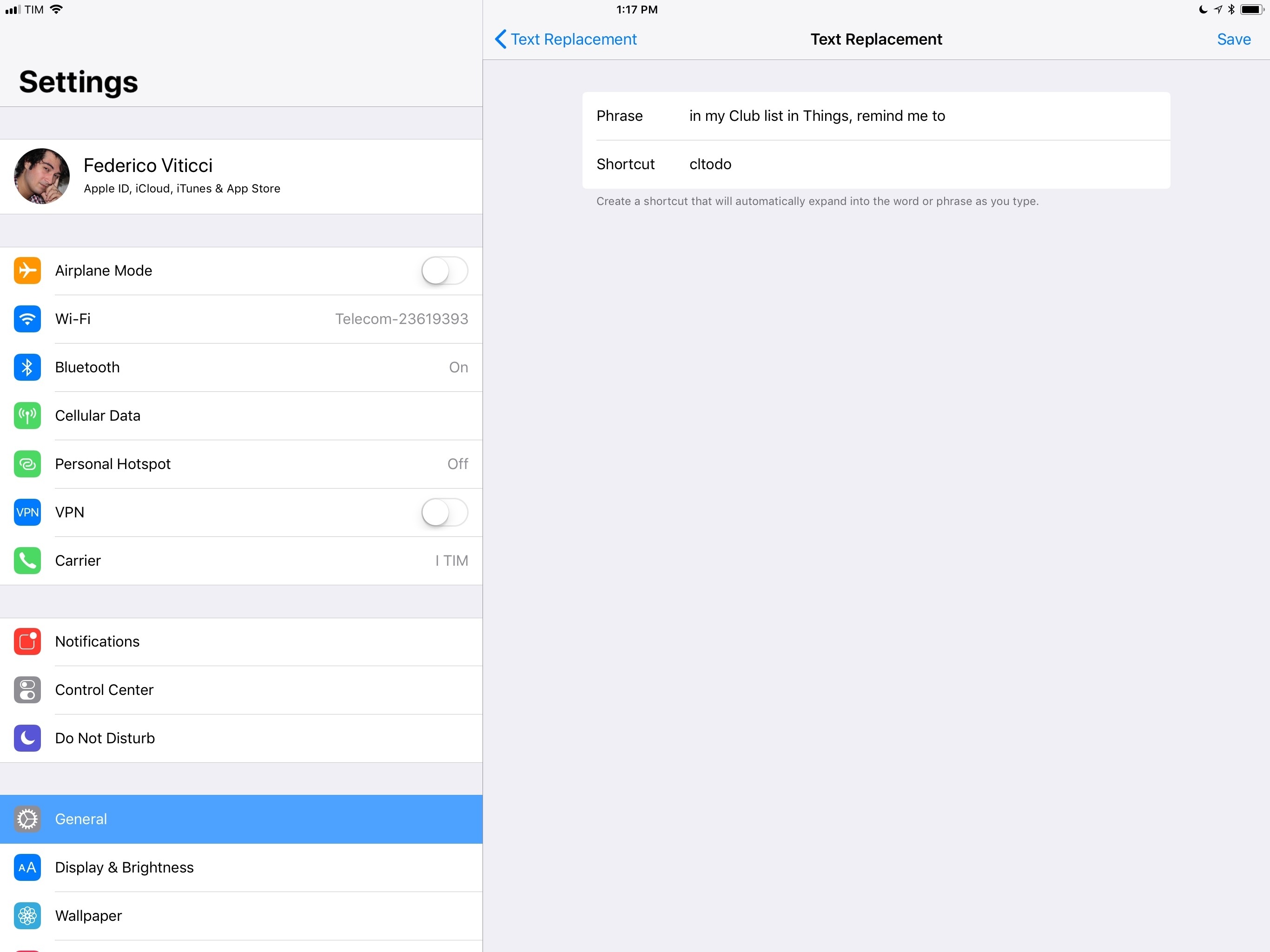

Some of the Siri actions I described above, as well as others I frequently use, require sentences that I don’t want to type out in their entirety every time. For instance:

- show me my schedule this week – displays a nice Calendar snippet with color-coded events from all calendars on the system, abbreviated as smms;

- search the web for... – shows web results that can be tapped to open a webpage directly in Safari, abbreviated as stw;

- show me my today list in Things – brings up a Things snippet that neatly separates tasks due Today and This Evening, abbreviated as smmt;

- show me my Upcoming list in Things – brings up a (not-so-nice) Things UI that shows me all upcoming tasks, even though they’re not grouped by day, abbreviated as smmu;

- In my MacStories list in Things, remind me to... – the syntax to create a new task in a Things project, abbreviated as mstodo.

Text replacements make it much easier to access Siri actions that demand multiple parameters to save data in specific locations, such as a project in a third-party task manager. Instead of speaking these long commands[6] or having to type them out fully, I can type mstodo, and it’ll be expanded into the complete Siri syntax necessary to create a new task in Things.

I have set up multiple variations of the same shortcut for different apps (for instance, I have three text replacements for three Things projects) and I’ve phrased these templates so that the actual contents of the request (like the title of a new task) are at the end of a sentence rather than in the middle. This way, I can invoke Siri, expand the shortcut, type, and hit Return without adjusting cursor position.
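
To make the template idea concrete, here is a minimal Python sketch of how an abbreviation could expand into a full Siri request with the variable part appended at the end. It only models the concept and says nothing about how iOS implements text replacements: the `expand_request` function and the `REPLACEMENTS` dictionary are hypothetical names, while the abbreviations and phrases are the ones from this column.

```python
# Illustrative model of text replacements used as Siri "command templates".
# The mapping mirrors the shortcuts described in this article; the function
# name and structure are hypothetical, not an iOS API.
REPLACEMENTS = {
    "smms": "show me my schedule this week",
    "stw": "search the web for",
    "smmt": "show me my today list in Things",
    "smmu": "show me my Upcoming list in Things",
    "mstodo": "In my MacStories list in Things, remind me to",
}


def expand_request(typed: str) -> str:
    """Expand a leading abbreviation and keep whatever follows it.

    Because each template puts the variable content last, the text typed
    after the abbreviation can simply be appended to the expansion.
    """
    shortcut, _, rest = typed.strip().partition(" ")
    template = REPLACEMENTS.get(shortcut)
    if template is None:
        # Not a known shortcut: pass the request through unchanged.
        return typed.strip()
    return f"{template} {rest.strip()}".strip()


print(expand_request("mstodo publish iPad Diaries"))
print(expand_request("smms"))
```

Keeping the variable part at the end is what makes the append step trivial; a template with a slot in the middle would need cursor movement or placeholder handling instead.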

Siri request templates based on text replacements made me appreciate the flexibility of SiriKit’s language model; they’re also pushing me to prioritize apps that have rich Siri integrations over those that still don’t support the assistant.

Still, I’d like to see a lot more domains be supported by SiriKit; in the future, I’d love to have a way to look up definitions in Terminology, start a podcast in Overcast, or start a timer in Timelogger just by asking Siri. I hope to see some progress on this front at WWDC 2018.

What Didn’t Work

Using Type to Siri on a regular basis on my iPad Pro has turned out to be an insightful experience insofar as it showed me areas where Siri is most lacking, particularly in terms of inconsistencies with Siri for macOS.

Here are some examples.

Files

I expected Siri on iPad to support folder navigation in Files and allow me to open specific folders with a request. Unfortunately, this isn’t possible. To the question “Open my Finance folder in Files”, Siri responds with a list of results from the web – it simply can’t look into the folder structure of Files and navigate to a location.

Similarly, Siri can’t show you documents that have been assigned a specific tag in Files. This feature is available on the Mac thanks to Siri’s Finder integration, but the same isn’t true on iOS despite iCloud Drive tags being shared between the two operating systems.

Basic searches for file names and file types don’t work in Siri either. Asking the assistant to “show files named Invoice” or “search for PDF documents in Files” will return an ever unhelpful list of results from Google search.

Mail

For the past year, I’ve been using SaneBox to automatically sort incoming messages into mailboxes for unimportant email, newsletters, and messages from certain senders I’ve configured on the SaneBox website. Alas, Siri can’t open my SaneLater or SaneNews folders if I ask it to open Mail to a specific mailbox.

Keyword-based searches fare slightly better, but they should be improved in the future. I can ask Siri to look for emails by a specific sender or with a keyword in the subject line, but only recent results are presented in the Siri UI; it would be useful to perform a deeper Mail search using Type to Siri, navigating results with the keyboard to pick a message to reopen in the Mail app. Searching for messages with a keyword in the body text isn’t supported at all.

Music

I’ve had mixed results with controlling music playback from Type to Siri, and I hope early signs of AirPlay 2 in the iOS 11.3 beta will grow into a new collection of music-related commands for search, device connections, and playback controls.

Type to Siri, just like regular Siri, can search Apple Music to play songs, shuffle playlists, and more. Right now, however, if a song is already playing and you send a typed request to Siri, audio will be paused even though Siri is responding via text. This to me feels like a bug: Type to Siri should behave differently than voice-activated Siri when audio is playing, but it doesn’t. This is annoying as adding songs to your Up Next queue via Siri results in a dance of pause-type-wait-resume that shouldn’t be the case with Type to Siri.

What’s even worse is that, due to another technical issue, sometimes Type to Siri starts playing something completely random and unrelated to my question. I haven’t been able to pin down the reason why Siri – in spite of results having been typed exactly as they’d appear in Apple Music – can’t find what I’m looking for. I’ve also noticed that, occasionally, Siri can’t find a song (entered with the song name by artist syntax) unless I add the word “song” to my question.

Lastly, right now Siri can’t route playback to another device, such as Apple TV, AirPods, or Bluetooth speakers. If I ask Siri to “connect to my AirPods”, it says it can’t find a Wi-Fi network named “AirPods”. Ideally, Siri should be able to interact with all the output sources available in Control Center and intelligently connect to them, per my request.

As we enter a new era of Apple’s entertainment ecosystem – one that is made of iOS devices with powerful speakers, but also AirPods, HomePod, and AirPlay 2 accessories – I want to see Siri become capable of connecting to them at a moment’s notice. It makes even more sense for Type to Siri to support this, as it wouldn’t fail to hear you over loud music playing around the house. I hope that the advent of AirPlay 2 will bring deeper Siri integration to control individual speakers, multi-room audio, and combinations of specific speakers.

Other Siri Integrations I’d Like to See

My list of commands I knew Siri wouldn’t support but that I’d still like Apple to bring to iOS is long, but I thought I’d highlight those that really stood out in my Type to Siri tests.

- Chained commands. Siri, like Alexa, doesn’t understand multiple commands chained together in the same question.[7] For instance, you can’t ask Siri to “turn on the kitchen light and make it orange” – you have to ask the first question, wait for the light to be on, then ask again to change its color. I want to ask Siri to do several things at once, just as I’d ask a human assistant.

- Understand iPad multitasking. Wouldn’t it be great if Siri for iPad understood “open Mail and Safari in Split View”? Or perhaps “add Tweetbot to the left side of this space”? If the iPad ever gains the ability to create and retain favorite app pairs (effectively, named spaces), it would be terrific if Siri could “open my Team workspace”, with Slack and Safari in Split View, and Trello in Slide Over, ready and waiting for me.

- Display song lyrics. One of Apple Music’s biggest advantages over Spotify is native lyrics support in the Now Playing screen. And yet Siri can’t display lyrics for the currently playing song as an inline text snippet. This would be a relatively easy one to fix.

- Integrate third-party apps into the weekly schedule. As I noted above, Siri’s integration with the system calendar is a great way to get an overview of your schedule for the current week. Wouldn’t it be nice if the same command could coalesce todos and alarms set in SiriKit-compatible apps within the same view? A weekly overview that, in addition to calendar events, showed me upcoming tasks from Things and reminders from Due would be dramatically more useful, and a perfect fit for those who use an iPad as their main computer. There would be another benefit to this: the same system – a new SiriKit API that surfaces time-based items from apps – could be used on watchOS to extend the Siri watch face as well, opening it up to third-party access.
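
The coalesced weekly view from the last item can be pictured as a merge of time-based items coming from several apps. What follows is a hypothetical Python sketch of that idea, not a real SiriKit interface: the `TimedItem` type, the `weekly_overview` function, and the sample entries are all made up for illustration, and the sketch assumes each app hands over its items already sorted by time.

```python
from dataclasses import dataclass
from datetime import datetime
from heapq import merge


@dataclass(frozen=True)
class TimedItem:
    """A hypothetical time-based item an app could surface to Siri."""
    when: datetime
    title: str
    source: str  # e.g. "Calendar", "Things", "Due"


def weekly_overview(*sources):
    """Merge per-app lists (each already sorted by time) into one timeline."""
    return list(merge(*sources, key=lambda item: item.when))


# Made-up sample data, purely for illustration.
calendar = [TimedItem(datetime(2018, 2, 5, 10, 0), "Team call", "Calendar")]
things = [
    TimedItem(datetime(2018, 2, 5, 9, 0), "Draft column", "Things"),
    TimedItem(datetime(2018, 2, 6, 18, 0), "Send invoice", "Things"),
]
due = [TimedItem(datetime(2018, 2, 5, 12, 30), "Walk the dogs", "Due")]

for item in weekly_overview(calendar, things, due):
    print(f"{item.when:%a %H:%M}  [{item.source}] {item.title}")
```

Because every source exposes the same minimal shape (a time, a title, and an origin), the same merged timeline could in principle feed both a Siri snippet on the iPad and a watch face.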

I originally enabled Type to Siri on my iPad Pro as an experiment; to my surprise, I’m still using it and continuing to optimize the experience with various shortcuts and app integrations.

I realize now that, thanks to iOS 11 and the iPad Pro, I effectively have two distinct types of Siri interactions every day: one that is spoken and casual, often about lifestyle requests, on my iPhone and Apple Watch; the other, on my iPad, silent, longer, and mostly about getting work done. The things Siri is good at and that are well suited for being delegated to an assistant (checking schedules, converting units and currencies, saving content into SiriKit-enabled apps) are sometimes more convenient via text than they are over voice commands.

With the HomePod arriving in a couple of weeks and possibly establishing itself as the central voice-based Siri experience in our apartment, I don’t think I will disable Type to Siri on my iPad anytime soon. Hopefully, we’re going to see some improvements to Siri’s iPad interface[8] and system integrations with iOS 12 this year.

1. On iOS, you can press ⌘ + H to go back to the Home screen from any app you’re in. Alas, you can’t hold this hotkey to simulate a long press of the Home button, so Siri won’t come up if you’re using the iPad with an Apple Smart Keyboard or Magic Keyboard.

2. I can’t fully recommend the Brydge keyboard because, as widely documented, getting a working unit has been a bit of a lottery in the past. I had to return two defective units before I lucked out on my third try and got a keyboard that wasn’t dropping keystrokes when typing on the iPad Pro. It’s unclear if Brydge has been able to solve these issues over time with firmware updates, but I’ve also heard from several people lately who took the risk of buying a Brydge keyboard and had no problems whatsoever. Thus, my suggestion would be: if you plan on purchasing a Brydge keyboard, make sure you buy it from a reseller that has a clear return policy, such as Amazon. Overall, I agree with Jason: after I was able to get a working unit, I think the Brydge keyboard is the best one I’ve ever used because it turns the iPad Pro into a form factor I’d like Apple to seriously explore.

3. I hope that iOS 11.3 will add support for playing music in specific rooms and groups of speakers via Siri too.

4. Which still can’t be invoked with a keyboard shortcut.

5. My two puppies sometimes bark at Alexa. I would love to know what they think it is.

6. Which is even trickier for me given my Italian accent – and yes, I use all my devices in US English.

7. Google Assistant on the Google Home does.

8. I’d love for Siri on iPad to go back to the original floating UI of iOS 6. I think a full-screen view is a waste of space, especially when you’re working on an iPad with a keyboard. I’d like to see a contextual Siri that swoops in like a Slide Over app from the side. I would also love to have a new Apple Smart Keyboard that is backlit and has a dedicated Siri key, separate from the Home button one. An iPad user can dream.