Microsoft today announced at its Build conference the preview launch of Project Brainwave, its platform for running deep learning models in its Azure cloud and on the edge in real time.

While some of Microsoft’s competitors, including Google, are betting on custom chips, Microsoft continues to bet on FPGAs to accelerate its models, and Brainwave is no exception. Microsoft argues that FPGAs give it more flexibility than designing custom chips and that the performance it achieves on standard Intel Stratix FPGAs is at least comparable to that of custom chips.

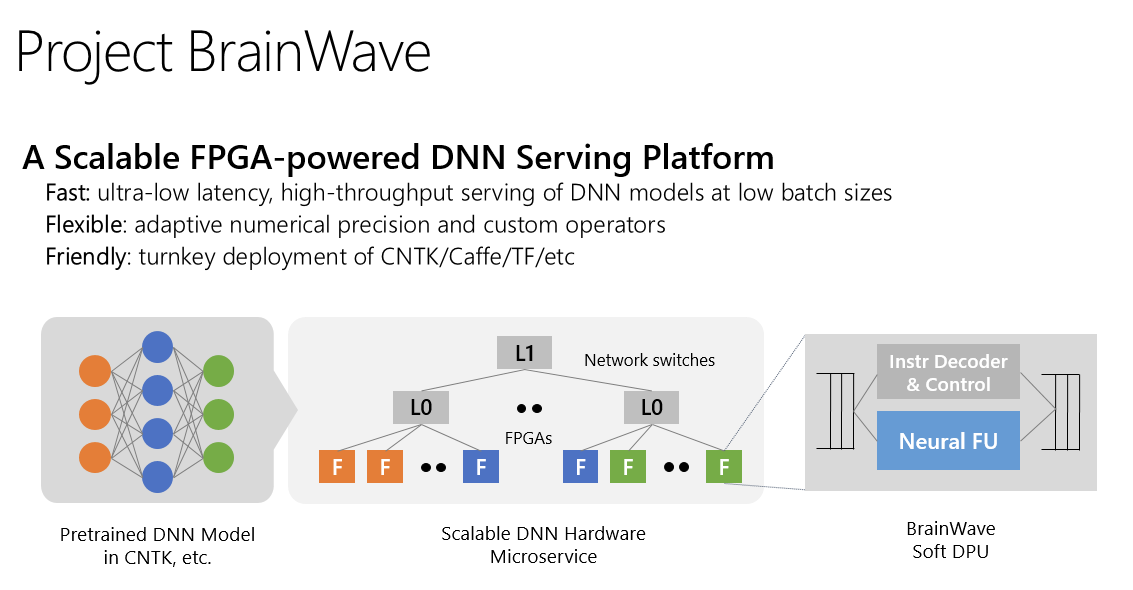

Last August, the company first detailed some aspects of Brainwave, which consists of three distinct layers: a high-performance distributed architecture; a hardware deep neural network engine that has been synthesized onto the FPGAs; and a compiler and runtime for deploying the pre-trained models.

Microsoft is attaching the FPGAs directly to its overall data center network, which allows them to become something akin to hardware microservices. The advantage here is high throughput and a large latency reduction because this architecture allows Microsoft to bypass the CPU of a traditional server and talk directly to the FPGAs. Indeed, Microsoft argues that Brainwave's latency is five times lower than that of Google’s TPUs.

When Microsoft first announced Brainwave, the software stack supported both the Microsoft Cognitive Toolkit and Google’s TensorFlow frameworks.

Brainwave is now in preview on Azure, and Microsoft also promises to bring support for it to Azure Stack and the Azure Data Box appliance.