Mobileye, the Israeli self-driving technology company Intel acquired last year, announced on Thursday that it would begin testing up to 100 cars on the roads of Jerusalem. But in a demonstration with Israeli television journalists, the company's demonstration car blew through a red light.

Mobileye is a global leader in selling driver-assistance technology to automakers. With this week's announcement, Mobileye hoped to signal that it wasn't going to be left behind as the world shifts to fully self-driving vehicles. But the red-light blunder suggests that the company's technology may be significantly behind industry leaders like Waymo.

While most companies working on full self-driving technology have made heavy use of lidar sensors, Mobileye is testing cars that rely exclusively on cameras for navigation. Mobileye isn't necessarily planning to ship self-driving technology that works that way. Instead, testing a camera-only system is part of the company's unorthodox approach for verifying the safety of its technology stack. That strategy was first outlined in an October white paper, and Mobileye CTO Amnon Shashua elaborated on it in a Thursday blog post.

"We target a vehicle that gets from point A to point B faster, smoother, and less-expensively than a human-driven vehicle; can operate in any geography; and achieves a verifiable, transparent 1,000-times safety improvement over a human-driven vehicle without the need for billions of miles of validation testing on public roads," Shashua wrote on Thursday.

It's a bold claim. We're skeptical it's actually true.

Mobileye hopes to prove safety with formal models

The industry leader, Waymo, has focused on racking up more than six million miles of on-road testing, supplemented by billions of miles of simulation based on data collected in those real-world tests. But Mobileye argues that this approach is simultaneously wasteful and unlikely to deliver sufficient evidence of safety.
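Mobileye's skepticism about road testing has a statistical basis. Here's a rough sketch of our own (not Mobileye's) using the standard zero-failure bound: if crashes arrive as a Poisson process, you need roughly three divided by the crash rate in failure-free miles before you can claim, with 95 percent confidence, that the true rate is below it. The crash rates in the code are round, hypothetical figures chosen for illustration, not official statistics, and the function name is ours.

```python
import math

def miles_to_demonstrate(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Failure-free miles needed to claim, at `confidence`, that the true
    failure rate is below `rate_per_mile`.

    Assumes failures follow a Poisson process, so
    P(zero failures in m miles) = exp(-rate * m). Requiring that probability
    to be at most 1 - confidence gives m >= -ln(1 - confidence) / rate.
    """
    return -math.log(1.0 - confidence) / rate_per_mile

# HYPOTHETICAL round numbers for illustration only (not official statistics).
human_rate = 1e-8                 # one serious crash per 100 million miles
target_rate = human_rate / 1000   # the "1,000 times safer" goal

print(f"{miles_to_demonstrate(human_rate):.2e} miles")   # 3.00e+08 miles
print(f"{miles_to_demonstrate(target_rate):.2e} miles")  # 3.00e+11 miles
```

Under these assumptions, demonstrating a 1,000-fold improvement takes about a thousand times as many failure-free miles as merely matching the human baseline, which is the core of Mobileye's argument that road testing alone can't settle the question.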

Instead, Mobileye advocates a more formalistic approach to demonstrating that its cars are safe. Mobileye envisions dividing its self-driving system into two parts—perception and policy—and then testing and validating them separately.

The perception system takes raw sensor inputs and translates them into labeled objects with exact three-dimensional coordinates. Then the policy system takes this labeled three-dimensional world and plans how to navigate through it.
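Here's a minimal Python sketch of that two-stage split, assuming a made-up schema: `LabeledObject`, `perceive`, and `plan` are hypothetical names, the coordinate convention is our assumption, and the toy policy simply stops for anything within 10 meters ahead. What matters is the narrow interface between the stages, which is what would let each half be validated on its own.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class LabeledObject:
    """One perception output (hypothetical schema): a label plus a 3D position
    in meters, with x pointing forward and y pointing left of the car."""
    label: str
    x: float
    y: float
    z: float

def perceive(raw_frame: dict) -> List[LabeledObject]:
    """Perception stage: raw sensor data -> labeled 3D objects.
    Placeholder: assumes the frame already carries detections; a real
    system would run its neural networks here."""
    return [LabeledObject(d["label"], *d["xyz"]) for d in raw_frame["detections"]]

def plan(world: List[LabeledObject], cruise_speed: float) -> float:
    """Policy stage: labeled 3D world -> target speed in m/s.
    Toy rule: stop if any object is within 10 m ahead in our lane."""
    for obj in world:
        if 0.0 < obj.x < 10.0 and abs(obj.y) < 2.0:
            return 0.0
    return cruise_speed

frame = {"detections": [{"label": "pedestrian", "xyz": (8.0, 0.5, 0.0)}]}
print(plan(perceive(frame), cruise_speed=13.0))  # 0.0
```

Because `plan` only ever sees a list of labeled objects, it can be exercised on hand-built worlds, while `perceive` can be scored offline against labeled recordings.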

"Mistakes of the sensing system are easier to validate, since sensing can be independent of the vehicle actions, and therefore we can validate the probability of a severe sensing error using offline data," Shashua wrote with two colleagues in last October's white paper.

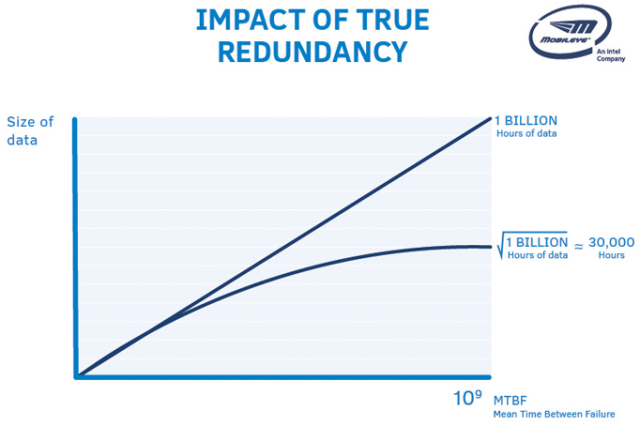

The company argues that validating the sensing system is made even easier by capitalizing on sensor redundancies. Mobileye's plan is to develop a system that can navigate safely using only cameras and then separately develop a system that can navigate safely using only lidar and radar. Mobileye argues that if the company can show that each system separately has a sensing error less often than once every 30,000 hours, then it can conclude that a system with both types of sensors should have an error no more often than once every 30,000 × 30,000 hours, or roughly 1 billion hours.
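The arithmetic behind that claim is simple enough to sketch in a few lines of Python. It takes Mobileye's independence assumption at face value; treating each subsystem's error rate as a per-hour probability is our simplification.

```python
HOURS_BETWEEN_ERRORS = 30_000  # per-subsystem target from Mobileye's argument

p = 1 / HOURS_BETWEEN_ERRORS   # chance one subsystem errs in a given hour
p_both_independent = p * p     # both err in the same hour, IF failures are independent

print(f"{1 / p_both_independent:,.0f} hours")  # 900,000,000 hours
```

Multiplying the two probabilities is only valid if the failures really are independent.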

But this argument relies on two big assumptions, and it's far from clear that either of them is true.

The first assumption is that the failure modes of the two sensing systems are independent—that is, that a situation that fools the camera-based system is no more likely than average to fool the lidar-based system, and vice versa. We didn't find Mobileye's argument for this proposition very compelling:

"Radar works as well in bad weather conditions but might fail due to non-relevant metal objects, as opposed to camera that is affected by bad weather but is not likely to be affected by metal objects," the white paper argues. "Seemingly, camera and lidar have common sources of mistakes—both are affected by foggy weather, heavy rain, and snow. However, the type of mistake for camera and lidar would be different—camera might miss objects due to bad weather while lidar might detect a ghost due to reflections from particles in the air. Since we have distinguished between the two types of mistakes, the approximate independency is still likely to hold."

It's obviously true that different types of sensors have different strengths and weaknesses, so using multiple types of sensors gives you beneficial redundancy. But it's a much bigger leap to say that these sensors' failure modes are completely independent and uncorrelated. And if they're not, then Mobileye's billion-hours-per-error math doesn't work.
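To see how sensitive the math is to that assumption, here's a sketch that adds a correlation term. For two yes-or-no failure events that each occur with probability p in a given hour and have correlation ρ (rho), the chance that both occur together is p² + ρ·p·(1 − p). The correlation values below are arbitrary illustrations, not measurements of any real sensor system.

```python
def p_both_fail(p: float, rho: float) -> float:
    """Probability that two subsystems, each failing with probability p in a
    given hour, fail in the same hour when their failure indicators have
    Pearson correlation rho. For two Bernoulli(p) variables:
    P(both) = p*p + rho * p * (1 - p)."""
    return p * p + rho * p * (1 - p)

p = 1 / 30_000  # per-subsystem hourly error probability from Mobileye's target

# Illustrative correlations only; rho = 0 reproduces the independence case.
for rho in (0.0, 0.001, 0.01, 0.1):
    print(f"rho={rho}: one joint error every {1 / p_both_fail(p, rho):,.0f} hours")
```

With zero correlation this reproduces the 900-million-hour figure. A correlation of just 0.01 cuts it to roughly 3 million hours, and 0.1 cuts it to roughly 300,000.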

In an October interview with EE Times, Carnegie Mellon safety expert Philip Koopman praised Mobileye for its transparency in laying out its assumptions. However, he was skeptical about the assumption that sensor failures are independent.

"It's hard to believe that lidar and radar failure independence will work out as well as the discussion assumes," Koopman said. "Someone will have to demonstrate they are true in practice, not just assume them. And almost certainly there are assumptions that are false that the authors didn't even realize they made."

Mobileye ignores real-world complexities

And it's that last point that should really worry us: that Mobileye's model likely makes assumptions that don't actually describe the real world.

For example, Mobileye is implicitly assuming that fusing the two sensor systems together won't introduce any new sources of error. But as Navigant Research analyst Sam Abuelsamid pointed out to us, that's not likely to be true.

"If you start combining multiple things, you start to multiply the potential failure modes," he said.

Melding together the lidar and camera data—a process known as sensor fusion—will require significant code in its own right. "More lines of code means more opportunities for bugs," Abuelsamid told us. "So the actual complexity of testing starts to explode back out again."
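Abuelsamid's point can be put in numbers with a simple series model: the combined system errs if the redundant sensors both fail or if the fusion code itself fails. The fusion failure rate below is a hypothetical value chosen purely for illustration, and we assume the two causes are independent of each other.

```python
def p_system_error(p_sensing: float, p_fusion: float) -> float:
    """Hourly error probability of a pipeline that fails if EITHER the
    redundant sensing fails (both channels wrong) OR the fusion code fails.
    Assumes the two causes are independent (a series system)."""
    return 1 - (1 - p_sensing) * (1 - p_fusion)

p_sensing = (1 / 30_000) ** 2   # Mobileye's idealized independent-redundancy figure
p_fusion = 1 / 10_000_000       # HYPOTHETICAL: one fusion bug every 10 million hours

print(f"{1 / p_system_error(p_sensing, p_fusion):,.0f} hours between errors")
```

Even a fusion layer that fails only once every 10 million hours, far better than the 30,000-hour target for each sensing channel, caps the whole system at just under 10 million hours between errors.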

And a similar critique applies to Mobileye's plan for validating the other half of its self-driving technology—the planning software that starts with a three-dimensional model of the world and decides what to do.

Mobileye is developing a formal mathematical model that precisely defines which vehicle is at fault in any given crash. The bulk of Mobileye's October white paper is devoted to laying out the exact rules of this framework, which Mobileye calls Responsibility-Sensitive Safety (RSS). It prescribes rules for things like following distance, right of way, and caution around occluded objects.

Mobileye says that, once it has this model, it can then mathematically demonstrate that its planning algorithm will never take an action that will cause it to be at fault in a crash.
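For a flavor of what these rules look like, here's a Python sketch of the safe following-distance rule as we read it in the October white paper: the gap must cover the worst case in which the rear car keeps accelerating through its response time ρ and then brakes only gently, while the car ahead brakes as hard as it can. The `shield` wrapper is our own hypothetical and simplified illustration of how a planner's choices might be checked against the rule, and all parameter values are made up.

```python
def rss_min_gap(v_rear: float, v_front: float, rho: float,
                a_accel_max: float, a_brake_min: float, a_brake_max: float) -> float:
    """Minimum safe following distance in meters (our reading of the RSS
    longitudinal rule). Worst case: the rear car accelerates at a_accel_max
    for its response time rho, then brakes at only a_brake_min, while the
    front car brakes at a_brake_max. Speeds in m/s, accelerations in m/s^2."""
    v_after = v_rear + rho * a_accel_max
    d = (v_rear * rho
         + 0.5 * a_accel_max * rho ** 2
         + v_after ** 2 / (2 * a_brake_min)
         - v_front ** 2 / (2 * a_brake_max))
    return max(d, 0.0)

def shield(proposed_accel: float, gap: float, v_rear: float, v_front: float,
           params: dict) -> float:
    """HYPOTHETICAL safety check: keep the planner's proposed acceleration only
    if the current gap is RSS-safe; otherwise brake at a_brake_min."""
    if gap >= rss_min_gap(v_rear, v_front, **params):
        return proposed_accel
    return -params["a_brake_min"]

# HYPOTHETICAL parameters, chosen only for illustration.
params = {"rho": 0.5, "a_accel_max": 2.0, "a_brake_min": 4.0, "a_brake_max": 8.0}
print(rss_min_gap(20.0, 20.0, **params))  # 40.375
```

Note how much rides on the parameters: the guarantee holds only if the car ahead never brakes harder than `a_brake_max` and the response time really is `rho`. Those are exactly the kind of real-world assumptions the model can't verify for itself.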

But even assuming that the mathematics of this are impeccable, it doesn't prove that a car using that model will never cause an accident. The model may make assumptions about the world that turn out not to hold. Or engineers might make mistakes as they translate the theoretical model into working code.

This isn't to deny that the RSS framework could be a useful way to reason about the safety of autonomous systems. If that were all Mobileye was doing, it would be a valuable contribution.

But Mobileye positions this model as an alternative to extensive real-world testing, claiming that it can prove a car is 1,000 times safer than a human driver without billions of miles of validation on public roads. Yet there are lots of ways a self-driving car can fail that aren't covered by Mobileye's theoretical model.

Mobileye’s business model could be its Achilles’ heel

We suspect one reason Mobileye is looking for ways to minimize on-the-road testing is that extensive testing doesn't fit very well with Mobileye's business model. Waymo provides a helpful contrast here.

Waymo has logged more than six million miles of testing on public roads, and last fall it was confident enough in its technology to begin taking the safety drivers out of its cars. The company hopes to launch an automated taxi service in the Phoenix area later this year.

Waymo's solution to the safety-verification problem has been extreme gradualism. The company began testing in a few carefully selected areas with excellent weather and well-marked roads. Over time, Waymo has gradually upgraded its vehicles' software and sensors, collected more and more map data, and gradually expanded to new, more challenging operating environments.

This is an ongoing process that will continue long after Waymo launches its initial service in Phoenix. If Waymo isn't confident its cars can handle a particular situation (like snow or dense urban traffic), it simply won't offer service in areas that feature those conditions until it has time to do more development and testing.

This means Waymo doesn't have any particular need to mathematically prove that its technology will work flawlessly in every situation. It might take many years before Waymo cars can drive safely in a Manhattan traffic jam or a Minneapolis winter, but Waymo can afford to be patient. For the next few years, Waymo can easily stay busy building taxi services in predominantly suburban Sun Belt cities like Phoenix, Atlanta, Austin, and Las Vegas.

But this approach isn't really an option for Mobileye. The Israeli company is in the business of supplying chips, sensors, and software to conventional carmakers. According to Mobileye, there are already 15 million cars on the road with Mobileye technology, from 27 different carmakers.

Mobileye's technology powers Nissan's advanced driver-assistance product called ProPilot Assist, as well as the driver-assistance features in Audi's forthcoming A8. Mobileye supplies the cameras for GM's Super Cruise technology. And Mobileye has a wide-ranging alliance with BMW and Fiat Chrysler to develop self-driving technology.

On Thursday, Reuters reported Mobileye has signed a deal to supply technology for an additional eight million cars to a single unnamed carmaker starting in 2021.

So launching a taxi service, as Waymo is planning to do, would be a radical shift for Mobileye that would likely alienate its current customers. Mobileye needs a development and validation strategy that fits the conventional car business model of selling cars outright to customers.

Mobileye more or less acknowledges this issue in its white paper—but argues that it's a problem faced by the industry as a whole:

"If autonomous vehicles are to be mass manufactured, the cost of validation and the ability to drive 'everywhere' rather than in a select few cities is also a necessary requirement to sustain a business," the company writes.

What Mobileye should have said is that the ability to drive everywhere is a necessary requirement to sustain Mobileye's business, which is based around selling technology to carmakers who will ultimately sell cars to customers—it's not a requirement for a company like Waymo that's creating a taxi service.

And if Mobileye needs its technology to "drive everywhere" right out of the box, then it makes sense that the company would see incremental development and extensive real-world testing as impractical. Waymo, for example, has been testing its cars for the better part of a decade and only recently began serious work on mastering snow. If Mobileye follows the same trajectory, it might not be able to ship its first unit of fully self-driving technology until the second half of the 2020s.

So the company's leadership has convinced itself that it can rely heavily on formal mathematical proofs as a substitute for millions of miles of real-world testing, because its business model doesn't leave it with many good alternatives. But wishing this were true doesn't make it so.
