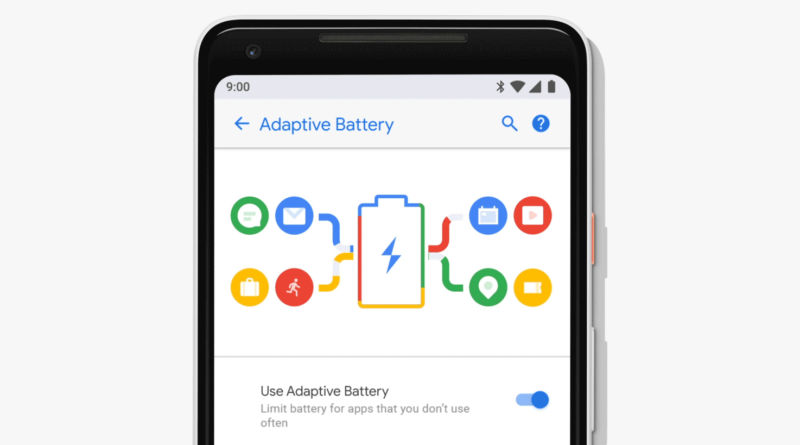

With the last version of the Android P Developer Preview released, we're quickly heading toward the final build of another major Android version. And for Android P (aka version 9.0), battery life is a major focus. The Adaptive Battery feature will dole out background access to only the apps you use, a new auto brightness scheme has been devised, and the Android team has made changes to how background work runs on the CPU. Altogether, battery life should be batter (err, better) than ever.

To get a bit more detail about how all this works, we sat down with a pair of Android engineers: Benjamin Poiesz, group product manager for the Android Framework, and Tim Murray, a senior staff software engineer for Android. And over the course of our second fireside Android chat, we learned a bit more about Android P overall and some specific things about how Google goes about diagnosing and tracking battery life across the range of the OS' install base.

What follows is a transcript with some of the interview lightly edited for clarity. We also included some topical background comments in italics.

The right CPU core for the right job

First up on the docket is talk about CPU core affinity. Multi-core CPUs are all over the place nowadays, and while on a desktop you would get a CPU with many cores that are all exactly the same, on a mobile phone you usually get cores that come in varying "sizes" meant for different workloads. In a typical eight-core ARM design, you get a chip with a "big.LITTLE" architecture. That's four "big" cores that are fast and power hungry and four "little" cores that are slower but easier on energy usage. Having processes run on a big or little core can greatly affect how much power they use and how quickly they run. Assigning a process to a certain CPU or core is called setting the CPU affinity.
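To make "setting the CPU affinity" concrete, here is a minimal Python sketch for Linux. It relies on the standard library's `os.sched_getaffinity` and `os.sched_setaffinity`; the helper name `pin_current_process` is hypothetical, and this shows only the general operating-system mechanism, not how Android itself applies affinity.

```python
import os

def pin_current_process(cpus):
    """Restrict the calling process to the given CPU ids (Linux only).

    Returns the affinity set the kernel reports afterwards.
    """
    available = os.sched_getaffinity(0)   # CPUs this process may currently use
    wanted = set(cpus) & available        # drop ids we are not allowed to run on
    if not wanted:
        raise ValueError("none of the requested CPUs are available")
    os.sched_setaffinity(0, wanted)       # pid 0 means "the calling process"
    return os.sched_getaffinity(0)
```

Calling `pin_current_process({0})` on a machine where core 0 is available would confine the process to that single core until the affinity is changed again.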

Android P is changing how CPU core affinity works for background processes, which should save a decent amount of battery power. This was given a throwaway line during the Google I/O keynote, but I think this is the first time it has been talked about in detail.

Tim Murray: We've actually been doing core affinity work for big.LITTLE platforms since 2015. So, this is actually what got me working on performance, initially. Back in March 2015 or so, I actually read your article on the HTC One whatever-it-was, the first phone with Snapdragon 810.

Murray is talking about the HTC One M9 review, featuring the Snapdragon 810's infamous heat problems.

Murray: I read it, and I was kind of looking around at some performance stuff in general on Android, too, but I knew we were using Snapdragon 810 for the Nexus 6P that year. I read the article, and you said like, "Hey, it runs really hot." And I got to think, "I wonder if we could do better." So, I started thinking about that and working on that with people on our kernel team and on the framework team. What we came up with was a way to control the affinity of services and specific processes from ActivityManager.

In Android, an "Activity" is a single screen of an app, like, say, your email inbox. The system-level service "ActivityManager" does what it says on the tin: it manages activities (and background services), opening and closing them as requested or as needed for memory usage.

Murray: From activity manager service, we track what's important to the user. Think of activity manager service as kind of a "macro-scheduler," I would say. While the kernel scheduler makes decisions on the millisecond or microsecond level, activity manager service tracks these kinds of macro-interactions, like, what services are running? What app is currently in the foreground? What can the user actually perceive? For example, if you're running navigation and listening to music and your screen is off, we know that even though the screen is off and you're not interacting with your phone, you care about navigation performance. You care about music performance. You probably don't care about much else at that point.

So, we started enforcing affinity controls using the knowledge in activity manager service. We started off really simple, so background services and cached apps could only run on little cores. Foreground services could use some big cores, but not all of them, and the app you're currently interacting with can use any core. When we tried this, it kind of blew us away. It was a double-digit percentage increase in performance per watt on every test we tried. So, basically, informing the kernel scheduler, kind of constraining the kernel scheduler about what's important to the user, enables it to make much better decisions that result in much better power and performance trade-offs.
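The three-tier policy Murray describes can be sketched as a small lookup. This is an illustrative model only, not Android's code: the 4+4 core numbering, the `Importance` names, and the choice of exactly two big cores for foreground services are all assumptions made for the example.

```python
from enum import Enum

# Hypothetical 4+4 layout: cores 0-3 are "little", cores 4-7 are "big".
LITTLE = frozenset(range(0, 4))
BIG = frozenset(range(4, 8))

class Importance(Enum):
    TOP_APP = "top app"                 # the app the user is interacting with
    FOREGROUND_SERVICE = "foreground"   # e.g. navigation or music playback
    BACKGROUND = "background"           # background services and cached apps

def allowed_cores(importance):
    """Map a process's user-visible importance to the cores it may run on."""
    if importance is Importance.TOP_APP:
        return LITTLE | BIG                       # any core
    if importance is Importance.FOREGROUND_SERVICE:
        # "Some big cores, but not all": two of four is an arbitrary choice here.
        return LITTLE | frozenset(sorted(BIG)[:2])
    return LITTLE                                 # little cores only
```

The point of the model is that the decision depends on what the user can perceive, which is information the kernel scheduler does not have on its own.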

So, we've been doing that for a long time now, and even on Pixel 1, which is still a big.LITTLE CPU, but it's much less big.LITTLE than other CPUs. It's still of benefit there, so we've used it on everything.

What we did in P was we looked at what was running when the screen was off on the big cores because big cores draw significantly more power than little cores. What we found was there were a lot of things running that were related to the system. So, they were kind of system services that were running. We looked at how many of these were performance critical and it turns out, not many—when the screen is off at least. If they are performance critical when the screen is off, it ends up being bound as a normal foreground service or something else. There's some other chain that informs activity manager that this process is important.

In P, what we did was, when you turn the screen off, these kinds of system services get moved to a more restricted CPU set. So, rather than being able to use all of the little cores and some big cores, we just restrict them to only using little cores, and it saves some battery. It makes your battery more predictable because if there is ever a case where a system service is going to use some amount of power when the screen is off, now that power draw is reduced dramatically, just because the big cores are so much bigger than the little cores and draw so much more power as a result.
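As a rough sketch of that screen-off rule, the snippet below switches system services between two core sets. The core numbering, the number of big cores allowed while the screen is on, and the function name are assumptions for illustration; the interview does not give these specifics.

```python
# Hypothetical 4+4 big.LITTLE layout; real core numbering varies by chip.
LITTLE_CORES = frozenset({0, 1, 2, 3})
BIG_CORES = frozenset({4, 5, 6, 7})

# Assumption: with the screen on, system services may use every little core
# plus two of the big ones ("all of the little cores and some big cores").
SCREEN_ON_CORES = LITTLE_CORES | frozenset({4, 5})

def system_service_cores(screen_on):
    """Return the cores a system service may use for the given screen state."""
    return SCREEN_ON_CORES if screen_on else LITTLE_CORES
```

With the screen off, `system_service_cores(False)` contains no big cores, so any unexpected background work is confined to the lower-power cores.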

Ars: You said you started with core affinity on Google devices. I always thought of CPU scheduling as something that was up to the individual device manufacturers. Do you think something like this will survive the customization that happens on third-party stuff?

Murray: Yes, we actually do see this used on third-party devices. It's just part of normal Android, so you can build an Android image with support for CPU sets, and then the right stuff just happens. The OEM doesn't have to do anything except set up the CPU sets for their particular processor. It's not some big, invasive thing we had to do. It's a pretty straightforward tweak inside how we manage scheduling from user space.
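To give a feel for what "setting up the CPU sets" for a particular processor could involve, here is a hedged sketch that parses Linux-style CPU list strings (such as "0-3,6") into core ids. The `parse_cpu_list` helper is hypothetical, and the group names and ranges in the table are made up for a 4+4 chip rather than taken from any real device configuration.

```python
def parse_cpu_list(spec):
    """Parse a Linux-style CPU list such as "0-3,6" into a set of core ids."""
    cores = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue                      # tolerate empty entries
        if "-" in part:
            first, last = part.split("-", 1)
            cores.update(range(int(first), int(last) + 1))  # inclusive range
        else:
            cores.add(int(part))
    return cores

# Illustrative per-device table; names and ranges are assumptions.
EXAMPLE_CPU_SETS = {
    "top-app": "0-7",      # everything
    "foreground": "0-5",   # little cores plus some big cores
    "background": "0-3",   # little cores only
}
```

A vendor with a different core layout would only need to change the strings in a table like this, which matches Murray's point that the per-device work is small.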

Ars: So before, did the system just never bother with moving the background tasks around to other cores? It was just a free-for-all?

Murray: I wouldn't say free-for-all (laughing). Prior to 2015, we hadn't looked at a big.LITTLE SoC, in depth, as part of Nexus. OEMs had their own approaches to deal with this, but most of the time that was focused on the kernel scheduler and making decisions purely in the kernel scheduler to try to get the same effects. All we really did was make that explicit and make it easier for whatever kernel scheduler was used, whether it was one of the HMP variations or EAS or whatever. We make it easier for the schedulers to make the right decision, kind of reduce the complexity of the kernel scheduler because you have all this information from the higher levels of the system that you can use instead.

Benjamin Poiesz: To expand on this, if you like, when you have a scheduler seeing like, "Hey, there's a bunch of work." It sees lots of work needs to happen. It doesn't understand is that important work or is that not important work, and the more things Activity Manager can teach the lower subsystems about, "Hey, the user's actively engaged with something," or, "This needs to happen, but please do it as efficiently as you can," then smarter decisions are happening under the hood. That was one of the key things.

The takeaway was: when the screen's off, there's probably not much that needs to happen right away. You could infer, like, "Well, maybe there just isn't that much work being generated, so then CPUs will stay low." But sometimes for different reasons, the subsystems, it'll set up alarms, set up jobs, try to do processing. And there wasn't really a way to articulate, "I have a bunch of stuff to do, but do it whenever." This gives us a better way to do that that's much more implicit, as opposed to asking all of our engineers, "Please flag what's important or not." That's gonna be hard. This makes it much more implicit.
