What the iPhone XS and iPhone XS Max are really doing to your selfies

You may have heard talk about the so-called, and poorly named, "Beautygate," in which users claim that Apple's new iPhone XS and XS Max apply overly aggressive skin-smoothing algorithms to photos taken with the selfie camera, producing fake-looking or doctored images. Let's look at what's actually happening behind the scenes.

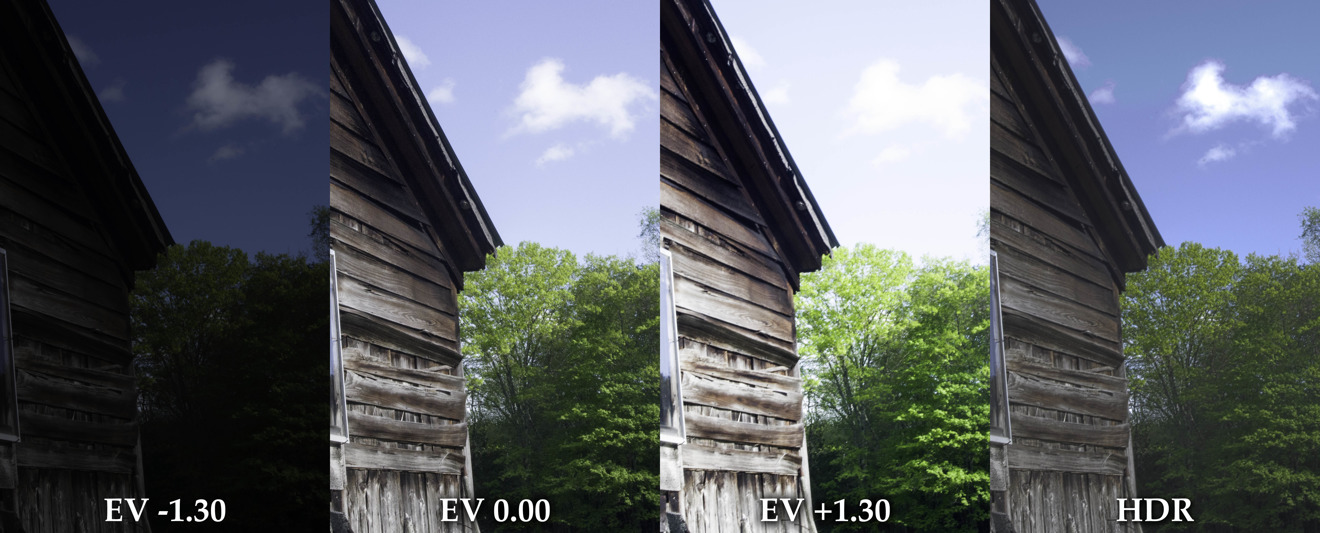

With the iPhone XS and XS Max, Apple introduced a brand-new feature called Smart HDR. The new camera mode is designed to increase dynamic range: the difference between the darkest and lightest tones a camera can capture.
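Photographers usually express dynamic range in stops, where each stop is a doubling of light. The arithmetic is just a base-2 logarithm of the ratio between the brightest and darkest tones. Here is a minimal sketch of it. The luminance values are made up for illustration and are not measurements from any iPhone.

```python
import math

def dynamic_range_stops(brightest: float, darkest: float) -> float:
    """Dynamic range in photographic stops (each stop is a doubling of light)."""
    if darkest <= 0 or brightest <= darkest:
        raise ValueError("need 0 < darkest < brightest")
    return math.log2(brightest / darkest)

# Hypothetical linear luminance values in arbitrary units, not measured data.
print(dynamic_range_stops(4096.0, 1.0))  # 12.0
```

A camera that captures one extra stop of dynamic range keeps detail across a range of tones twice as wide.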

In our iPhone X vs. XS Max photo comparison, you can see how much Smart HDR increases the dynamic range on the XS Max.

In similar photos taken on the iPhone X and iPhone XS Max, the X's image shows the subject's face and body properly exposed, but the highlights in the background are blown out. That means they're so bright that their details, including color, are lost.

On the XS Max, all of the details and texture in the background are visible, and the colors are accurately reproduced. The shadows, too, appear brighter and hold more detail.

Looking at the subject's pants in each image, however, there appears to be a smoothing effect applied on the XS Max.

In a selfie taken in low light, the effect is even more pronounced. There are two main reasons this happens, and both are related to Apple's new Smart HDR feature.

How Smart HDR works

With a regular HDR photo on last year's iPhone X, the camera snaps three photos: one exposed for the face, one for the highlights, and one for the shadows. It then blends the best parts of those images into a single photo.

Professional photographers sometimes reproduce the technique and manually blend images together in a program like Adobe Lightroom, resulting in a photo with incredible detail and dynamic range.
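Apple has not published its blending algorithm. To show the general idea, here is a minimal sketch of a well-known, much simpler technique, exposure fusion. Each pixel is taken from aligned bracketed frames, and the values are averaged with weights that favor the frame where that pixel is closest to mid-grey. The function names, the `sigma` value, and the three-pixel "scene" are all hypothetical.

```python
import math

def well_exposedness(v: float, sigma: float = 0.2) -> float:
    """Weight pixels near mid-grey (0.5) highly; clipped shadows/highlights get low weight."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_exposures(frames: list[list[float]]) -> list[float]:
    """Blend aligned frames (values 0..1) pixel by pixel, weighted by exposure quality."""
    fused = []
    for pixels in zip(*frames):  # the same pixel position across all frames
        weights = [well_exposedness(v) for v in pixels]
        total = sum(weights)
        fused.append(sum(w * v for w, v in zip(weights, pixels)) / total)
    return fused

# Hypothetical 3-pixel scene: [shadow, face, sky], shot at three exposures.
under  = [0.02, 0.25, 0.60]  # exposed for the highlights
normal = [0.10, 0.50, 0.98]  # exposed for the face (sky nearly blown out)
over   = [0.35, 0.90, 1.00]  # exposed for the shadows
print([round(v, 2) for v in fuse_exposures([under, normal, over])])
```

In the merged result, the shadow pixel comes out brighter and the sky pixel comes out darker than in the "normal" frame. This is the dynamic-range gain HDR is after.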

With Smart HDR, it all starts with something Apple calls "zero shutter lag." Whenever the camera app is open, the A12 Bionic processor is constantly capturing into a four-frame buffer. It's effectively taking photos over and over again, very quickly, and holding the last four frames in system memory without saving them to your camera roll.

When you do hit the shutter button, you get a photo instantly, because the camera grabs one of the frames already in the buffer. The A12 Bionic also captures additional frames at different exposures at the same time, just like regular HDR.

It then analyzes those frames and merges the best of them into one photo with much greater dynamic range.
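A zero-shutter-lag buffer is essentially a fixed-size ring buffer. Below is a minimal sketch using Python's `collections.deque` with `maxlen`, which discards the oldest frame automatically. Only the four-frame size comes from the description above. The class and method names are hypothetical, and returning the newest frame on a shutter press is an assumption, since Apple's actual selection logic isn't public.

```python
from collections import deque

class ZeroShutterLagBuffer:
    """Keeps only the most recent frames in memory; older frames fall off automatically."""

    def __init__(self, size: int = 4):
        self.frames = deque(maxlen=size)

    def on_new_frame(self, frame) -> None:
        # Called continuously while the camera is open; nothing is written to storage.
        self.frames.append(frame)

    def on_shutter_pressed(self):
        # Hand back an already-captured frame instead of starting a new exposure.
        if not self.frames:
            raise RuntimeError("no frames buffered yet")
        return self.frames[-1]

buf = ZeroShutterLagBuffer()
for i in range(10):  # simulate a stream of preview frames
    buf.on_new_frame(f"frame-{i}")
print(list(buf.frames))          # ['frame-6', 'frame-7', 'frame-8', 'frame-9']
print(buf.on_shutter_pressed())  # frame-9
```

Because the frame already exists when the button is pressed, the delay between pressing the shutter and getting a photo disappears.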

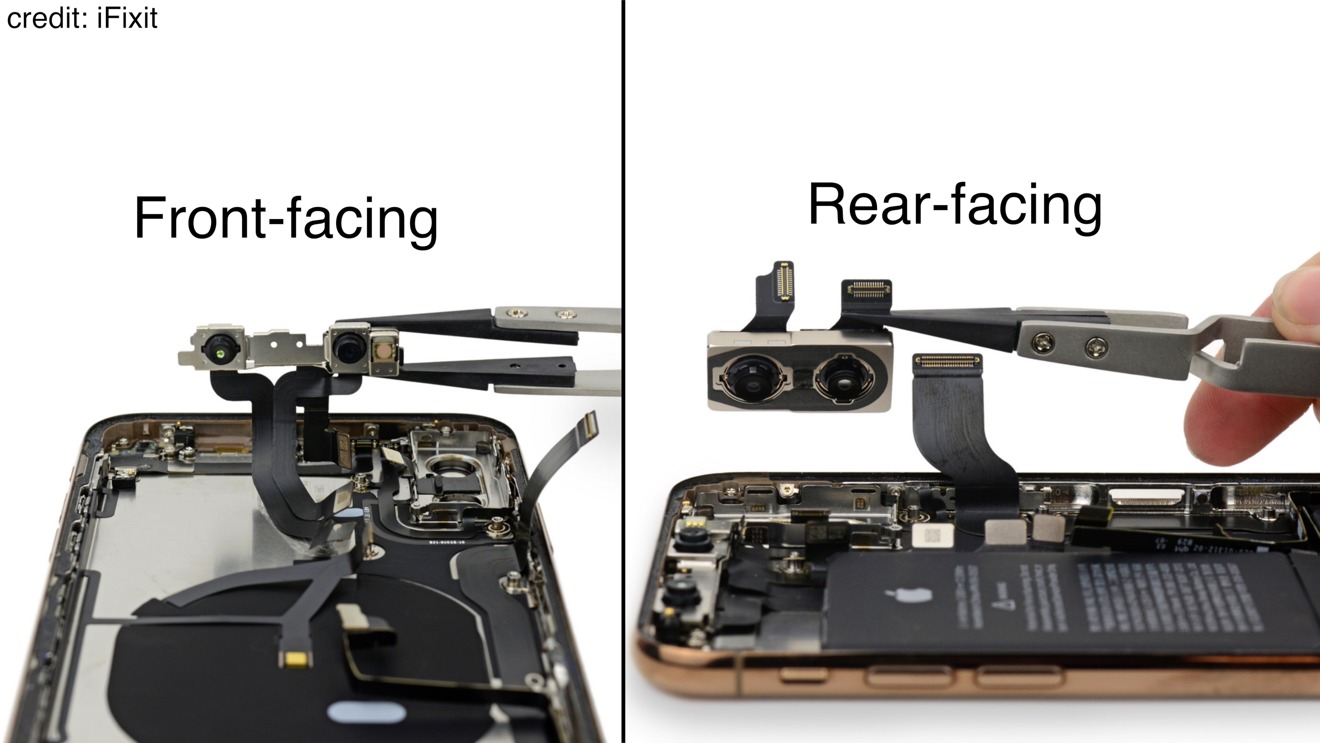

All of this has to happen very quickly, which is made possible by the new front-facing and rear-facing sensors. The new sensors most likely have a faster readout speed, making it quicker to capture those frames.

The front-facing camera's new ability to shoot 1080p at 60 frames per second is evidence of a new sensor with faster readout. This is also why Apple probably won't bring Smart HDR to the iPhone X in a future software update.

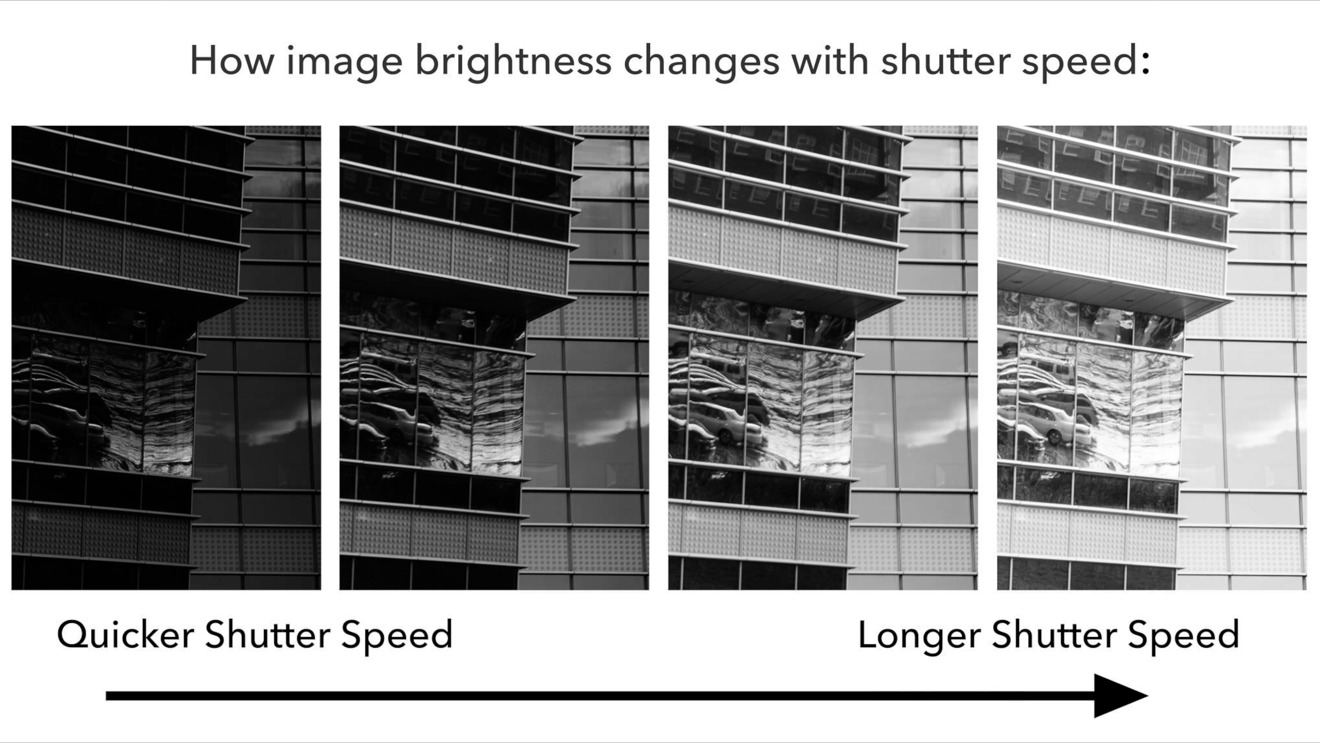

However, there's another limitation: shutter speed, which is the length of time the shutter stays open to let light reach the sensor. With a very fast shutter speed, less light has a chance to reach the sensor.

Because Smart HDR requires everything to happen very quickly, the shutter speed needs to be faster so it doesn't slow down the process. As a result, less light reaches the sensor, which can leave the image darker.

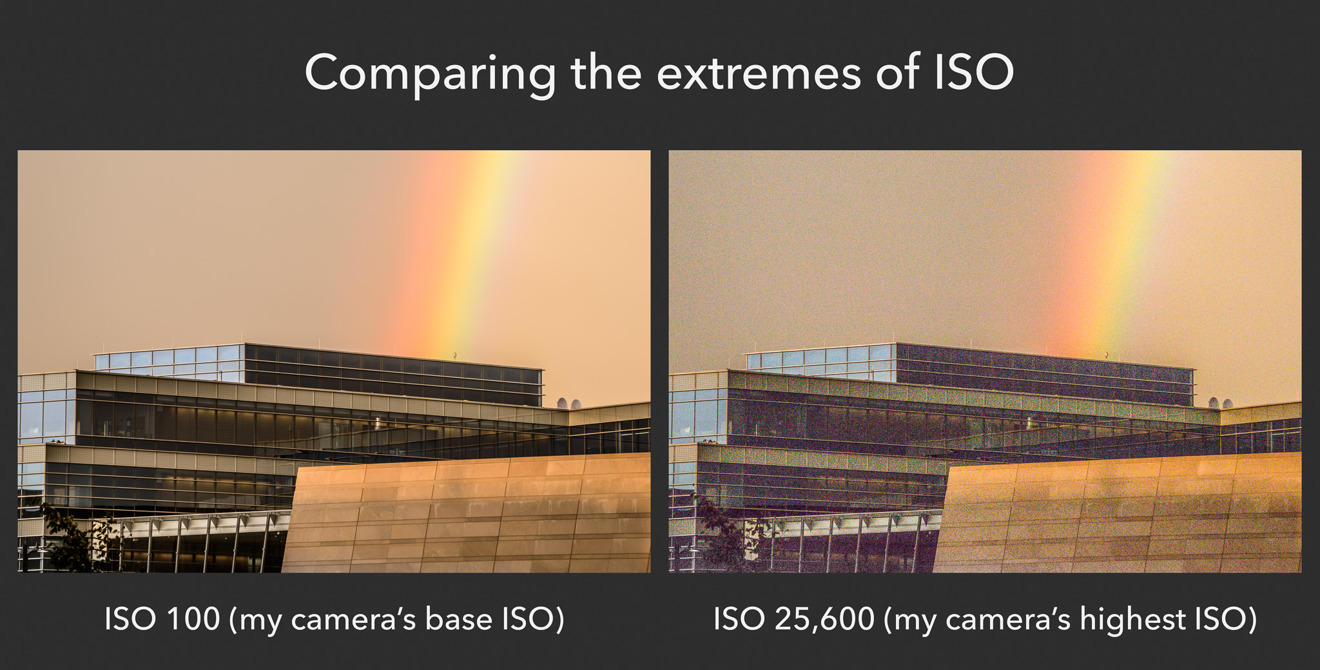

To compensate, the camera raises the ISO, which determines how sensitive the sensor is to light. The trade-off is that a higher ISO also increases noise.
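The shutter/ISO trade-off can be put into numbers with a simplified exposure model. With a fixed aperture, as on phone cameras, image brightness is roughly proportional to shutter time × ISO. If the shutter time is cut, ISO has to rise by the same factor to keep brightness constant. The function name and the example settings below are hypothetical, and real cameras also round to discrete ISO steps and hit sensor limits, which this sketch ignores.

```python
def iso_for_same_brightness(base_iso: float, base_shutter_s: float, new_shutter_s: float) -> float:
    """ISO needed to keep brightness constant when shutter time changes.

    Simplified model: brightness ~ shutter_time * ISO with a fixed aperture.
    Discrete ISO steps and sensor limits are ignored.
    """
    return base_iso * (base_shutter_s / new_shutter_s)

# Hypothetical settings: going from 1/30 s to 1/120 s lets in a quarter of the light
# (two stops), so ISO must rise about fourfold, from 200 to roughly 800.
print(iso_for_same_brightness(200, 1/30, 1/120))
```

ISO only amplifies the signal the sensor collected. It doesn't add light, so the underlying noise gets amplified along with the image.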

In a deep dive into the new iPhone XS camera system, Halide developer Sebastiaan de With found that the new iPhone appears to favor fast shutter speeds and higher ISO levels. He believes this behavior is linked to capturing the best possible frames for Smart HDR processing, even when the feature is not enabled.

In bright sunlight, users won't notice much of a difference, since the image may already be well exposed. In low light, however, the ISO has to be turned up quite high, which introduces a lot of noise.

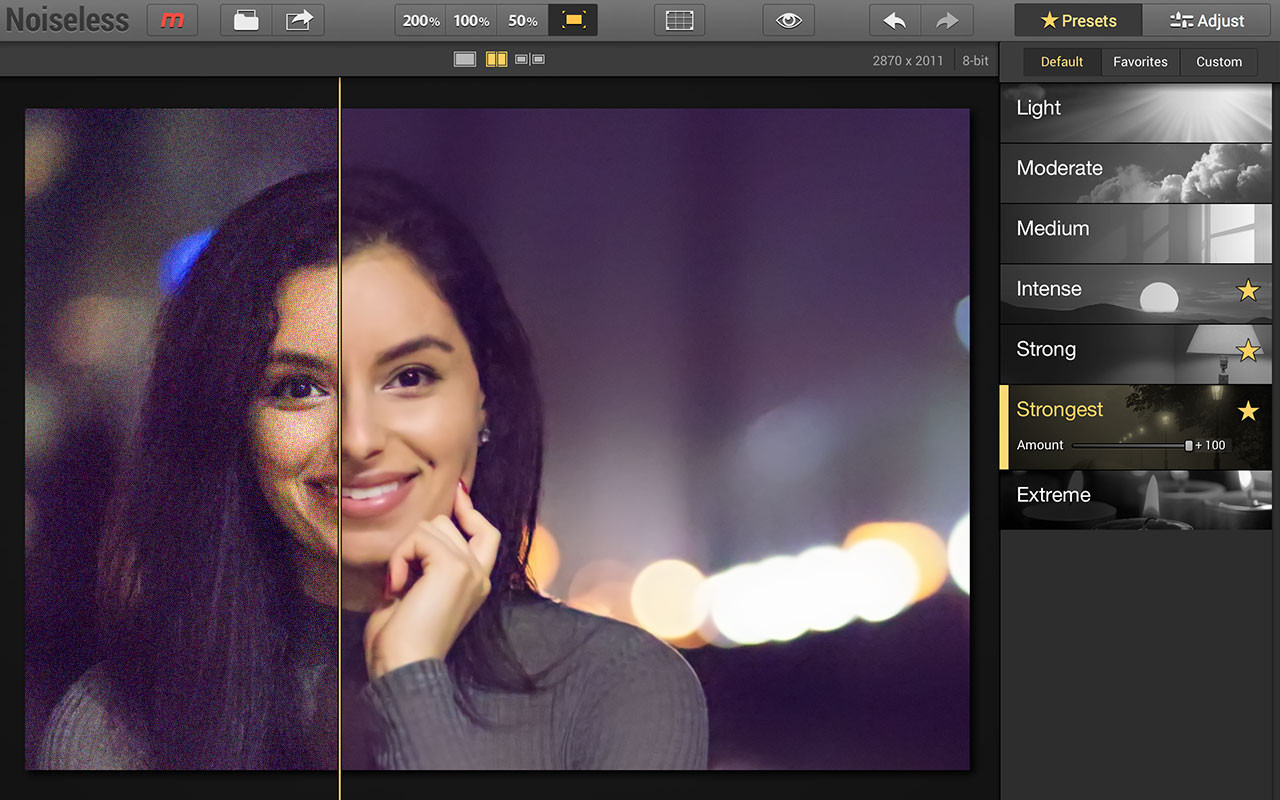

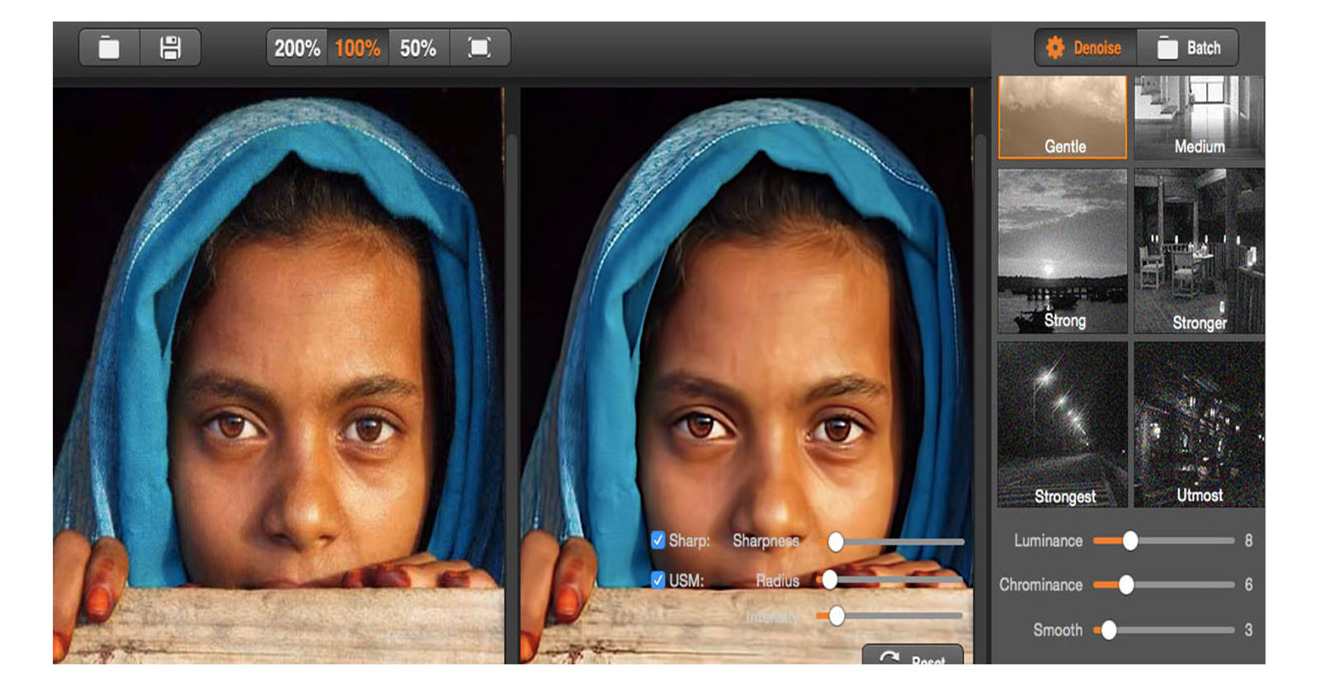

To deal with that noise, Apple applies noise reduction processing to the images.

The most common drawback of noise reduction is that fine details lose their sharpness and look soft, which is essentially what people are calling skin smoothing.
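To see why noise reduction softens detail, consider the crudest possible denoiser, a moving-average (box) blur. This is a stand-in for illustration only, not Apple's actual noise reduction, which is not public. The filter cannot tell random noise from fine texture, so it shrinks both. The "texture" and "noise" signals below are hypothetical. Because the filter is linear, blurring them separately gives the same result as blurring their sum.

```python
import random
import statistics

def box_blur(signal: list[float], radius: int = 1) -> list[float]:
    """Moving-average noise reduction; windows shrink at the edges."""
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out

rng = random.Random(0)
n = 10_000
texture = [0.05 if i % 2 == 0 else -0.05 for i in range(n)]  # hypothetical fine skin detail
noise = [rng.gauss(0.0, 0.05) for _ in range(n)]             # hypothetical high-ISO noise

smoothed_noise = box_blur(noise)
smoothed_texture = box_blur(texture)

# Noise spread drops by roughly sqrt(3) with a 3-pixel window...
print(round(statistics.pstdev(noise), 3), round(statistics.pstdev(smoothed_noise), 3))
# ...but the fine texture amplitude also drops, from 0.05 to a third of that:
print(round(max(abs(v) for v in smoothed_texture[1:-1]), 4))  # 0.0167
```

Real denoisers are far more selective than a box blur, but the same trade-off applies. The harder they push against noise, the more fine texture such as skin pores gets flattened.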

The skin-smoothing effect is more noticeable on the front-facing camera because its sensor is a lot smaller than the one on the rear, so even less light reaches it.
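Light collection and noise are linked. Under a photon shot-noise model, a pixel that collects N photons has noise of about √N, so its signal-to-noise ratio is also √N. N scales with light-collecting area and shutter time, so less area or a shorter exposure both mean a noisier image. This sketch ignores read noise, dark current and processing. The flux and area figures are hypothetical and are not Apple sensor specifications.

```python
import math

def shot_noise_snr(photon_flux_per_um2_s: float, pixel_area_um2: float, shutter_s: float) -> float:
    """SNR under a shot-noise-only model: N = flux * area * time, SNR = sqrt(N).

    ISO gain scales signal and noise together, so it does not appear here.
    """
    photons = photon_flux_per_um2_s * pixel_area_um2 * shutter_s
    return math.sqrt(photons)

# Hypothetical figures: a pixel with a quarter of the area collects a quarter of
# the light, which halves the signal-to-noise ratio.
big = shot_noise_snr(10_000, 2.0, 1/30)
small = shot_noise_snr(10_000, 0.5, 1/30)
print(round(big / small, 6))  # 2.0
```

The same model shows why the shorter shutter times described earlier hurt. Halving the exposure time cuts SNR by a factor of √2, before any noise reduction is applied.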

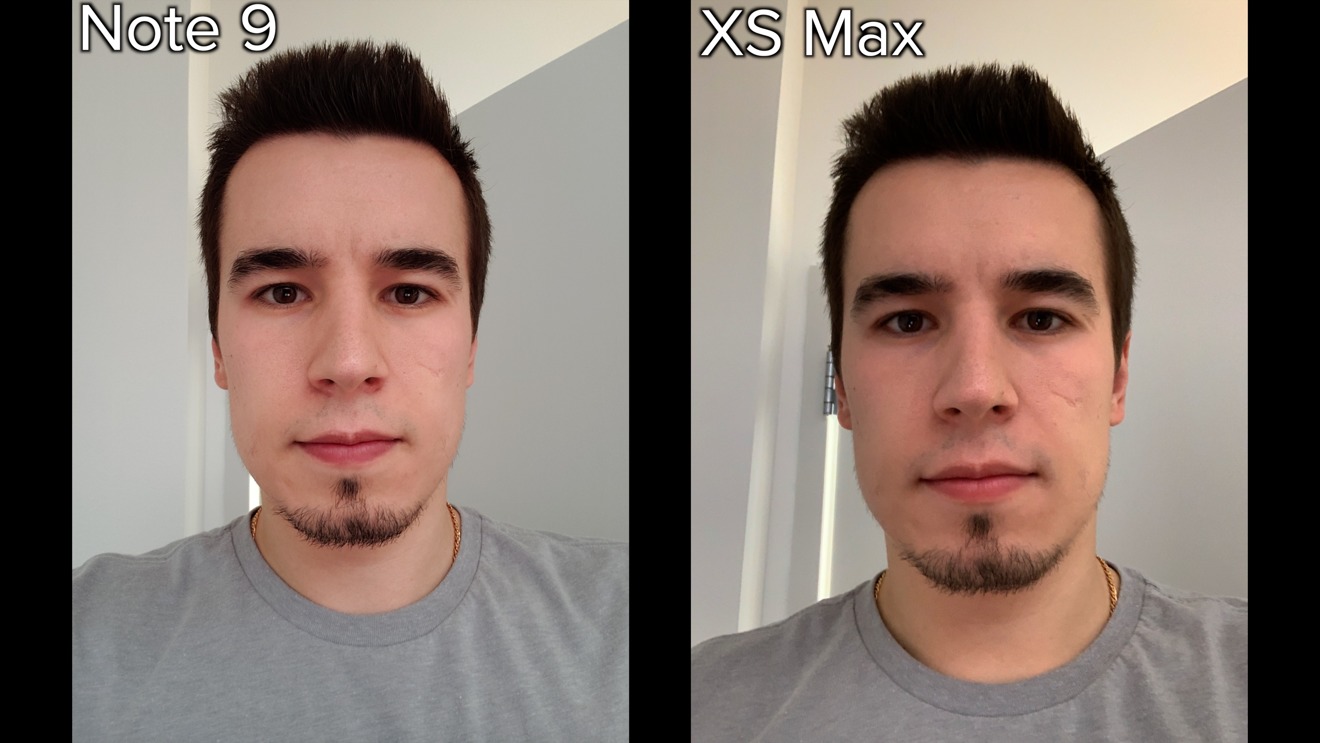

So no, Apple isn't applying a beauty filter. The smoothed-out areas are mostly the result of extra noise reduction, made necessary by new camera behaviors that are likely related to Smart HDR. If we compare a low-light photo from the XS Max with one from a phone like the Galaxy Note 9, we see that the Note 9 applies noise reduction as well, producing a similar beauty-mode effect.

Pronouncement

This only explains half of the Beautygate puzzle. The other half is why the effect appears even more pronounced.

Because Smart HDR captures more frames at different exposures and combines them, it can retain more highlight and shadow detail than ever before. However, merging those frames produces a more balanced image with lower overall contrast.

The same thing happens when shooting video on a professional camera. Footage recorded in a flat profile initially has much less contrast, but it holds a lot more detail in both the shadows and highlights.

Once some contrast is added back in, the image looks just as sharp and has better dynamic range.

Looking at photos from the iPhone X and the XS Max, the iPhone X's photo seems sharper, but on close inspection it isn't. It only looks sharper because it has more contrast.

Contrast is basically the difference in brightness between objects in a photo, and in this example, the bottom half isn't any sharper, it just has added contrast.

A similar effect was found when comparing the XS Max to the Samsung Galaxy Note 9. Photos and video from the XS Max lacked contrast and looked less sharp than those from the Note 9.

In this selfie comparison, the iPhone X's image has more contrast, making it look more detailed.

The iPhone XS Max's lack of contrast makes it look like there's some kind of skin smoothing effect being applied, but if we add some contrast back in, that effect starts to melt away.

So it's actually the lack of contrast that makes the skin look softer in well-lit environments.
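Contrast is easy to quantify, and that makes the "more contrast, not more detail" point concrete. The sketch below uses Michelson contrast, (Lmax − Lmin) / (Lmax + Lmin), one common textbook measure that was chosen here for illustration. It then applies a simple linear contrast boost around mid-grey, a hypothetical stand-in for whatever tone curve a phone or editor actually uses. The pixel row is made up. The boost widens the spread of values without changing their order, so no new detail is created.

```python
def michelson_contrast(pixels: list[float]) -> float:
    """(Lmax - Lmin) / (Lmax + Lmin); assumes positive luminance values."""
    hi, lo = max(pixels), min(pixels)
    return (hi - lo) / (hi + lo)

def stretch_contrast(pixels: list[float], amount: float = 1.5, pivot: float = 0.5) -> list[float]:
    """Linear contrast boost around a mid-grey pivot, clipped to 0..1."""
    return [min(1.0, max(0.0, pivot + (v - pivot) * amount)) for v in pixels]

flat = [0.35, 0.45, 0.50, 0.55, 0.65]  # hypothetical low-contrast ("flat") row of pixels
punchy = stretch_contrast(flat)

print(round(michelson_contrast(flat), 3), round(michelson_contrast(punchy), 3))  # 0.3 0.45
print(punchy == sorted(punchy))  # True: same ordering, so no new detail, just more spread
```

A boost like this is why a higher-contrast photo can look sharper even when it holds no extra detail.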

Changes are possible

Technically, Apple would only need to tweak its software to address the issue, if it chooses to. That would take care of the contrast part of the puzzle, but noise reduction happens on basically every phone's selfie camera in low light.

As for Smart HDR, Apple could tune the feature to work more slowly, allowing the shutter to stay open a bit longer, but that would reduce the dynamic range performance of the new cameras on the XS and XS Max.

Vadim Yuryev
