Microsoft has announced two new cloud services to help administrators detect and manage threats to their systems. The first, Azure Sentinel, is very much in line with other cloud services: it's dependent on machine learning to sift through vast amounts of data to find a signal among all the noise. The second, Microsoft Threat Experts, is a little different: it's powered by humans, not machines.

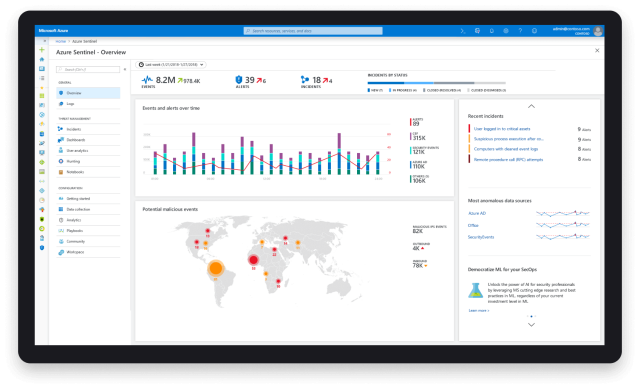

Azure Sentinel is a machine learning-based Security Information and Event Management (SIEM) system. It takes the (often overwhelming) stream of security events, such as a bad password, a failed attempt to elevate privileges, or an unusual executable that's blocked by anti-malware, and distinguishes between important events that actually deserve investigation and mundane events that can likely be ignored.

Sentinel can use a range of data sources. There are the obvious Microsoft sources—Azure Active Directory, Windows Event Logs, and so on—as well as integrations with third-party firewalls, intrusion-detection systems, endpoint anti-malware software, and more. Sentinel can also ingest any data source that uses ArcSight's Common Event Format, which has been adopted by a wide range of security tools.
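To give a sense of what a Common Event Format record looks like, here is a minimal Python sketch that splits one CEF line into its seven pipe-delimited header fields and its key=value extension. The function name `parse_cef` and the field names in `CEF_HEADER_FIELDS` are illustrative choices, and this is not how Sentinel itself ingests data; it only illustrates the record layout and handles the common escaping cases.

```python
import re

# Names for the seven pipe-delimited CEF header fields (labels are our own).
CEF_HEADER_FIELDS = [
    "version", "device_vendor", "device_product", "device_version",
    "signature_id", "name", "severity",
]


def parse_cef(line: str) -> dict:
    """Parse one CEF record into header fields plus an 'extension' dict."""
    start = line.find("CEF:")
    if start == -1:
        raise ValueError("not a CEF record")
    body = line[start + len("CEF:"):]

    # Split on pipes that are not preceded by a backslash. Seven splits give
    # seven header fields and leave the extension as the final part.
    # Simplification: a field ending in an escaped backslash is not handled.
    parts = re.split(r"(?<!\\)\|", body, maxsplit=7)
    if len(parts) < 7:
        raise ValueError("incomplete CEF header")

    header = [p.replace("\\|", "|").replace("\\\\", "\\") for p in parts[:7]]
    record = dict(zip(CEF_HEADER_FIELDS, header))

    # The extension is a run of key=value pairs; a value may contain spaces,
    # so each value runs until the next " key=" or the end of the line.
    extension = parts[7] if len(parts) > 7 else ""
    pairs = re.findall(r"(\w+)=(.*?)(?=\s+\w+=|\s*$)", extension)
    record["extension"] = {k: v.replace("\\=", "=") for k, v in pairs}
    return record


sample = (
    "Sep 19 08:26:10 host CEF:0|Security|threatmanager|1.0|100|"
    "worm successfully stopped|10|src=10.0.0.1 dst=2.1.2.2 spt=1232"
)
record = parse_cef(sample)
print(record["name"], record["extension"]["src"])
```

Any text before `CEF:` (here a syslog-style timestamp and host name) is ignored, since CEF records are commonly carried inside syslog messages.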

Azure Sentinel is available in preview today from within the Azure Portal. During the preview it's free to use, and Microsoft hasn't yet decided how to price it once it goes live.

Threat Experts is a new feature for Windows Defender Advanced Threat Protection (ATP), and it has two elements. The first, targeted attack notifications, uses a mix of machine-learning systems and human oversight (using anonymized data) to alert administrators specifically to targeted attacks: malicious activity that's aimed at a particular organization (say, an employee trying to break into a system to access data they shouldn't see), rather than being part of a broader, mass-target campaign (such as self-propagating ransomware).

The second element is an "Ask a Threat Expert" button in the Windows Defender Security Center. Seeing signs of an attack that your anti-malware isn't trapping and need help investigating? Click Ask a Threat Expert and you'll be put in touch with a real human who can help you understand what's going on and how to respond and, if necessary, hand you over to Microsoft's Incident Response service.

The Threat Experts bring human expertise not merely to identify suspicious behavior but to actually investigate it. Machine learning might be good at monitoring logs and events to find signs of, say, lateral movement (an attacker reusing stolen credentials to explore an organization's network) or network connections to unexpected IP addresses. Threat Experts go beyond this to help determine what the initial point of ingress was, the security flaws that enabled that ingress, and how an attacker is achieving persistent access to compromised systems. For now, at least, these are inferences and investigations that people do better than computers.
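As a rough illustration of the kind of signal automated monitoring can surface, the toy Python sketch below flags accounts that log on to an unusually large number of distinct hosts, one crude hint of lateral movement. It is a fixed-threshold rule rather than machine learning, and it is not Microsoft's method; the function name, the `(account, host)` input shape, and the threshold are all assumptions made for the example.

```python
from collections import defaultdict


def flag_possible_lateral_movement(logons, max_hosts=3):
    """Return, sorted, the accounts seen on more than max_hosts distinct hosts.

    logons is an iterable of (account, host) pairs, a hypothetical simplified
    view of logon events.
    """
    hosts_by_account = defaultdict(set)
    for account, host in logons:
        hosts_by_account[account].add(host)
    return sorted(
        account
        for account, hosts in hosts_by_account.items()
        if len(hosts) > max_hosts
    )


events = [
    ("alice", "ws1"), ("alice", "ws1"),
    ("svc", "a"), ("svc", "b"), ("svc", "c"), ("svc", "d"),
]
print(flag_possible_lateral_movement(events))
```

A rule like this can say that something looks odd, but not how the account was compromised in the first place or how the attacker is staying in, which is the part that still falls to human investigators.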

The big problem with people is, of course, scalability. With the computer-driven Sentinel, Microsoft can just stick it on the Azure Portal and let people give it a try, using Azure's massive compute infrastructure to provide the necessary scaling. Threat Experts requires the use of, well, real computer security experts, and these can't be spun up as quickly as a new virtual machine or container. Accordingly, the preview program is much more limited; interested organizations have to apply to be in the preview and then wait for approval.
