Intel Unleashes 56-Core Xeon, Optane DC Memory, Agilex FPGAs To Accelerate AI And Big Data

Intel Cascade Lake Xeon Scalable Processors Arrive

The star of the show is Cascade Lake, the successor to Skylake-SP. Although the underlying CPU core architecture is largely unchanged, Cascade Lake incorporates a number of key updates and enhancements: a newly overhauled integrated memory controller that adds support for Optane DC Persistent Memory, new Vector Neural Network Instructions, hardware mitigations for a few Spectre and Meltdown variants, and new multi-chip packages that double the maximum number of cores per socket over the previous generation, to 56.

As mentioned, Intel is launching a many-pronged assault targeting a wide range of markets and potential use cases. We will attempt to provide details and some background on the key announcements on the pages ahead, but the accompanying slide breaks down the key products Intel is launching or announcing today. One of the products we won't delve too deeply into is the dual-port Intel Optane DC SSD. That product is very similar to the existing Optane DC P4800X SSD, but is equipped with dual ports to maximize performance, resiliency, and reliability (a picture of the actual drive is embedded on the last page here if you'd like to see it in the flesh).

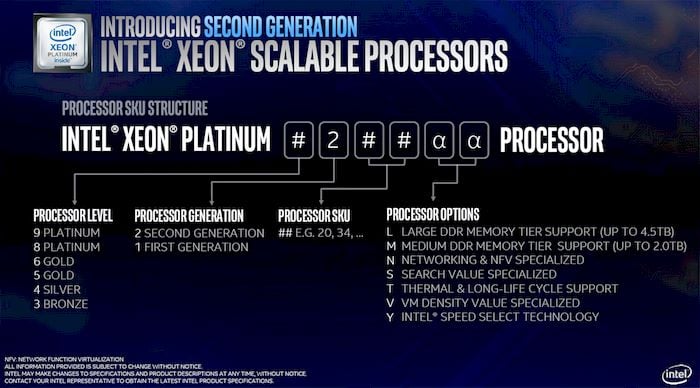

Intel has a dizzying array of upcoming products with different configurations and feature sets. To help make heads or tails of all the model numbers, here is what essentially amounts to a decoder ring for Intel's naming convention. As is typically the case, higher model numbers usually denote higher-clocked processors or those with more cores. The letter suffixes at the end of a model number indicate which processor options are enabled. An Intel Xeon 8260Y, for example, is a Platinum-class, second-generation CPU with Intel Speed Select Technology enabled.
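Because the naming convention is positional (tier digit, generation digit, SKU, then option letters), it maps neatly onto a small lookup-table decoder. The Python sketch below is hypothetical: `decode_xeon_model` and its tables are illustrative names, not an Intel tool. Only the Platinum tier, the second-generation digit, and the Y suffix are confirmed by this article; the other tier digits and suffix letters reflect our understanding of Intel's public naming scheme and should be checked against Intel's own decoder before relying on them.

```python
import re

# Tier digit -> processor class. "8" (Platinum) is from the article; the
# other entries are assumptions based on Intel's public naming scheme.
TIERS = {
    "9": "Platinum",  # dual-die Platinum 9200 series
    "8": "Platinum",
    "6": "Gold",
    "5": "Gold",
    "4": "Silver",
    "3": "Bronze",
}

# Partial suffix table. Only "Y" is confirmed by the article; the other
# letters are assumptions to verify against Intel's documentation.
SUFFIXES = {
    "Y": "Intel Speed Select Technology",
    "M": "Medium memory capacity tier",
    "L": "Large memory capacity tier",
    "N": "Networking/NFV specialized",
    "T": "Long-life / extended thermal",
    "U": "Single-socket only",
}

# Four digits (tier, generation, two-digit SKU) followed by optional letters.
MODEL_RE = re.compile(r"^(\d)(\d)(\d{2})([A-Z]*)$")


def decode_xeon_model(model: str) -> dict:
    """Split a Xeon Scalable model number into tier, generation, SKU and features."""
    match = MODEL_RE.match(model.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized model number: {model!r}")
    tier_digit, gen_digit, sku, suffix = match.groups()
    if tier_digit not in TIERS:
        raise ValueError(f"unknown tier digit {tier_digit!r} in {model!r}")
    # Unknown suffix letters are reported rather than silently dropped.
    features = [SUFFIXES.get(letter, f"unknown suffix {letter!r}") for letter in suffix]
    return {
        "tier": TIERS[tier_digit],
        "generation": int(gen_digit),  # 1 = Skylake-SP, 2 = Cascade Lake
        "sku": sku,
        "features": features,
    }


print(decode_xeon_model("8260Y"))
# {'tier': 'Platinum', 'generation': 2, 'sku': '60', 'features': ['Intel Speed Select Technology']}
```

A table-driven design keeps the decoder easy to extend: adding a newly announced suffix is a one-line change to `SUFFIXES` rather than new parsing logic.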

There are multiple types of 2nd Generation Intel Xeon Scalable processors launching today; the most powerful options reside in the 8200 and 9200 series. The Xeon Platinum 8200 series can be considered the spiritual successor to the original Skylake-SP-based Xeon Scalable processors, which launched in 2017. The 8200 series features a single die with up to 28 cores (56 threads) per CPU, but is clocked higher than the first-gen Xeon Scalable processors. As mentioned, these new Cascade Lake processors also add support for Optane DC Persistent Memory, new Vector Neural Network Instructions (VNNI) dubbed Deep Learning Boost, and hardware mitigations for a few Spectre and Meltdown variants. All of the processors continue to be manufactured on Intel's 14nm process node, though Intel has tweaked and tuned the dials to maximize frequencies and efficiency. The Xeon Platinum 9200 series takes things a step further by incorporating two dies on a single BGA package, for up to 56 cores (112 threads) per socket. That also means double the amount of cache per socket. Whereas Xeon Platinum 8200 series processors pack up to 38.5MB of smart cache, the 9200 series tops out at a whopping 77MB.

The processors also support up to 3TB of memory per socket, with up to 12 memory channels on a 9200 series CPU (six channels per CPU die). 8200 series processors will feature TDPs ranging from 70 watts up to 205 watts, while the dual-die 9200 series will scale from 250 watts up to 400 watts; a breakdown of the entire stack is available on our closing page here.
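The dual-die 9200 figures follow directly from doubling a single die's resources. The minimal Python sketch below reproduces that arithmetic from the per-die numbers quoted in this article; `DieSpec` and `package_totals` are hypothetical names for illustration, and the model assumes the dies' resources simply add up, ignoring any cross-die latency or bandwidth effects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DieSpec:
    """Per-die figures quoted in the article for a top Cascade Lake die."""
    cores: int
    threads_per_core: int
    cache_mb: float
    memory_channels: int


def package_totals(die: DieSpec, dies: int) -> dict:
    """Scale per-die resources to a multi-die package by simple multiplication."""
    return {
        "cores": die.cores * dies,
        "threads": die.cores * die.threads_per_core * dies,
        "cache_mb": die.cache_mb * dies,
        "memory_channels": die.memory_channels * dies,
    }


# 28 cores / 56 threads, 38.5MB smart cache, six memory channels per die.
CASCADE_LAKE_DIE = DieSpec(cores=28, threads_per_core=2, cache_mb=38.5, memory_channels=6)

platinum_8200 = package_totals(CASCADE_LAKE_DIE, dies=1)
platinum_9200 = package_totals(CASCADE_LAKE_DIE, dies=2)

# Assumes binary units (1TB = 1024GB) when spreading 3TB over the channels.
per_channel_gb = 3 * 1024 / platinum_9200["memory_channels"]

print(platinum_9200)   # {'cores': 56, 'threads': 112, 'cache_mb': 77.0, 'memory_channels': 12}
print(per_channel_gb)  # 256.0
```

Spreading the 3TB per-socket ceiling across 12 channels works out to roughly 256GB per channel, under the binary-unit assumption noted in the code.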

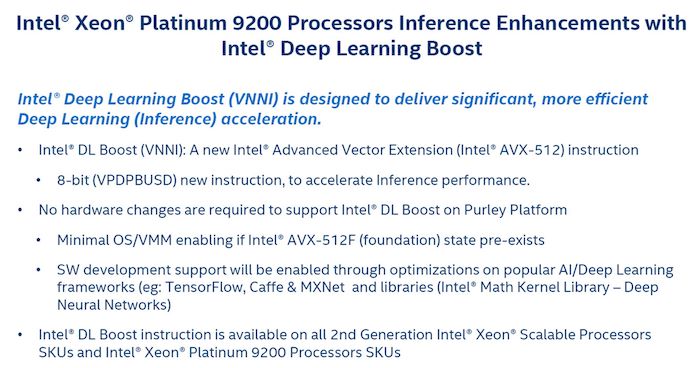

In addition to support for Optane DC Persistent Memory, which we'll cover in more detail on the next page, a key new feature of these processors is Intel Deep Learning Boost technology. Intel expects AI to become one of the largest workloads in the data center, and not only in the core data center: it will eventually spread out to the edge as well.

To better accelerate AI / deep learning inference workloads, Cascade Lake's instruction set adds the Vector Neural Network Instructions (VNNI), an extension to AVX-512. DL Boost will be available on all 2nd Gen Xeon Scalable processors, and Intel has worked to enable software optimizations for many of the most popular AI / deep learning frameworks. When workloads are optimized to use DL Boost, Intel claims the performance improvements can be significant.
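The core of DL Boost is the VNNI instruction VPDPBUSD, which multiplies four unsigned 8-bit values by four signed 8-bit values, sums the four products, and adds the result to a 32-bit accumulator in a single instruction; on Skylake-SP the same step took a three-instruction sequence (VPMADDUBSW, VPMADDWD, VPADDD). The Python sketch below is a scalar model for illustration only: it assumes the non-saturating variant, ignores vector width and write masking, and `vpdpbusd` and `to_int32` are hypothetical helper names rather than a real library API.

```python
from __future__ import annotations


def to_int32(value: int) -> int:
    """Wrap an arbitrary Python int to a signed 32-bit value (two's complement)."""
    value &= 0xFFFFFFFF
    return value - (1 << 32) if value & 0x80000000 else value


def vpdpbusd(acc: list[int], a_u8: list[int], b_s8: list[int]) -> list[int]:
    """Scalar model of the non-saturating VNNI dot product.

    Each 32-bit lane i accumulates the sum of four products
    a_u8[4i + j] * b_s8[4i + j] for j in 0..3, where a holds unsigned
    8-bit values and b holds signed 8-bit values.
    """
    if len(a_u8) != 4 * len(acc) or len(b_s8) != 4 * len(acc):
        raise ValueError("need exactly four byte pairs per 32-bit lane")
    if any(not 0 <= x <= 255 for x in a_u8):
        raise ValueError("a_u8 values must be unsigned 8-bit")
    if any(not -128 <= x <= 127 for x in b_s8):
        raise ValueError("b_s8 values must be signed 8-bit")

    result = []
    for lane, total in enumerate(acc):
        base = 4 * lane
        dot = sum(a_u8[base + j] * b_s8[base + j] for j in range(4))
        result.append(to_int32(total + dot))  # wraps on overflow, no saturation
    return result


# Two lanes: a small dot product, and the most negative possible group sum.
print(vpdpbusd([0, 10], [1, 2, 3, 4, 255, 255, 255, 255], [1, 1, 1, 1, -128, -128, -128, -128]))
# [10, -130550]
```

Real INT8 inference code reaches this instruction through compiler intrinsics or an optimized framework library rather than Python; the model simply shows how one instruction fuses the byte multiplies, the horizontal add, and the accumulate that previously required three.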

Generation over generation, Intel claims Cascade Lake with DL Boost delivers performance increases of more than 2x, with some workloads showing a nearly 4x improvement. Looking back from 2017 to today's launch, Intel is also quick to point out that the combination of hardware and software optimizations has drastically changed the performance proposition for DL workloads on Intel server platforms: measured against a 2017 baseline, some workloads have realized DL performance gains in the neighborhood of 30x.

Another new feature coming to some of the Xeons in Intel's lineup is Speed Select Technology, or SST. Intel SST lets users designate higher-priority cores, which can maintain higher turbo and base clock states to boost performance when power and thermal headroom allow. SST offers frequency prioritization and the ability to select the base frequency for the higher-priority cores. The parameters must be defined at boot time and chosen from a subset of options, but customers can request different profiles if Intel's predefined options don't meet their needs.

Intel QuickAssist Technology is also arriving, built into a new family of Xeon D-1600 series processors. QuickAssist is essentially a built-in acceleration engine for cryptographic ciphers and authentication, public key cryptography and key management, and compression. Each logical QuickAssist accelerator engine can support up to 30Gb/s of throughput.

Xeon D-1600 series processors (code-named Hewitt Lake) are lower-core-count, lower-power cousins of the Xeon Scalable series processors. They are designed for space- and/or power-constrained environments, such as edge network devices and base stations.

We haven't been able to independently test Intel's latest Xeon Scalable processors just yet, but the company did provide an array of data showing the new high-end Xeon Platinum 9242 significantly outpacing AMD's current-gen EPYC platform in a variety of workloads. AMD does have Rome coming down the pipeline, however, which features a newer architecture, double the cores, and a new memory / I/O configuration, and it will change the landscape once again when it arrives later this year. For now, the initial performance data provided by Intel does look promising.