AMD’s second-generation Epyc processor, code-named “Rome,” has yet to be released into the wild, but it is already racking up some impressive wins in academic supercomputing.

Last week, Indiana University and Norway’s Uninett Sigma2 each procured a 5.9-petaflop supercomputer powered by the upcoming Rome processors. Both will provide university researchers in their respective geographies access to petascale computing when they become operational in 2020. But that’s where the similarities end.

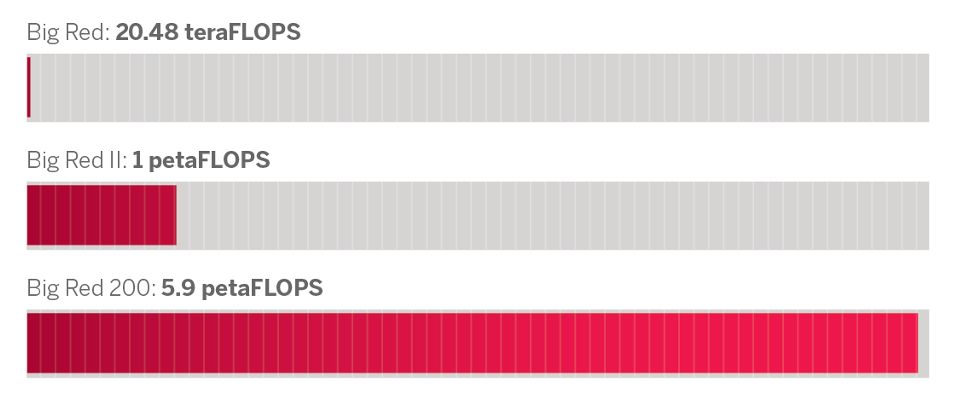

The IU system, known as Big Red 200, will be based on Cray’s next-generation Shasta platform and is being funded to the tune of $9.6 million using money from federal contracts and grants. When it comes into production early next year, it will replace Big Red II, the university’s current flagship HPC system. Big Red II is also an AMD-based Cray machine, in this case a 1.0 petaflops Cray XK7 powered by 16-core AMD Opteron processors from days gone by. The 2013-era system includes Nvidia Tesla K20x GPUs as accelerators.

In that regard, Big Red 200 is very much a modern rendition of its predecessor, with the Opteron chips replaced by the Epyc Rome, the K20x by the current Nvidia Tesla V100, and Cray’s “SeaStar+” Gemini interconnect by Slingshot, which has yet to be given a product name. However, neither Cray nor IU provided any information regarding how many GPUs or CPUs are in the system, or for that matter, the node count, the memory capacity, or any particulars about the storage. That suggests the final configuration is still up in the air.

According to Matthew Link, the associate VP for IU’s University Information Technology Services, the choice of the Epyc Rome CPUs was at least partially based on their generous core count (64 cores in the top-of-the-line SKUs) and, as he puts it, “to give us the most bang for the buck.”

“We have a lot of medical researchers that don’t do massive-scale MPI, but they do a lot of node-level parallelism jobs,” Link told us. “I think being able to service those in a single node is beneficial to them.”

Right now, Rome’s counterpart is Intel’s Cascade Lake-SP Xeon processor, which tops out at 28 cores and is likely to be a good deal pricier than what AMD will charge for its top-bin Rome SKUs. The Cascade Lake-AP line offers up to 56 cores, thanks to packaging two dies in the processor, but at 400 watts per socket, these are likely to be used only in special-purpose servers. By the end of the year Intel hopes to have the Cooper Lake-SP Xeon out the door, but since it will be manufactured on the same (or similar) 14nm process technology as Cascade Lake, it’s not likely to offer that many more cores or significantly better price/performance compared to its predecessor. Although we could be wrong about that.

Big Red 200 is being positioned as an “AI supercomputer,” which these days could ostensibly apply to any decent-sized HPC cluster outfitted with V100 GPUs. In this case, the machine’s AI smarts will be used to support medical research in areas like molecular genetics, medical imaging, and brain physiology. The system is slated to be the key computational resource for the university’s Precision Health Initiative, a biomedical research program that will explore disease prevention via a range of genetic, environmental, and behavioral factors.

The AI capabilities will also be applied to research in environmental science, such as studies in climate change, as well as in cybersecurity applications. Regarding the latter, the university’s Center for Applied Cybersecurity Research will be one of the early users of the system.

Big Red 200 is scheduled to be up and running on January 20, 2020, the university’s bicentennial.

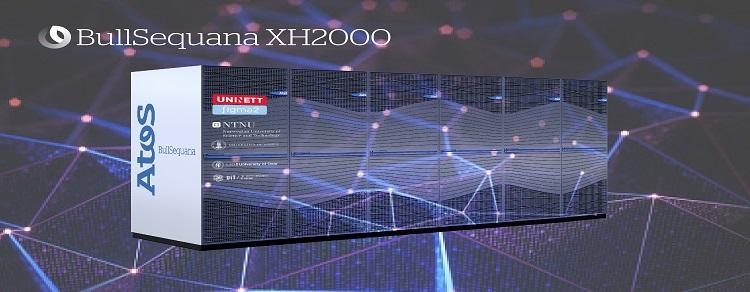

The Norwegian supercomputer is a different animal altogether. It’s being purchased by Uninett Sigma2, which manages the country’s HPC infrastructure for research projects being performed at the University of Bergen, the University of Oslo, the University of Tromsø and the Norwegian University of Science and Technology (NTNU). Although also powered by Rome processors, here the delivery vehicle for the AMD silicon is an Atos-built BullSequana XH2000.

It will consist of 1,344 nodes, each equipped with two 64-core 2.2 GHz Epyc processors (with no GPUs), and hooked together with Mellanox 200 Gb/sec HDR InfiniBand. Total memory capacity is 372 TB, backed by 2.5 PB of storage from DataDirect Networks using the Lustre parallel file system. The whole machine is projected to draw just under 1 megawatt (952 kilowatts) when running at full tilt.
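For readers who want to sanity-check those figures, here is a rough back-of-the-envelope sketch in Python. The node count, socket count, core count, clock speed, memory, and power numbers come from the configuration above. The figure of 16 double-precision floating point operations per core per clock cycle is our assumption, not something Atos or Uninett Sigma2 stated, and the variable and function names are purely illustrative.

```python
# Back-of-the-envelope estimates for the Uninett Sigma2 system.
# Configuration figures are taken from the article text.
NODES = 1_344
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 64
CLOCK_GHZ = 2.2
TOTAL_MEMORY_TB = 372
TOTAL_POWER_KW = 952

# ASSUMPTION (not from the article): each core retires 16
# double-precision FLOPs per clock cycle at the quoted base clock.
FLOPS_PER_CYCLE = 16


def peak_petaflops(nodes, sockets, cores, clock_ghz, flops_per_cycle):
    """Theoretical peak = cores x clock rate x FLOPs per cycle."""
    flops = nodes * sockets * cores * clock_ghz * 1e9 * flops_per_cycle
    return flops / 1e15


total_cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
peak_pf = peak_petaflops(
    NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET, CLOCK_GHZ, FLOPS_PER_CYCLE
)
# Decimal units: 1 TB = 1,000 GB and 1 kW = 1,000 W.
mem_per_node_gb = TOTAL_MEMORY_TB * 1_000 / NODES
power_per_node_w = TOTAL_POWER_KW * 1_000 / NODES

print(f"Total cores:        {total_cores:,}")
print(f"Estimated peak:     {peak_pf:.2f} petaflops")
print(f"Memory per node:    {mem_per_node_gb:.0f} GB")
print(f"Power per node:     {power_per_node_w:.0f} W")
```

Under that assumption the estimate comes out at roughly 6 petaflops, in the same neighborhood as the quoted 5.9 petaflops but not an exact match. The gap may come down to rounding or to a different clock or per-cycle figure in the vendor's own calculation.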

When installed at NTNU in the spring of 2020, the BullSequana XH2000 will provide more than five times the theoretical performance of Uninett Sigma2’s previous big system, Fram. At 1.1 petaflops (peak), that Lenovo-built machine is currently the fastest supercomputer in Norway. It is powered by more than 2,000 “Broadwell” Xeon processors from Intel and is equipped with 78 TB of main memory. Fram started production in 2017.

According to Atos, most of the energy used to cool the BullSequana machine will be recovered by recycling the hot water from the XH2000’s Direct Liquid Cooling system to heat buildings on the NTNU campus in Trondheim. To further boost its green credentials, the system is to be powered exclusively by hydroelectricity.

According to Uninett Sigma2 general manager Gunnar Bøe, the new supercomputer will be used to further research in areas like climate and weather, drug discovery, language, psychology, and other disciplines in the humanities. “Sigma2’s new supercomputer is a step towards finding the answers to the major challenges facing society,” says Bøe.

The Big Red 200 and Uninett Sigma2 systems are not the only Rome-powered supercomputers headed to HPC centers at academic institutions. Both will be dwarfed by a 24-petaflop supercomputer, built by Hewlett Packard Enterprise, that is to be installed at the High-Performance Computing Center of the University of Stuttgart (HLRS). That system, called Hawk, will consist of 5,000 dual-socket nodes. It will support academic researchers and industry specialists in Germany in areas such as energy, climate, health, and mobility. Hawk was announced in November 2018 and is expected to be up and running before the end of this year.
