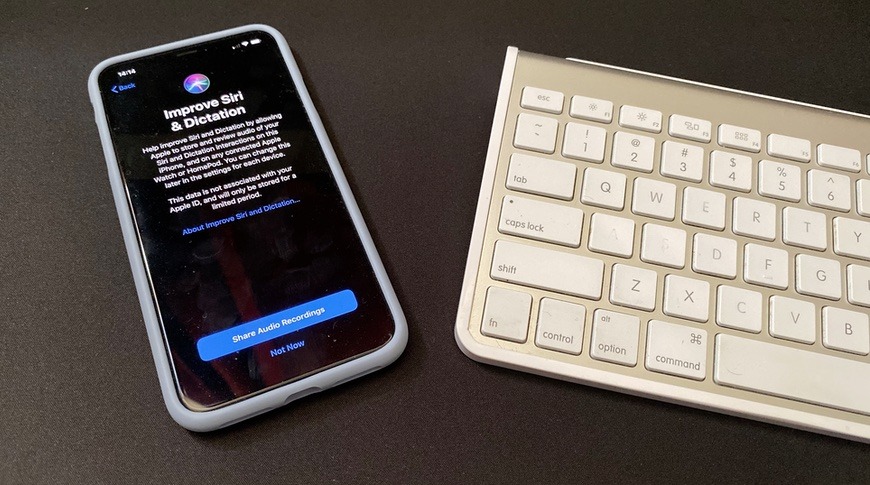

Siri quality assurance opt-in and recording deletion options in the iOS 13.2 beta

In the latest beta of iOS 13.2, Apple has started asking users whether they want to take part in the Siri quality assurance program. The policy change follows criticism of the iPhone maker's use of contractors to listen to a selection of Siri audio recordings.

Released on Thursday, the second developer beta of iOS 13.2 includes a number of features relating to Deep Fusion photography, as well as new emoji. The update also includes some privacy-focused changes, specifically concerning Siri.

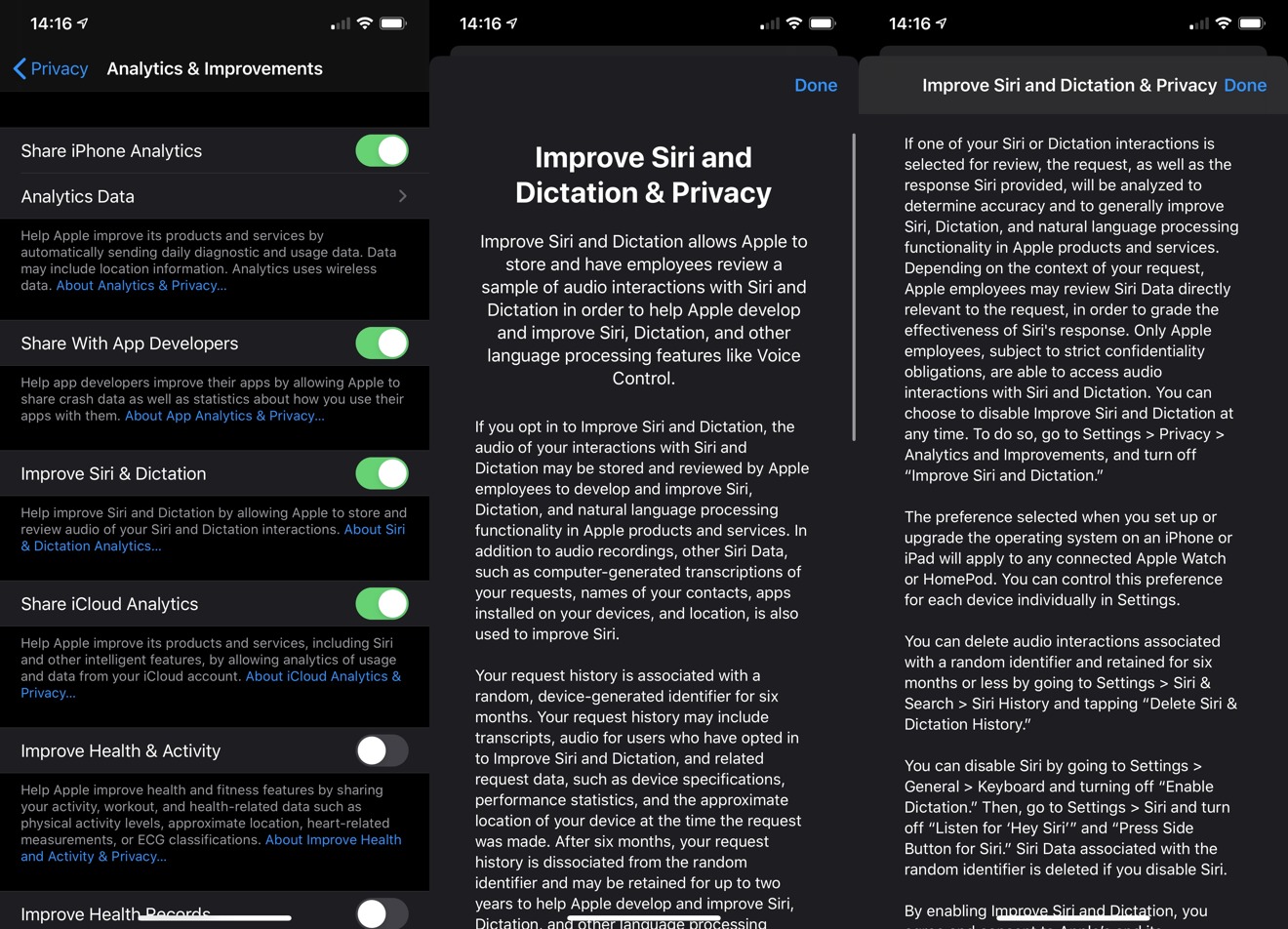

In August, Apple said it would add an option in iOS that would let users provide anonymized audio recordings to the Siri quality assurance program. The announcement followed criticism that the program had existed without apparently being disclosed to users.

A report in July revealed details of a Siri grading program, in which contractors were paid to listen to snippets of Siri queries uploaded from the iPhone, HomePod, and other devices. By listening, the contractors helped improve Siri by flagging when the assistant had been triggered without a verbal prompt or had misheard a query, with the aim of minimizing such instances in the future.

The report alleged that some users could be identified from the content of the recordings, despite the steps Apple took to anonymize the collected data.

Shortly after the allegations surfaced, Apple suspended the grading program pending a review, and promised to add an opt-in or opt-out option for the scheme in a future software update.

At the end of August, Apple completed its review and confirmed the changes it would make. These included an option to opt in to audio sample analysis, which users could opt out of at any time, and a rule that only Apple employees would be allowed to listen to the samples. Apple would also no longer retain audio recordings of Siri interactions, instead using computer-generated transcripts to improve Siri, and would "work to delete" any recording determined to be an inadvertent trigger of Siri.

Malcolm Owen