Nvidia wins new AI inference benchmark for data center and edge SoC workloads

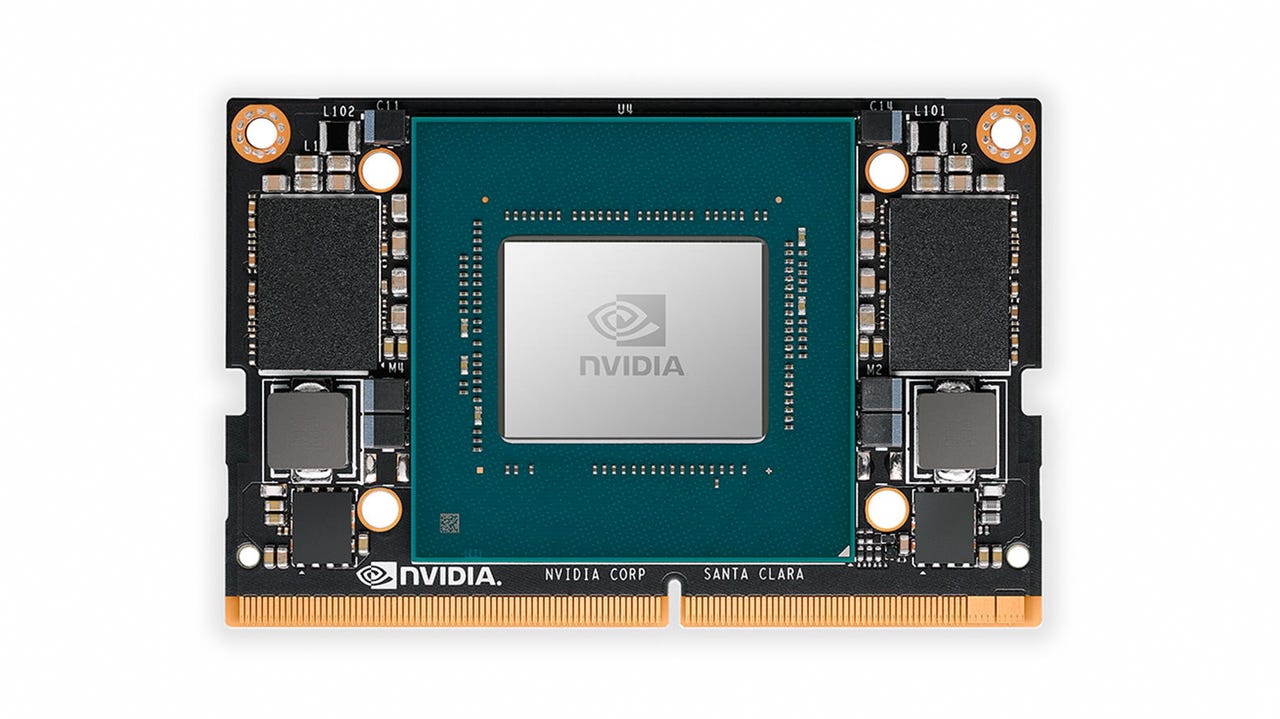

The Jetson Xavier NX

Nvidia is touting another win on the latest set of MLPerf benchmarks released Wednesday. The GPU maker said it posted the fastest results on new MLPerf inference benchmarks, which measured the performance of AI inference workloads in data centers and at the edge.


MLPerf's five inference benchmarks, applied across four inferencing scenarios, covered AI applications such as image classification, object detection and translation. With its Turing GPUs, Nvidia topped all five benchmarks in both data center-focused scenarios (server and offline).

Meanwhile, the Xavier SoC turned in the highest performance among the commercially available edge and mobile SoCs submitted to MLPerf under the edge-focused single-stream and multi-stream scenarios.

"Modern AI inference is really hard," Paresh Kharya, director of product marketing for Nvidia's accelerated computing business, told reporters this week. "The diversity of neural networks getting deployed today is immense, and the complexity is also immense. And as we move into the more complex and more interesting use cases like conversational AI, the complexity of these models is just increasing tremendously."

MLPerf is a broad benchmark suite for measuring the performance of machine learning (ML) software frameworks (such as TensorFlow, PyTorch and MXNet), ML hardware platforms (including Google TPUs, Intel CPUs and Nvidia GPUs) and ML cloud platforms. Several companies, along with researchers from institutions such as Harvard, Stanford and the University of California, Berkeley, first agreed to support the benchmarks last year. The goal is to give developers and enterprise IT teams information that helps them evaluate existing offerings and guide future development.

As for the latest inference benchmark, Nvidia credits the programmability of its platform across a range of AI workloads for its strong performance.

"AI is not just about accelerating a neural network phase of the AI application, it is about accelerating the whole end-to-end pipeline," said Kharya. "At Nvidia, we are focused on providing a single architecture and single programming platform and optimization for all of the range of products that we offer."

Nvidia also unveiled the latest member of its Jetson series for autonomous and embedded systems: the Jetson Xavier NX, which the company describes as delivering Xavier performance in a nano-sized form factor. The module, roughly the size of a credit card, delivers up to 21 TOPS and consumes as little as 10 watts of power.

Nvidia said the Jetson Xavier NX is ideal for robotics and embedded applications with tight power and size constraints, including drones, high-resolution cameras and sensors, and video analytics systems. The module will be available in March 2020 for $399.
