A7: How Apple's custom 64-bit silicon embarrassed the industry

After delivering just the first three generations of its custom ARM Application Processors between 2010 and 2012, Apple had already reached parity with market-leading mobile chip designers, even while breaking from the Cortex-A15 road map established by ARM to launch its own new Swift core. Apple's next moves embarrassed the industry even further while setting the stage for initiatives that are playing out today.

Peak Samsung

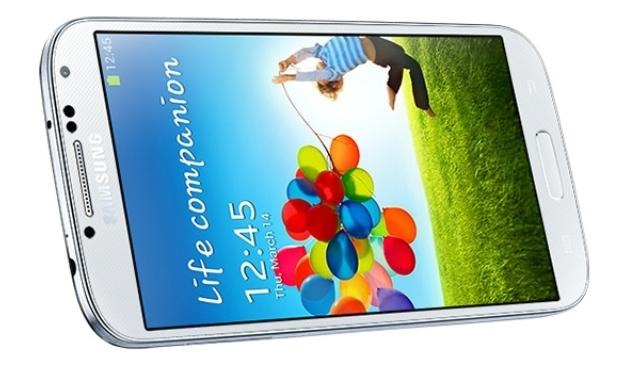

Following Apple's A4, A5, A5X and the A6 powering iPhone 5 at the end of 2012, in the spring of 2013 Samsung ended up being the first to market with a big.LITTLE Cortex-A15 SoC design, which it sold as the Exynos 5 Octa and used in its Galaxy S4 phone. Samsung touted its eight cores as being an obvious benefit over Apple's paltry two cores on the A6 and A6X.

Pundits argued that Samsung's new chip would give it broad advantages in both performance and manufacturing costs, because Apple was paying Samsung to build its A6 and A6X while Samsung was building Exynos 5 Octa both for itself and for sale to others. Journalists had a hard time evaluating which company was leading in chip design because Apple kept many of the details of its chips secret. That led them to trust whatever Samsung said.

The Galaxy S4 reached a new peak in lifetime sales for Samsung, at 80 million units, making it the most successful Android model ever in terms of volume. It had also introduced LTE-Advanced ahead of Apple and others, focusing on modem speed rather than CPU performance. At the same time, Samsung overclocked its Exynos 5 Octa to deliver benchmarks that exceeded Qualcomm's Snapdragon 600 (with a little help from some benchmark cheating), and it decided to switch from ARM Mali to PowerVR graphics on par with Apple's A6, albeit underclocked.

It appeared that Samsung was creating a luxury-tier smartphone of its own, something only Apple had previously accomplished consistently and in volume. Samsung was also touting many of its own ideas, including an infrared blaster that enabled the Galaxy S4 to serve as a TV remote control. It also used infrared sensing to support AirView gestures made in front of the display, along with Eye Tracking and a Quick Glance proximity sensor that lit up the screen to check notifications. The Galaxy S4 was also the first model to diverge from just "slavishly copying" Apple's industrial design.

These factors led much of the tech media to conclude that Apple's iPhone empire was being Disrupted by more agile and innovative Android competition. It was also popular at the time to suggest that Android looked more modern, thanks in part to Samsung's early use of its own vibrantly oversaturated OLED panels. Android was also seen as better integrated with Google's latest services, while Apple's iOS 6 was portrayed as plagued with issues, such as the new Apple Maps actually killing people (journalists actually wrote that people driving into the desert without any water were potentially lethal victims of Apple's map errors), and iOS's overall appearance was said to look dated.

Narratives in flux

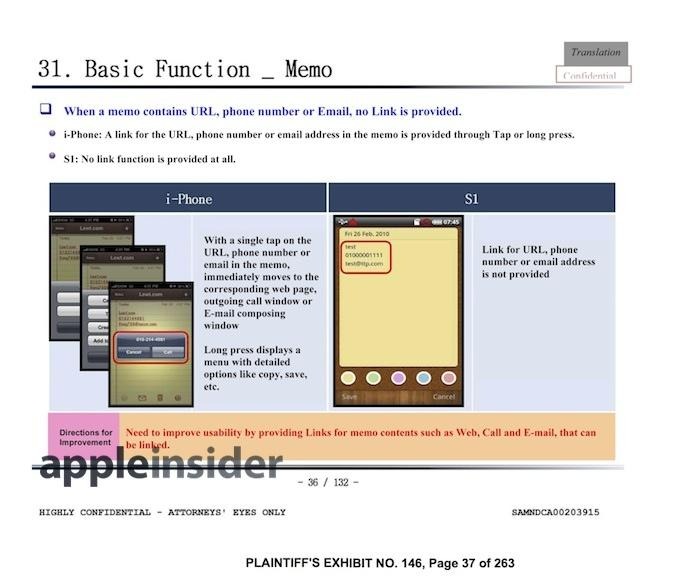

However, flaws quickly started showing up in Samsung's narrative of having a distant technology lead over Apple. As various patent infringement suits between the two companies dragged on, it was revealed how much of Samsung's work had been directly taken from Apple. Further, the more Samsung tried to pursue its own technical initiatives, the more lost it got. Even tech media outlets that generally republished its positive press releases without any criticism began dismissing Samsung's unique product features as gimmicky and 'rough around the edges.'

It was also becoming increasingly clear that it wasn't Apple being Disrupted; if anything, it was the generic PC market that was being gutted by the incredible expansion of iPad as a new product category. For some reason, while Samsung appeared to be catching up to Apple in phones, it wasn't performing well in tablets at all. That was despite, or perhaps because of, its pursuit of an incredible array of different tablets and netbooks running everything from Chrome OS to Android to Windows.

Just barely into 2013, it was widely held among Android Enthusiasts that Samsung was rising up to fight back against the iPhone establishment, a sort of patron saint for Android. That was crazy because it had only been a few years since Samsung's rather comfortable existence as a large commodity handset maker building Symbian, Windows Mobile, and JavaME phones had been defeated and embarrassed by Apple's new iPhone.

It was as if bloggers were performing a version of "The Empire Strikes Back" in which Darth Vader leads a scrappy band of resistance fighters.

The first to failure

Samsung couldn't possibly have seen itself as an upstart rival. It was Apple that had pushed into Samsung's territory with the introduction of the iPhone, many years after Samsung's first smartphones debuted. Samsung had also long worked with Microsoft to develop Tablet PCs. It must have been devastating to see a new company — and a primary component customer — pull out a very thin new tablet with long battery life and buttery-smooth animated touch navigation, delivering a product that made Samsung's Tablet PCs look like a clumsy old joke.

Samsung had always been a copycat in design, but its copying was an attempt to follow ideas that had been proven successful. Investing in original ideas is very risky — it costs a lot more to get started and everything is on the line if the idea fails.

Apple made original invention look effortless: desktop Macs, iPod, the new iPhone, and now iPad. Those were all huge investments in a complete idea, with little margin for failure. Samsung didn't want to risk as much on radical big thinking because it had huge supply lines to maintain and existing customers to service.

Samsung's conservative approach did indeed limit its losses. Google's forays into Google TV, Chromebooks, and Honeycomb Android tablets had all turned out to be major flops. Microsoft was in the process of failing miserably in its multi-billion dollar campaigns to launch the "Metro UI" for Zune, KIN, Windows Phone, Surface RT, and Windows 8. Launching an entirely new user interface was vastly more difficult than Apple had made it look.

After watching Apple take off in mobile devices with iPhone and then iPad, Samsung belatedly stepped up its efforts to catch up to Apple in the advanced silicon needed to power new devices. Exynos 5 Octa was supposed to establish that Apple wasn't so far ahead as it might appear.

However, it turned out that Samsung's Exynos implementation of Cortex-A15 had some issues. Problems began appearing in versions of its Galaxy S4 using Exynos 5 Octa chips. AnandTech described the issue as "a broken implementation of the CCI-400 coherent bus interface," with "implications [that] are serious from a power consumption (and performance) standpoint."

The site added that "neither ARM nor Samsung LSI will talk about the bug publicly, and Samsung didn't fess up to the problem at first either - leaving end-users to discover it on their own." Despite being a serious issue, Samsung's silicon problem wasn't widely reported.

Fake failures, real success

Apple, on the other hand, was hit with one overblown "crisis-gate" media circus after another, without any apparent impact. Apple's products increasingly came to be viewed as higher quality, with notable exceptions too rare to be of much concern.

In the years following Steve Jobs' death, Apple faced the incredibly difficult task of maintaining the pace of Jobs' last decade, which had introduced iPod, Mac OS X, iPhone, iOS, and iPad. Additionally, it had to chart a new future that required some breaks from the past while retaining the recognizable familiarity of its brand, all without alienating its existing user base.

In the fall of 2012, Tim Cook's new Apple released a smaller iPad mini that invited "Steve Jobs would never!" comments from the press, despite it having none of the issues of the "tweener" 7-inch tablets Jobs had criticized as "dead on arrival" in 2010.

At the beginning of 2013, Buzz Marketing Group orchestrated a viral "Are iPhones uncool to kids?" campaign that helped create millions of Google search results surrounding the talking points that "Teens are telling us Apple is done. Apple has done a great job of embracing Gen X and older [Millennials], but I don't think they are connecting with Millennial kids [who are] all about Surface tablets/laptops and Galaxy."

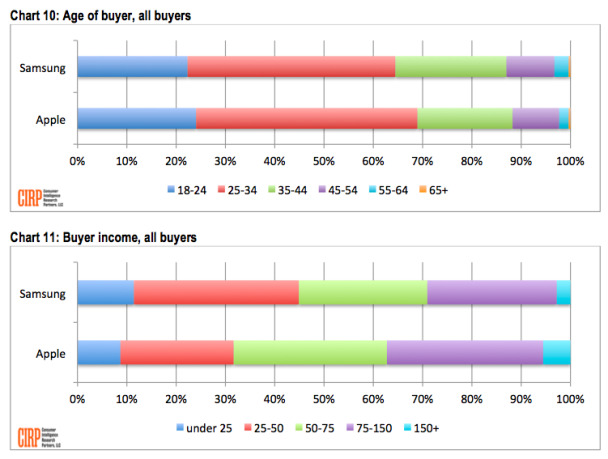

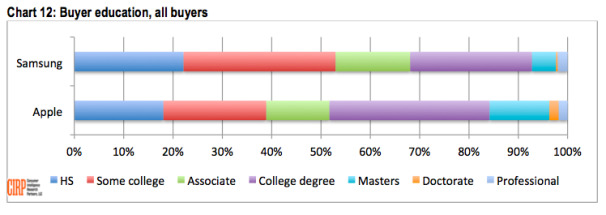

Samsung created ads suggesting that iPhones were for olds and that young people were hip to its copycat brand. Yet data from Consumer Intelligence Research Partners showed that iPhone users were not only more likely to be affluent and better educated, but that iPhones were also more popular among younger users, while Samsung was the more popular brand among older people.

In the summer of 2013, Apple unveiled iOS 7, a boldly fresh new refinement of the user interface and appearance of Apple's iOS, complementing the clean lines of its iPhone 5 and breaking from the glossy, "lickable" skeuomorphic photorealism that had defined Apple's look in the 2000s.

After the backlash that greeted the rehash of Windows Vista and the crickets that followed the facelift of Windows Phone, we held our breath in anticipation of how the global public would respond to such a fundamental shift on iPhones. It turned out that Apple's younger customers desperately wanted the fresh and new, even if older media critics pined for the past and worried aloud about its new "buttonless buttons" and the risk of vertigo from its parallax animations.

That fundamentally describes much of the media's conservative doubt about everything Apple does, despite the company's increasingly solid record of success in knowing what consumers want and how to deliver it. In contrast, the tech media was generally liberal in throwing excited support behind competitive efforts from Google, Samsung, Microsoft, Xiaomi, and even Huawei, despite their terrible records of launching consumer products that were good, popular, original, and enduring.

The new iOS 7 wasn't the only surprise Apple would drop on the mobile industry in 2013.

A7 drops out of nowhere

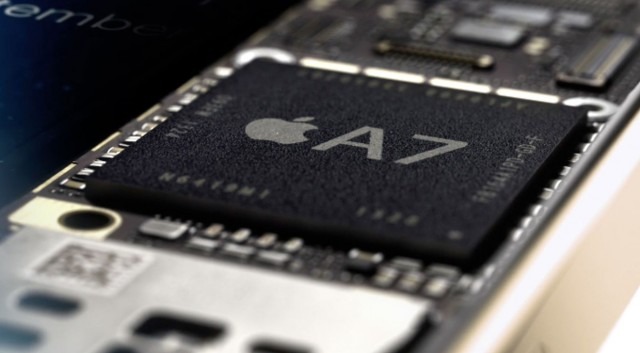

Apple ultimately didn't deliver a Cortex-A15 design as expected. Instead, as Samsung was trumpeting "8 cores" and trying to cover up its Exynos chip design flaws, Apple casually announced at the introduction of iPhone 5s in late 2013 that it was delivering the world's first 64-bit mobile SoC: the new A7.

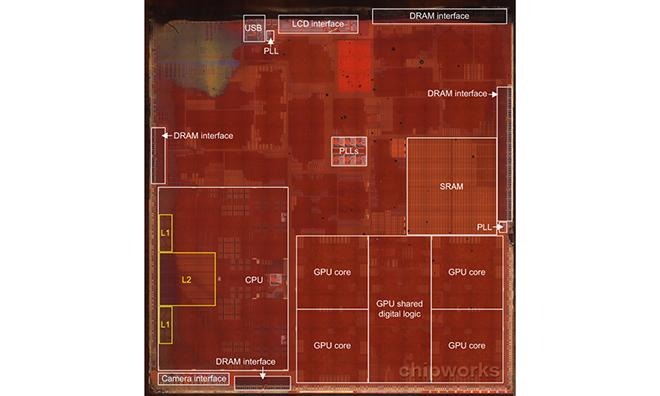

In addition to its dual-core 64-bit "Cyclone" CPU cores, A7 also delivered the Secure Enclave used to protect Touch ID biometric data, and appeared to be the first to use Imagination Technologies' advanced new PowerVR Series6 Rogue GPU architecture, providing new support for OpenGL ES 3.0.

No other mobile handset chip designer even had 64-bit CPU cores on its near-term road map. Anand Chandrasekher, the senior vice president and chief marketing officer at Qualcomm, downplayed the importance of Apple's 64-bit A7, stating in an interview, "I think they are doing a marketing gimmick. There's zero benefit a consumer gets from that."

Tech journalists were also quick to dismiss Apple's announcement of the 64-bit A7 as nothing to worry about. Stephen Shankland warned his CNET audience, "don't swallow Apple's marketing lines that 64-bit chips magically run software faster than 32-bit relics," and claimed that "64-bit designs don't automatically improve performance for most tasks."

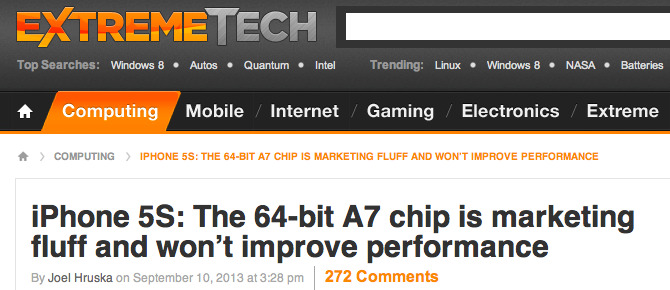

An even more incendiary report by Joel Hruska of ExtremeTech insisted "the 64-bit A7 chip is marketing fluff and won't improve performance." Hruska even boldly claimed that "the major reasons for adopting 64-bit architectures simply aren't present in mobile devices."

Days later, Qualcomm officially announced that Chandrasekher's comments were "inaccurate," and ultimately removed him as CMO. The journalists who wrote that Apple's 64-bit SoC was "marketing lines" and "fluff" kept their jobs. There wasn't even a correction posted on the false articles.

It was later revealed that inside Qualcomm, the launch of Apple's A7 had "hit us in the gut." A source inside the company stated, "we were slack-jawed, and stunned, and unprepared," and added that "the roadmap for 64-bit was nowhere close to Apple's, since no one thought it was that essential."

Yet again, Apple had a series of strategic goals it was delivering in silicon that were unrelated to what the rest of the industry was doing. Apple also had clear insight into whether or not a 64-bit SoC would be beneficial in accelerating apps. Qualcomm didn't run an App Store and wasn't maintaining a consumer mobile operating system.

Apple's transition guide told its iOS developers, "Among other architecture improvements, a 64-bit ARM processor includes twice as many integer and floating-point registers as earlier processors do. As a result, 64-bit apps can work with more data at once for improved performance.

"Apps that extensively use 64-bit integer math or custom NEON operations see even larger performance gains. [...] Generally, 64-bit apps run more quickly and efficiently than their 32-bit equivalents. However, the transition to 64-bit code brings with it increased memory usage. If not managed carefully, the increased memory consumption can be detrimental to an app's performance."

It was quite incredible that a variety of bloggers, armed only with a superficial grasp of what "64-bit" meant, were insisting to their readers not only that Apple had squandered its lead in silicon to deliver a meaningless advancement for marketing purposes, but also that it was telling its developers to waste their time optimizing their code for new silicon that wouldn't deliver any benefit at all. Suggesting that Apple's entire strategy was a lie was a breathtaking level of arrogance, but one that would keep getting repeated.

The wider architecture of A7 also allowed Apple to once again use the same chip to deliver iPad Air, its slimmed-down, fifth-generation Retina Display iPad. A slightly slower version powered a new Retina Display iPad mini 2. Both models took iPad upward in features just as Google was doubling down on its ultra-cheap Nexus 7 strategy for Android. The result associated iPad with a premium experience and linked Android with low-quality and problematic budget devices.

The rise of Metal

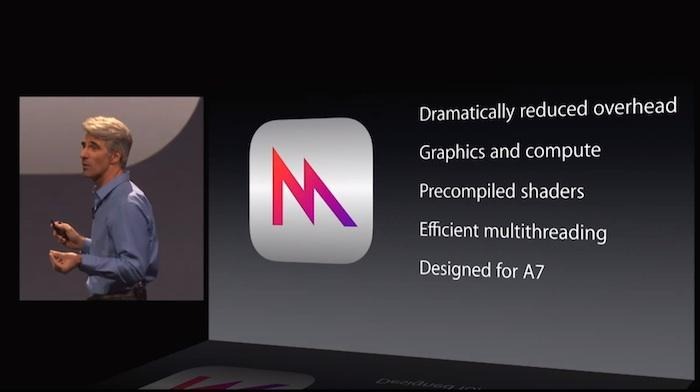

Beyond its new 64-bit CPU cores, the new A7 also incorporated an advanced new class of GPU based on Imagination's PowerVR Rogue architecture. It was designed to support OpenGL ES 3.0 as well as "GPGPU," meaning general-purpose, non-graphical compute tasks. This would theoretically support OpenCL, which Apple had earlier introduced on the Mac and shared as an open standard.

However, rather than porting OpenCL to iOS as a public API, Apple had started work on what would become Metal, a new optimized API for both graphics and GPGPU. Apple would ship tens of millions of A7 devices before unveiling the new software the next year, designed to dramatically enhance the graphics and compute abilities of apps running on the A7 and its successors.

As with the original App Store, or the development of A4, iPad, Retina display, the unique Swift core of A6, and the 64-bit CPU design of A7, the development of Metal remained a secret until Apple was ready to deploy it, catching industry analysts, its competitors, and even its own developers by surprise.

LLVM compiler optimized code for Apple's silicon

Apple's A7 advancements in 64-bit CPU and Metal-accelerated graphics were both enabled by another low-level layer of technology Apple uniquely invested in early on: the code compiler that optimized software to run on its silicon and with its OSs.

In 2008 AppleInsider described the LLVM Compiler as Apple's open secret after the company detailed its plans for replacing the standard GCC with an entirely new, advanced code compiling architecture based on work originating at the University of Illinois in a research project by Chris Lattner.

LLVM played an important role in enabling Apple to support new silicon designs and to optimize existing third-party code to take full advantage of them, including the shift to 64-bit and Metal graphics. And while an original intent of LLVM was to bring the Mac's Objective-C language up to par with other languages in compiler sophistication, its development ultimately resulted in Swift, a new language that shared its name with Apple's unrelated A6 core design. Lattner's group created the Swift language to support new, modern programming features that would have been difficult to shoehorn into Obj-C.

Apple shared the Clang LLVM front end compiler for C, C++, and Objective-C as an open-source project in 2007, but it wasn't until 2012 that it started to be widely adopted by various Unix distributions. It didn't become the default compiler for Android until 2016. In part, that's because Google wasn't creating Android to be a great product, but rather to simply serve as a good enough product to facilitate the development of a mobile platform it could tap into as an advertising platform without any restrictions imposed by Microsoft or Apple.

Again, while Apple was hyper-optimizing iOS code to compile specifically for the subset of silicon it was designing and building, Android was hosting apps running in a Java-like runtime designed to be generic enough to run anywhere, the opposite of being optimized.

Nvidia graphically beat to 64-bits

Apple's ability to "walk right in" on silicon design starting in 2008 and ultimately beat the entire industry to a 64-bit mobile architecture was particularly embarrassing to Nvidia, for which advanced chip design had been a core competency since the 1990s.

In 2006, Nvidia had acquired Stexar, a startup that had been working on x86-compatible silicon using binary translation. Nvidia had planned to use the technology to deliver a chip that could run both x86 and ARM instructions, but after failing to get a license from Intel, the company refocused on using it to deliver a 64-bit ARM processor it called Project Denver. The company first publicly announced its Denver plans in 2011, after five years of internal work involving silicon experts with experience at Transmeta, Intel, AMD, and Sun.

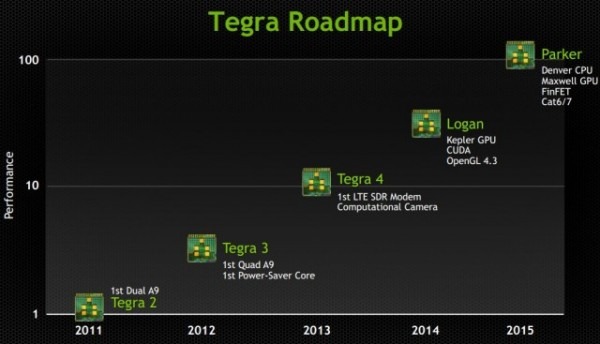

Nvidia announced that Denver would "Usher In New Era Of Computing," stating that "an ARM processor coupled with an NVIDIA GPU represents the computing platform of the future." It imagined that its Denver 64-bit ARM architecture would soon power mobile computers, PCs and even servers. The company laid out detailed plans to release "Parker," a Tegra chip pairing its 64-bit Denver CPU with Maxwell graphics, by 2015.

Despite all of Nvidia's public 64-bit grandstanding, Apple waltzed right past it without any advance warning and delivered a functional 64-bit processor small and efficient enough to run iPhone 5s and powerful enough for its high-end new iPad Air tablet. Nvidia's first 64-bit Denver-based chip wouldn't ship until a full year later, at the end of 2014, in Google's poor-selling Nexus 9 tablet. It was too big and hot to put in a phone.

Apple's custom A4-A6 silicon work had trounced Nvidia's own Tegra efforts over four generations, and now A7 was embarrassing the project Nvidia had been crowing about for years. Apple's increasingly powerful custom ARM CPU cores were also being paired with Imagination's PowerVR GPUs, a direct competitor to Nvidia's mobile graphics architectures.

Apple's ability to design better silicon and then ship it in vastly higher volumes meant that rather than Denver helping Nvidia to establish its GPU as "the computing platform of the future," Apple's A7 and its successors would sideline Project Denver and Nvidia's mobile GPUs into a minor, inconsequential niche.

On top of this, Nvidia's GPUs had been failing in Apple's MacBook Pros, prompting Apple to remove Nvidia from its entire Mac lineup. Before Denver shipped, Apple would also release its new Metal API, which took direct aim at Nvidia's CUDA platform, seeking to establish itself as the way to optimize code for mobile graphics. A year later, Apple would expand Metal to its Macs. This year, Apple is launching Mac Pro as part of its Metal strategy for high-end workstation graphics, a move that would have been unthinkable just a few years ago.

The foundations of Apple's Mac Pro strategy playing out today were built and paid for by hundreds of millions of iPhone and iPad sales that occurred years ago, enabling the company to advance ahead of established rivals in silicon, in compilers, in graphics APIs, and in operating systems, all of which were largely taken for granted back in 2013. If Apple found it so easy to sidestep Google, Microsoft, Samsung, Nvidia, Qualcomm, and Texas Instruments six years ago, imagine how it can wield its current technology to achieve advances in other areas today. Most tech thinkers have been entirely incapable of even fathoming this concept, and investors still value Apple as if it were a commodity steel mill about to go out of business.

Samsung thrown for a loop by 64-bits

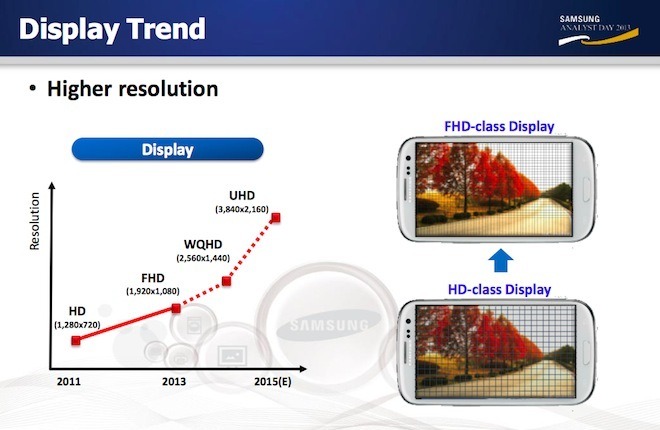

It wasn't just Qualcomm and Nvidia who were thrown into a panic by Apple's aggressive silicon moves. Just a few months after Apple's introduction of the A7, Samsung addressed investors' 64-bit concerns at its 2013 Analyst Day event. The company laid out various visions for the future, promising that it would "play a key role in the premium smartphone market."

That included detailed plans to introduce mobile devices with extremely high-resolution UHD 3840x2160 displays by 2015, although even its newest Galaxy S10 and Note 10 don't use such panels today in 2019. The company had less to say about its roadmap and timeline for 64-bit SoCs.

On that subject, Dr. Namsung Stephen Woo, the president of Samsung's System LSI fab, stated, "many people were thinking 'why do we need 64-bit for mobile devices?' People were asking that question until three months ago, and now I think nobody is asking that question. Now people are asking 'when can we have that? And will software run correctly on time?'"

Woo added, "let me just tell you, we are... we have planned for it, we are marching on schedule. We will offer the first 64-bit AP based on ARM's core [reference design]. For the second product after that we will offer even more optimized 64-bit based on our own [custom core design] optimization. So we are marching ahead with the 64-bit offering."

Despite being behind with no clearly defined timetable for delivering anything, Samsung's chip fab president still claimed to be "at the leader group in terms of 64-bit offerings." It would ultimately ship its first custom core design two years later. Those two years would end up pivotal in the mobile silicon race, as the next segment will detail.

Daniel Eran Dilger