It began, as so many Apple stories do this close to a product unveiling, with a rumor.

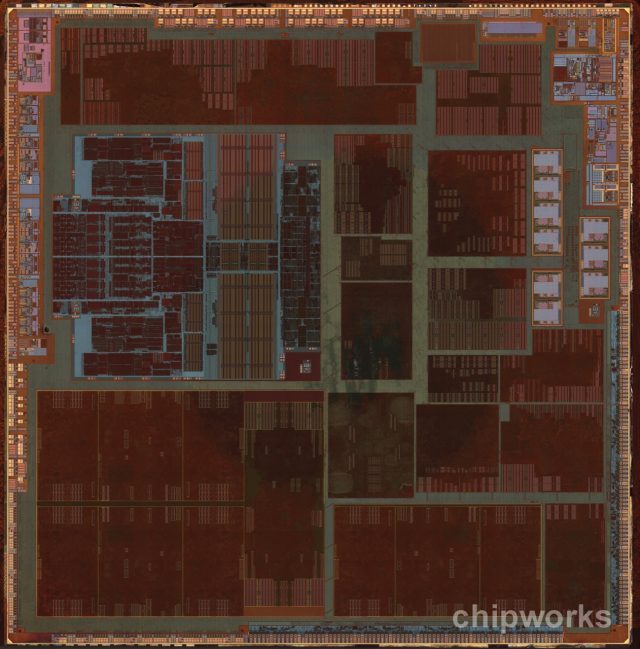

This rumor was about the new A7 system-on-a-chip, which is thought to be part of the new flagship iPhone that Apple will apparently be unveiling on September 10. Apple very rarely talks about the specifics of its custom SoCs, even after it announces them. We're usually told what percentage faster a new chip is than the previous one, and then we're left waiting for someone like Chipworks to get a good die shot of the SoC before we learn more about what's going on inside.

The rumors in question say that the A7's CPU will be "about 31 percent faster" than the CPU cores in the current A6, a number that has prompted some mild hand-wringing about "flagging innovation." The A5's CPU was twice as fast as the A4's, and the A6 doubled that theoretical performance again. In light of those advancements, isn't 31 percent a bit disappointing?

Assuming those A7 numbers are accurate, they don't actually represent a "huge failure" on Apple's part, even if the gain over the previous generation is smaller than it used to be. Let's talk a little about how Apple could hit those numbers, why they aren't higher, and just what you can expect from a next-generation Apple SoC.

The maturing of a market

Thirty-one percent. It doesn't sound like a big generational jump given the 100 percent leaps between the A4 and A5 and between the A5 and A6. Assuming these numbers are correct, it's a safe bet that Apple will continue to use a dual-core CPU configuration in the A7 rather than moving to a quad-core configuration.

If you want to see how Apple might realize these performance improvements, there's no better example than Qualcomm. Qualcomm created its current Krait 300 and 400 architectures by making relatively minor tweaks to the Krait 200 (née Krait) architecture in last year's Snapdragon S4 Plus and Pro SoCs. By making similar tweaks to its custom Swift CPU architecture, edging clock speeds upward slightly (as Qualcomm did in the jump from the S4 Pro to the Snapdragon 600), and increasing memory speeds (a jump from DDR2 to DDR3 seems realistic), the A7 could hit that 31 percent number without significantly increasing its power draw.
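To make the arithmetic concrete: modest gains from several sources compound. The sketch below multiplies a few per-factor speedups together. The split between microarchitecture tweaks, clock speed, and memory is entirely hypothetical (Apple has published no such figures), and treating the factors as independent and multiplicative is a simplification that real workloads only roughly follow.

```python
from math import prod


def combined_speedup(factors: dict[str, float]) -> float:
    """Multiply per-factor speedups (1.10 means 10 percent faster).

    Simplifying assumption: the factors don't interact, so their
    effects compound multiplicatively.
    """
    return prod(factors.values())


# Hypothetical split for illustration only; not reported A7 figures.
hypothetical_a7 = {
    "microarchitecture tweaks (per-clock performance)": 1.10,
    "slightly higher clock speed": 1.10,
    "faster memory (e.g. DDR2 to DDR3)": 1.08,
}

speedup = combined_speedup(hypothetical_a7)
print(f"Combined: {speedup:.4f}x, about {100 * (speedup - 1):.0f} percent faster")
```

Three single-digit or low-double-digit improvements are enough to land near 31 percent, which is why none of them individually needs to be dramatic.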

And, really, a 31 percent jump between generations is pretty good. It's larger than the performance jump that Intel delivered between Sandy Bridge and Ivy Bridge or between Ivy Bridge and Haswell—the days when CPU performance could double in a single year have long been over for desktop and laptop CPUs. And while we've grown accustomed to rapid progress in phone and tablet chips, we'll begin to see things slow down over the next year or two. Even with advances in manufacturing technology, it's simply not possible to continue with the kind of performance increases we've seen since the iPhone launched in 2007 without running up against power, thermal, and space constraints. If the A7 is 31 percent faster than the A6, it doesn't represent some kind of failure on Apple's part—it's just the new normal.

Four cores are better than two (but you don’t always need them)

But what of the specification race? Won't the newest iPhone fall behind if it uses a dual-core CPU when most of its competitors are using a quad-core CPU? Again, a peek over to the Android side of the fence is instructive here.

Consider the Moto X. It uses the same speedy Adreno 320 GPU as other high-end phones like the Galaxy S 4 and the HTC One, but it cuts the number of CPU cores from four to two. Despite this, we found the Moto X to be perfectly speedy in our review, and AnandTech has dug even deeper into the way the chip behaves under a sustained load.

In essence, the dual-core SoC in the Moto X can run at its highest-rated 1.7GHz frequency for longer than the Snapdragon 600 SoC in the Galaxy S 4 can run at its top speed of 1.9GHz; the quad-core chip throttles down to a lower speed during periods of sustained activity. Thus, the dual-core chip may actually complete lightly threaded tasks as quickly as, or slightly faster than, the quad-core chip. On the iPhone side, iOS is much more restrictive than Android is when it comes to background tasks, so even if you ran iOS on a quad-core CPU, you might not notice much of a difference in performance because of the way the operating system works.
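A toy model shows how throttling can erase a clock-speed advantage. It assumes both chips do the same work per clock on a single busy core (both use Krait-family cores, but equal per-clock performance is still an assumption), and the 10-second throttle point and 1.4GHz throttled clock are hypothetical values chosen for illustration, not measurements of the Galaxy S 4.

```python
from typing import Optional


def completion_time(
    work_gcycles: float,
    peak_ghz: float,
    throttle_after_s: Optional[float] = None,
    throttled_ghz: Optional[float] = None,
) -> float:
    """Seconds for one core to retire `work_gcycles` billion cycles.

    The core runs at peak_ghz until throttle_after_s seconds have passed,
    then drops to throttled_ghz for the rest of the task. Passing None
    models a chip that holds its peak clock indefinitely.
    """
    if throttle_after_s is None or throttled_ghz is None:
        return work_gcycles / peak_ghz
    done_at_peak = peak_ghz * throttle_after_s
    if work_gcycles <= done_at_peak:
        # The task finishes before the chip ever throttles.
        return work_gcycles / peak_ghz
    return throttle_after_s + (work_gcycles - done_at_peak) / throttled_ghz


for work in (5, 60):  # a short burst versus a sustained load
    dual = completion_time(work, peak_ghz=1.7)
    quad = completion_time(work, peak_ghz=1.9, throttle_after_s=10, throttled_ghz=1.4)
    print(f"{work} Gcycles: dual-core {dual:.1f} s, quad-core (throttling) {quad:.1f} s")
```

Under these assumptions the faster-clocked quad-core chip wins the short burst but falls behind once it throttles during the long task.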

None of this is to say that a dual-core chip is better for performance than a quad-core CPU. Given properly optimized, well-threaded code, more (if slower) cores can get things done more quickly than fewer, faster cores. Operating systems like Android that are more permissive when it comes to background tasks are also going to benefit from having more cores to work with. It does mean that a hypothetical next-generation iPhone with a hypothetical dual-core A7 won't necessarily feel slow compared to Android flagships just because it's missing two CPU cores, though.
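How much "properly optimized code" matters can be sketched with Amdahl's law, a standard model we're swapping in here rather than anything Apple or Qualcomm has described. It compares two cores at a baseline speed against four cores that are each 20 percent slower; that 0.8 figure is a hypothetical choice, and the model ignores throttling, memory contention, and background work.

```python
def runtime(parallel_fraction: float, cores: int, per_core_speed: float) -> float:
    """Amdahl's-law runtime relative to a single baseline core (speed 1.0).

    The serial part runs on one core; the parallel part splits evenly
    across all cores. Everything is scaled by per-core speed.
    """
    serial = 1.0 - parallel_fraction
    return (serial + parallel_fraction / cores) / per_core_speed


for p in (0.2, 0.5, 0.9):
    two_fast = runtime(p, cores=2, per_core_speed=1.0)
    four_slow = runtime(p, cores=4, per_core_speed=0.8)  # hypothetical speed
    winner = "four slower cores" if four_slow < two_fast else "two faster cores"
    print(f"parallel fraction {p:.0%}: {winner} finish first")
```

With these numbers the crossover sits at a parallel fraction of 4/7 (about 57 percent): below it the two faster cores win, above it the four slower ones do.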

Finally, it has been said before, but it's worth saying again: Apple's tight integration between hardware and software can help mask its generally lower CPU performance relative to competing Android handsets. If a particular feature runs poorly on an A4 or an A5, Apple can easily implement a fix that smooths things out for that particular device. This is what keeps devices like the iPad mini feeling responsive despite using an SoC that's two-and-a-half years old. Such is the benefit of supporting a limited pool of hardware.

The GPU

All of this CPU talk overshadows an area to which Apple has always devoted most of its attention anyway: the GPU. You see it over and over again in the company's recent chips. The A5, the A5X, the A6, and the A6X have all paired "good enough" CPU performance with category-leading (at the time) GPU performance.

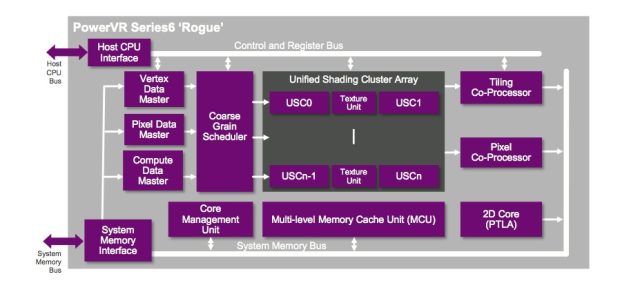

Assuming Apple doesn't switch GPU providers for the A7, the timing is right for Apple to make the jump to Imagination's next-generation Series 6 GPUs, codenamed Rogue. Imagination has made lofty claims about both the performance ("can deliver 20x or more of the performance of current generation GPU cores targeting comparable markets") and efficiency ("around 5x more efficient than previous generations") of its Rogue architecture. Without more information about which GPUs Imagination is comparing its architecture to (and without information about which of the available Series 6 GPUs Apple might use) we can't make direct comparisons between a hypothetical Series 6-packing A7 and the current A6 (which uses a tri-core PowerVR SGX 543MP3 GPU). However, assuming Apple takes this step with the A7, expect GPU performance to leap forward where CPU performance merely hops.

The Series 6 GPUs also support a number of new APIs, all of which could improve the performance (and visual quality) of the iOS user interface as well as its applications and games: OpenGL ES 3.0 (recently added to Android in version 4.3), OpenGL 3.x and 4.x, and OpenCL 1.x (the latter of which could bring GPU-assisted computing to iOS for the first time). Unified shaders help to reduce the size of the GPU relative to something like the GPU in Nvidia's Tegra 3 or Tegra 4 SoCs, which still require the use of different kinds of shaders to perform different tasks.

And, of course, expect Apple to pack a hypothetical next-generation, full-size iPad with some kind of "A7X" variant similar to the A5X and A6X. In both of those cases, Apple preserved the same CPU configuration as in the standard A5 and A6 (dual-core Cortex A9 for the former, dual-core Swift for the latter) while bolting on a much larger GPU and a wider memory bus to feed the iPad's higher-resolution screen. iPhones and iPads can only share an SoC when they also share comparable screen resolutions (the original iPad and the iPhone 4 both used the A4; the iPhone 4S, iPad 2, and iPad mini all use the A5). Retina iPads simply need more performance than the standard SoCs can comfortably provide.
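Some quick pixel arithmetic shows why a Retina iPad needs its own chip. The resolutions below are the devices' native screen sizes; the 32-bit framebuffer and 60fps full-screen redraw are simplifying assumptions, and real rendering touches each pixel several times (overdraw, blending, compositing), so the bandwidth figure is only a rough lower bound for redrawing the whole screen every frame.

```python
# Native screen resolutions (width, height) of devices discussed above.
SCREENS = {
    "iPhone 4 / 4S": (960, 640),
    "iPad / iPad 2 / iPad mini": (1024, 768),
    "iPhone 5 (A6)": (1136, 640),
    "Retina iPad (A5X / A6X)": (2048, 1536),
}

BYTES_PER_PIXEL = 4  # assumption: 32-bit RGBA framebuffer
FPS = 60  # assumption: full-screen redraw every frame


def pixels(name: str) -> int:
    width, height = SCREENS[name]
    return width * height


def one_pass_bandwidth_gb_s(name: str) -> float:
    """GB/s needed just to write every pixel once per frame at FPS."""
    return pixels(name) * BYTES_PER_PIXEL * FPS / 1e9


for name in SCREENS:
    print(f"{name}: {pixels(name):,} pixels, at least {one_pass_bandwidth_gb_s(name):.2f} GB/s")

ratio = pixels("Retina iPad (A5X / A6X)") / pixels("iPhone 5 (A6)")
print(f"A Retina iPad pushes {ratio:.1f}x the pixels of an iPhone 5")
```

The non-Retina iPads have only about 28 percent more pixels than the iPhone 4 and 4S, which is why they could share an SoC; the Retina iPad has more than four times the pixels of an iPhone 5.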

Specifics to follow

What we've laid out here is what we believe to be the most likely scenario based on the timing, Apple's past behavior, and the (admittedly scant) information available. Whatever the specifics, you can expect Apple to employ the same general approach to the A7 that it has applied to everything since the A5: give it a GPU that can hold its own in the spec race along with a CPU that isn't at the top of the range but is more than good enough for iOS.

There are plenty of other suppositions we could explore: what if Apple gains additional thermal and power headroom because it switches manufacturing processes? (Possible, but in the past Apple has tried new processes in lower-volume products first.) What if the company switches from Imagination's GPUs, or if its recent GPU hires mean that we get a custom GPU architecture? (Unlikely; many of those hires were made very recently, and chip design takes time.) What if Apple goes with a 64-bit architecture? (Again, possible, though I've yet to see a compelling argument made in favor of it.) All of these factors could add interesting wrinkles to the A7, but we wouldn't expect any of them to change the chip's basic composition.

I should stress that virtually none of the preceding speculation is based on anything I know for sure—Apple doesn't tell me about its chips or devices ahead of time. We don't even know for sure that Apple will actually be having a media event on September 10. We don't know that it will launch an iPhone at that event, if the event does in fact happen, and we certainly don't know whether that phone will include a new chip or anything about that hypothetical new chip's capabilities. As usual, we'll keep following the chip as more (or as any) facts are unveiled, and we'll dig into how it performs when we have Apple's next iPhone in our grubby hands.
