Things IBM invented or that wouldn’t exist without it:

Punch cards

The US Social Security System

The hard drive

SABRE global travel reservation system

Barcodes

The Apollo Program’s computers that helped land the first humans on the moon

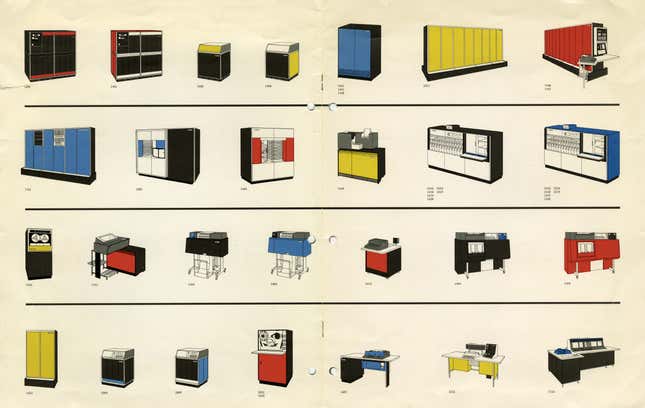

The mainframe computer

The magnetic stripe on credit cards

The personal computer

LASIK laser eye surgical tool

The floppy disk

Wi-Fi

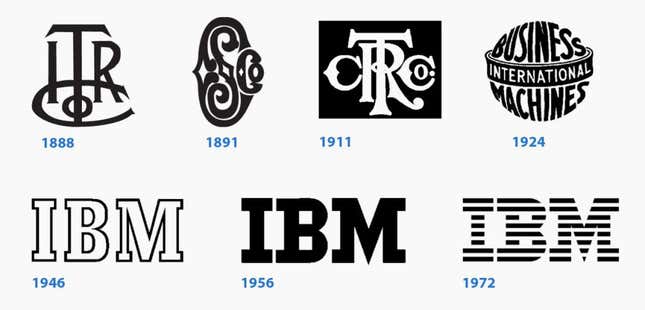

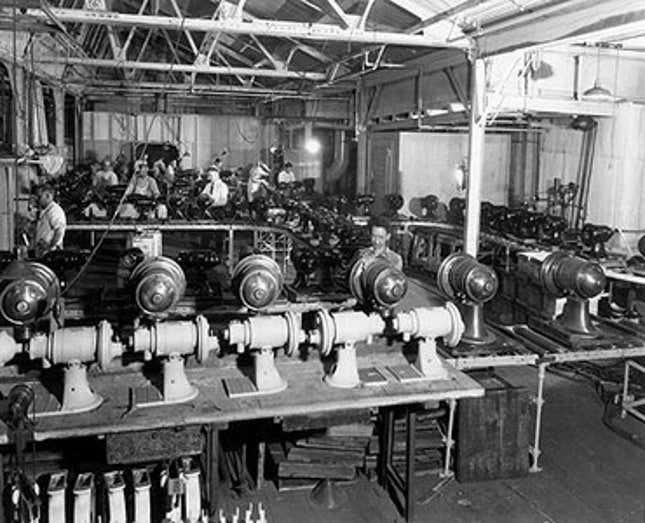

IBM has shed its skin so many times it’s hard to believe that this 104-year-old company started life making meat grinders and cheese slicers. Since then, its core business has at different times been punch card machines, clocks, mainframes, and personal computers, and it’s now essentially a $93 billion-a-year enterprise software company, helping equally monolithic firms manage their businesses slightly more effectively than before.

Yet IBM’s revenues have fallen each of the last 14 quarters and its stock price is knocking around at a five-year low—lagging behind not only tech darlings like Google and Apple but older, stodgier rivals like Microsoft, Oracle, and HP. Critics say it adapted too late to technological shifts such as cloud computing. Prophesying the company’s inexorable decline has become something of a journalistic sport.

Part of IBM’s response to this doomsaying is contained in a building that looks like a three-story-high flying saucer, perched on top of a hill in the middle of 240 acres of grassland and forest in upstate New York. Its designer was Eero Saarinen, the man behind St. Louis’ Arch and the TWA terminal at JFK Airport, and its curved, glass façade is as imposing and striking in 2015 as it was when it opened decades ago.

This is IBM’s Thomas J. Watson Research Center in Yorktown Heights—the crown jewel of its network of research facilities. It’s a world away from the corporate headquarters, just 11 miles down the road in Armonk. Inside, tucked away in tiny laboratories, IBM has scientists working, as it has for the last 54 years, on projects that could give it another world-altering technology, like those in the list above.

The most public success to come out of the labs in recent years is Watson, the cognitive intelligence system that once beat a bunch of smart humans on the quiz show Jeopardy. (Like the research center, Watson is named for IBM’s first two CEOs, not for Sherlock Holmes’ sidekick.) In April, CEO Ginni Rometty told Charlie Rose that Watson was her “moonshot.” As IBM pitches it, Watson has the power to wrangle all of the world’s data into usable information, and it can reason and learn, rather like a human can, just faster.

Yet Watson is by no means the most cutting-edge of IBM’s projects. In Yorktown Heights, IBM’s material scientists, physicists, electrical engineers and chemists are working on dozens of projects. These include artificial intelligence that might eventually mirror the human brain; nanometer-wide carbon nanotubes that could conceivably revolutionize computer chips; and quantum computers that may one day rewrite the rules of computing altogether. Any one of these fantastical-sounding projects, which have been years, sometimes decades in the making, could literally transform the power of computing to enhance human intelligence if IBM’s scientists can crack them.

The reason IBM can tackle these projects is the reason it was able to invent punch cards, and the hard drive, and everything else: patient, methodical and precise scientific research. IBM spent $5.5 billion on research and development in 2014 alone, and unlike with some of the modern tech giants, there’s no pomp and circumstance around each newly launched “project,” no elevation of designers to the status of demigods, no race to build the next consumer blockbuster. This is science for science’s sake. It’s how scientific breakthroughs are made. For IBM, there has always been something peeking up at the horizon’s edge, some initiative that will one day usurp the rest of the business and ensure profitability for another decade or two or five.

The question is, will that be enough this time?

Watson has nothing on what’s coming next

At a recent Watson conference in New York, a man who’d flown in from Denmark sat next to me. “In other years I wouldn’t have thought to come to this,” he said, “IBM was a company that made mainframes. But this is interesting.”

Watson is certainly cool.

Watson was born eight years ago. In February 2011, the Watson research center’s mid-century auditorium was transformed to look like the set of Jeopardy. Watson was to take on two of the game show’s most successful human competitors of all time—Ken Jennings and Brad Rutter—and it was easier to bring host Alex Trebek and his set to suburban New York than take Watson to California. After a few early missteps, Watson showed its strength and ended up crushing the human competition.

At the time, Watson was the size of a master bedroom, with a box the size of a fridge for a face. It comprised 90 IBM Power 750 servers with a combined 2,880 cores and 16 terabytes of memory, and cost $3 million to build. Its initial developers wanted to create something on the scale of IBM’s Deep Blue, the supercomputer that beat Garry Kasparov at chess in 1997. They chose Jeopardy because, to them, the answers-as-questions style of trivia featured on the game show represented a “unique and compelling AI question,” as it required understanding context, puns and lateral thinking, all things that humans tend to be better at than computers. To win, they needed to build a system that would be able to answer about 70% of the questions thrown at it, with more than 80% certainty that the answer it had was correct, in less than three seconds. Because that’s what a human Jeopardy champion can do.
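One way to picture that target is as a confidence threshold: the system buzzes in only when its confidence in its top answer clears a cutoff, and the cutoff trades how many questions it attempts against how often it is right. The sketch below is a simplified illustration, not IBM’s actual scoring pipeline; the ten questions and their confidence scores are made up for the example, and the function name `buzz_stats` is hypothetical.

```python
# Simplified illustration of answering only when confident enough.
# Hypothetical toy data, not real Watson output:
# (confidence in the top-ranked answer, whether that answer was correct)
QUESTIONS = [
    (0.95, True), (0.91, True), (0.88, True), (0.86, False), (0.84, True),
    (0.82, True), (0.81, True), (0.60, False), (0.45, True), (0.30, False),
]


def buzz_stats(results, threshold):
    """Attempt only questions whose confidence meets `threshold`.

    Returns (coverage, precision): the share of all questions attempted,
    and the share of attempted questions answered correctly.
    """
    attempted = [correct for confidence, correct in results if confidence >= threshold]
    coverage = len(attempted) / len(results)
    precision = sum(attempted) / len(attempted) if attempted else 0.0
    return coverage, precision


coverage, precision = buzz_stats(QUESTIONS, threshold=0.8)
print(f"attempted {coverage:.0%} of questions, {precision:.0%} of those correct")
```

With these toy numbers, the system attempts 7 of the 10 questions and gets 6 of them right. Raising the cutoff to 0.9 would make it perfectly accurate on this set but far more timid: it would attempt only 2 questions.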

Nowadays Watson, inasmuch as it lives anywhere, lives on three server blades in the trendy East Village neighborhood of Manhattan. Its brain is mostly in the cloud. The company announced that it would form a business group for Watson in January 2014, ensconcing it in a sparkling new office and pledging to invest more than $1 billion in the technology as it shifts from lab experiment to revenue generator. It shows Watson to prospective clients out of a glowing, cylindrical immersion room on the building’s fourth floor. In the last two years, IBM has partnered with over 350 organizations and 100 universities to turn Watson into an all-seeing, all-knowing computing system.

At its core, what Watson does is extract meaning and sentiment from the way humans communicate, and distill that into analysis. Through sheer speed and lexical understanding, it can find patterns in data in minutes that a human couldn’t hope to uncover in decades. And Watson gets better as it ages, learning more with each piece of information it scans.

This year, Rometty launched another new business unit, staffed by 2,000 consultants and called Cognitive Business Solutions, partially to help businesses figure out how to use Watson. Right now, it’s popping up in all sorts of fields, from corporate M&A analysis to fantasy football picks and food pairings. Various iterations of Watson are being used to diagnose cancer, answer questions about Singapore’s tax code, and, yes, suggest where to get a good taco in Austin, Texas. Watson can analyze your personality, come up with new dinner ideas, and help kids have a conversation with their toys. Now Watson can see you, too.

Applying Watson’s immense analytical capabilities to diagnosing illness and disease shows early promise (although IBM is careful to present Watson as a partner for doctors, not a replacement). The Watson Group has partnered with the CVS drugstore chain and Memorial Sloan-Kettering hospital, best known for its treatment of cancer. It also bought Merge Healthcare, a medical imaging company, to develop its diagnostic prowess. Rob High, the Watson Group director, told Quartz that Watson can fill in the gaps that doctors can’t get to. “Trying to keep up with everything produced in the medical profession is virtually impossible,” High said. “You’re having to make decisions based on things you learned years ago.”

The group is opening up various APIs (basically, protocols that let other computers tap into Watson’s software) to companies that want to use its natural-language processing abilities, as well as its indexing and dot-connecting powers. It hopes these API projects will show off Watson’s power and help it sell into larger companies. Rajeev Ronanki, an analyst with Deloitte, said he believes that Watson could end up being the brain that powers IBM’s other business products, like database software and infrastructure management, something Rometty has hinted at recently. For example, Watson could run a city’s traffic system or manage municipal energy consumption, and “it could make decisions on where to better invest public funds,” he said.

Even though IBM is reluctant to say so, it has built a machine-learning computer that could one day form the backbone of a fully fledged artificially intelligent computer: one that can think and act at least as well as a human can.

Watson reborn

Up in Yorktown Heights, researchers are still exploring AI computing systems. But for them, Watson is old hat. There’s a new name the researchers are bandying around: Celia.

Celia stands for Cognitive Environments Laboratory Intelligent Assistant. While IBM’s sales team is working on selling Watson into companies’ workflows, this research team is working on integrating Celia into the workplace itself, like a digital employee. In the future, Celia—which is a bit like a mix of Watson’s analytical prowess, Siri’s conversational tone, and Minority Report’s user interface—may well become the gold standard for how corporate strategy is dreamt up and executed in boardrooms across the world.

The system essentially takes the Watson concept and adds a human element on top of it. For the most part, to interact with Watson, you need to type something into a computer. Celia is meant to mimic how we would interact with another human. An executive could ask Celia a question like, “What small-market-cap companies in the semiconductor industry would be a good fit for us to buy?” and Celia would return a list of companies that make sense, as well as explain her reasoning for choosing those companies.

“We are inspired by how humans can reason through a problem—minus the emotional bias,” High said. In the case of an M&A discussion, Celia might show that a certain acquisition makes the most sense, even if the CEO is not a fan of the other company’s CEO, or the board doesn’t like its brand ethos, or other human idiosyncrasies that wouldn’t have any real bearing on a business deal. Watson is close to this potential now, but Celia could bring it into the boardrooms, offices, and homes of the future.

It doesn’t take a giant leap of imagination to see how this could be applied to other areas. Doctors could talk through diagnoses with Celia, receiving answers instantly synthesized from thousands of pieces of medical data. Researchers could work through ideas for new drugs, or how to build a high-rise tower, or even plan an entirely new city, and Celia would instantly give them suggestions in plain English that they couldn’t have worked out in days. Customer-service lines could be replaced by machines that actually give helpful answers.

In short, Celia could be like the digital assistant in the movie Her: a useful, conversational part of our daily lives, though hopefully without the propensity to fall in love or the desire to run off and form the singularity.

Computers thinking like we do

But advanced as they are, both Watson and Celia are still traditional, logical computers.

In August 2014, IBM announced that it had built a “brain-inspired” computer chip, essentially a computer wired like an organic brain. There have already been great advances in software inspired by the human brain, such as complex neural networks: the AI systems that have been used to paint like humans, to see the world, and to teach robots to walk the way a child learns. But IBM’s work could create far more efficient computers to run these programs, ones that require significantly less power, making them much easier to scale. Progress has been impressive: Earlier this year, the team showed off a digital brain as complex as a rodent’s.

While current chips are excellent at analyzing information, these new types of chips are better suited to finding patterns in information—like the right side of the brain. Dharmendra Modha, the lead on the project, told Quartz that he sees the future of computing being composed of traditional and synaptic computers, working together in a sort of left brain-right brain symbiosis to create systems that were previously unimaginable.

Modha said his goal is to build a “brain in a shoebox,” with 10 billion neurons and 100 trillion synapses, consuming less than 1 kilowatt of power, no more than a small electric heater. He thinks this will be possible in less than a decade.

If IBM were ever to reach a point where it could create a computer wired like the human brain, which has roughly 100 billion neurons, wouldn’t it be creating an actual electronic version of a human brain? That’s hard to answer; no one really knows whether you can truly build a fully fledged AI brain using silicon.

Whether or not its researchers are explicitly working toward this goal, IBM could be on the path to building an artificial intelligence system the likes of which the world has never seen. The power of Watson, the responsiveness of Celia, and a left-brain/right-brain supercomputer working together could put us humans firmly in the passenger seat of intelligence, with IBM’s supersystem blazing past us in pure thinking power.

But for now, that sort of computing ability is still firmly in the realm of fiction. Modha says a computer like this is still likely decades away.

“The complexity and beauty of neurons in nature dwarfs the imagination,” Modha said. “We are but scratching at the surface of mother nature’s patent fountain.”

Celia can but dream.

The future will be hard to see

Dario Gil, the vice president for research and development at IBM, told Quartz that the company is trying to sustain Moore’s Law on two fronts. On the one hand, there are teams across IBM’s research facilities working to make semiconductors smaller, so that more processing power can be packed onto a chip. The company announced in July that it had built a prototype chip with components just 7 nanometers wide, 11,000 times narrower than a human hair. (Chip component sizes shrink every couple of years or so; current commercial chips are 14 nm, and Intel is hoping to bring 10 nm chips to mass production by 2017.)

But eventually, it won’t be possible to make the features on silicon chips any smaller. Mukesh Khare, IBM’s head of semiconductor research, told Quartz that his team will try to get chips down to 5 nm, but it will be difficult. “The thing we can’t scale is an atom,” he said.

Semiconductors are a $335 billion industry, built almost entirely on silicon chips. So what happens when silicon can’t be relied on to make the computer chips of the future?

In the physical sciences lab at Yorktown Heights, scientists are trying to turn strings of carbon only 1 nm wide into the next generation of chips. IBM has been working on these “nanotubes” for over 20 years. Their atomic structure makes them good electrical conductors, which means they could make considerably smaller, lower-power computer chips than what’s on the market today, effectively bolstering Moore’s Law for a few more decades.

Carbon nanotubes are made using various complicated methods. But each essentially involves the same process: Growing the tubes, by having carbon in various states—such as graphene, or a hydrocarbon gas—react with a catalyst to build them up.

The difficulty, explains George Tulevski, a researcher on the nanotubes team, is that, “In a silicon transistor, you have several billion devices, and all those devices operate exactly the same.” The current methods for growing nanotubes, however, result in tubes with tiny variations in diameter, and therefore in their electrical properties. What Tulevski’s team is trying to do is create thousands—and eventually billions—of carbon nanotubes that function exactly the same way.

In early October, IBM announced that it had solved another of the key obstacles to using carbon nanotubes as transistors: How to connect tubes together, allowing current to pass from one to another. Shu-Jen Han, another member of the nanotube team, said he believes that this “definitely shows the possibility for carbon nanotube chips” in the future. The team’s work, he said, had “filled in one of the last missing pieces.”

Beyond replacing silicon chips, the team’s work might also pave the way for nanorobots—impossibly small robots that can be injected into the body and programmed to cure cancer, rid us of disease altogether, or perhaps even self-replicate to build new structures on the fly. Carbon nanotubes, as well as being electrically conductive, are also super-strong. Moreover, they happen to be super-flexible, meaning devices built out of carbon nanotubes wouldn’t need the rigid bases—called substrates—that silicon chips need, Han said. Future computing devices could be structured to fit the bends, stretches and twists of the human body. Wearable computers could feel as unobtrusive as ordinary clothing.

This isn’t going to happen overnight, however. Nanotube chip and nanobot research is still just that—research. As with the brain-inspired computer, practical applications are likely still a few decades away. For now, Tulevski and his team will keep trying to get their tubes in a row.

A quantum leap

All this research may lead to computers that are smarter, friendlier, and more power-efficient than those that have gone before. But another slice of IBM’s research is about an entirely different conception of what a computer might be.

Traditional computers, like the one you’re probably reading this story on, are basically collections of billions of transistors. Transistors are electrical devices that can be turned on or off. That on or off state gets represented as a 1 or a 0 on a computer. Each letter, number, tweet, photo, Excel spreadsheet and webpage is made up, ultimately, of a series of 1s and 0s, called bits. They’re the foundation for how all digital electronic devices work.
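To make that concrete, here is a minimal Python sketch showing how a three-letter word breaks down into bits. It assumes the standard UTF-8 text encoding (for plain English letters this matches ASCII); `to_bits` is just an illustrative name.

```python
def to_bits(text: str) -> str:
    """Encode `text` as UTF-8 and show each byte as eight 1s and 0s."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


# 'I', 'B' and 'M' are stored as the numbers 73, 66 and 77.
print(to_bits("IBM"))  # 01001001 01000010 01001101
```

Every photo or spreadsheet works the same way, just with millions or billions of these 1s and 0s instead of 24.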

But it’s also possible to build a bit out of something as small as a single atom or, in IBM’s case, a tiny superconducting circuit. Governed by the rules of quantum physics, such a “qubit” (short for quantum bit) can be in a “superposition,” a kind of combination of 1 and 0 at the same time.
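A single qubit can be simulated on an ordinary computer, which helps show what “superposition” means. The Python sketch below is a textbook toy model, not a description of IBM’s hardware: the qubit is a pair of complex numbers (amplitudes) for 0 and 1, the squared size of each amplitude is the chance of reading out that value, and a standard operation called the Hadamard gate turns a definite 0 into an equal mix. The function names are illustrative.

```python
import math
import random

# A qubit's state is a pair of complex amplitudes (a, b) for 0 and 1,
# with |a|^2 + |b|^2 == 1.
ZERO = (1 + 0j, 0j)  # a qubit that is definitely 0


def hadamard(state):
    """Apply the Hadamard gate: a definite 0 becomes an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Chance of reading out 0 and 1 when the qubit is measured."""
    a, b = state
    return abs(a) ** 2, abs(b) ** 2


def measure(state, rng):
    """Measuring collapses the superposition into one ordinary bit."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


superposed = hadamard(ZERO)
print(probabilities(superposed))  # both values are (very nearly) 0.5

rng = random.Random(2015)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(superposed, rng)] += 1
print(counts)  # roughly 5,000 zeros and 5,000 ones

# Applying the gate a second time makes the amplitudes for 1 cancel out,
# so the qubit returns to a (very nearly) definite 0.
print(probabilities(hadamard(superposed)))
```

Each simulated measurement yields a plain 0 or 1; only the statistics over many runs reveal the superposition. The last line hints at what makes quantum computing more than random coin flips: amplitudes can cancel each other out, an effect called interference.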

A computer made of qubits can, for certain kinds of problems, use superposition to reach an answer in far fewer steps than a traditional machine working through the calculation in sequence. It could therefore crunch problems that would require unfeasible amounts of computing power today. A quantum computer could smash conventional encryption, the kind that protects your email or your online bank account from hackers. More optimistically, it could model the chemical reactions of complex molecules, helping design new drugs, for instance.

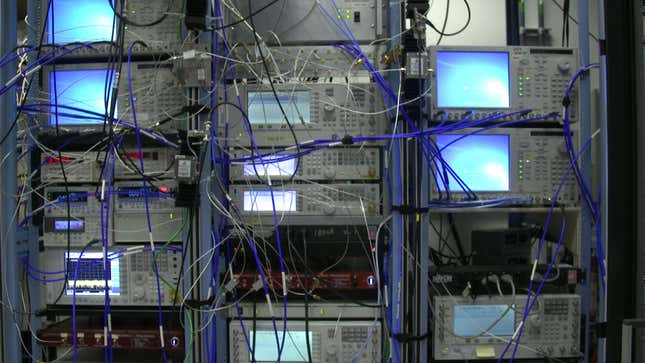

Building this kind of machine is hard, however. Anything that disturbs a qubit causes it to “decohere,” or lose its superposition, leading to errors in the calculation. So although qubits themselves are tiny, they require a room-sized mass of equipment to keep them cooled to close to absolute zero as well as process the information coming out of them. IBM has several such room-sized machines, and the biggest of them houses only eight qubits: a small prototype.

IBM is not alone in its quest. Microsoft, NASA, Google, and countless universities are working on quantum computers too. One company, Canada’s D-Wave, which uses a different approach from IBM’s, claims to have already built a commercially viable computer with 1,000 qubits.

Some scientists aren’t convinced that D-Wave’s machine really is a quantum computer. Even if it is, it’s not clear it’s working any faster than a conventional one. But it has inspired the US government to start its own quantum-computing research based on the same method. D-Wave’s CEO, Vern Brownell, told Quartz he thinks the path IBM has chosen to pursue for quantum computing is “very, very difficult,” and that, yet again, it will take decades before it’s practically useful.

That being said, IBM’s work has attracted interest from the same government body. IARPA, the US Intelligence Advanced Research Projects Activity—think DARPA for the CIA—today (Dec. 8) awarded IBM a multi-year grant to develop its quantum research in the hopes of building the first true quantum computer. It’s a significant vote of confidence in the direction IBM’s taking.

Patience is a virtue

To IBM, the fact that such technologies take so long to bring to fruition is a strength, not a weakness.

Tulevski, on the nanotubes team, said that he’s never felt any pressure to speed up his work in the hopes of monetizing it—something that other corporate research institutes, like Bell Labs, are having to deal with.

“I think that’s what’s very unique about our lab. We really are committed to basic science and basic research,” he added. “It’s not to monetize at all—it’s to make progress, to keep showing that what you’re doing in five, ten years, will have an impact on technology, on our business.”

Bernie Meyerson, IBM’s chief innovation officer, said the sort of research being done at Yorktown Heights is more than window dressing: “Actually it is essential,” he said. “The last thing you want to be doing is be standing in a tunnel, look up and see the light at the end of the tunnel, and discover that it’s actually an oncoming train.”

Meyerson asked me rhetorically what the cheese slicers and meat grinders that IBM started out making could possibly have in common with data processing or computer chips. They all require high-precision scales, he said, responding to his own question: “Because of course, the more precisely you measure things, the better things work.”

But in addition to a dedication to precision, you learn about your products’ place in the wider world. Making better meat grinders, clocks, microphones, scoreboards, typewriters, and punch cards requires you to learn about metallurgy and material durability, Meyerson said: “The materials you make these things out of determine their lifespan.”

Precision also translates into efficiency. The cards in IBM’s punch card system, made partially out of silica to withstand countless punches and insertions, would wear down the slots they were inserted into. IBM needed to make systems that could hold up after hundreds of thousands of clock-ins and clock-outs.

It’s not wildly different from ensuring you have systems in place to prevent your cathode-tube computing machines or your server farms from overheating. It comes from building up a history of expertise, of trial and error, on how materials interact together, whether they are slicing cheese or parsing electrons. And a lot of those areas of expertise are just as relevant when talking about efficiently running a business with Watson in 2015 as they were with punch card systems in the 1960s. It’s just about organizing the data you have as well as you can, and finding the signal through the noise.

“One of the things about IBM that’s sort of unique is that our history serves our future,” Meyerson said. “Yet we worry about the future where a discontinuity is needed to address it”—meaning, reaching a barrier, like the minimum possible size of transistors, which requires an entirely new discovery, like nanotubes. “And both of them together is why you’re successful, not one or the other.”

Will science save IBM?

So will the research division provide the answer to the company’s revenue decline and stagnating stock price? Will we ever have access to a future version of Watson, running on a quantum computer, powered by carbon nanotube chips, and built by IBM?

“I would say it’s possible—if it happens, it’ll be by accident,” Robert Cringely, a former InfoWorld and Forbes columnist, told Quartz. “But then, many research breaks are accidental.”

Cringely believes that IBM can’t get out of its own way, and that the current revenue downturn the company is experiencing foretells something worse. In 2014 he wrote a book titled The Decline and Fall of IBM, in which he relates what may be an apocryphal joke told by former CEO Lou Gerstner that “good ideas don’t come out of IBM research—they escape.”

Cringely doubts that IBM can monetize any of the moonshots it’s working on—even Watson. “IBM is perfectly happy to sell consulting services with Watson to help them figure out what to do with it, but even they don’t know what to do with it,” he said.

Ronanki, the Deloitte analyst, told Quartz that he believes that in the next five to seven years, cognitive computing like Watson—and whatever follows it—is going to be a $5-10 billion market. “IBM is well positioned to have a significant share of that,” he said. “But it’ll depend on how quickly they can get demonstrable results.”

And IBM could use those results: The near-term future for the company is not exactly rosy. IBM’s most recent earnings report missed Wall Street’s revenue target, and analysts forecast another year-on-year decline. RBC Capital analyst Amit Daryanani told CNBC in October that the company was on the right track by shifting its focus to what Rometty has called “strategic imperatives,” forward-looking endeavors like cloud computing, analytics and IT security. But he added that those businesses have lower profit margins than IBM’s core businesses, like offline software, mainframes, and business services. “I generally believe they’re doing all the right stuff,” Daryanani said, suggesting it might just be slow going in the near future as IBM shifts away from its legacy businesses.

In short, Big Blue’s corporate chiefs must make sure that near-term bets on things like the cloud and security can quickly produce more revenue, as their people figure out how to turn Watson into a significant money-maker in the next handful of years. Watson could then conceivably buy more time for all those big, blue-sky projects IBM’s scientists are plugging away at. It’s going to take a while—if it happens at all.

There’s a similar conundrum with IBM’s quantum computing efforts. Things like cracking codes, building impossibly secure computer networks, or making air traffic control effortlessly efficient are just the beginning of what they might be used for. MIT professor Scott Aaronson suggests that in the same way traditional computers have replaced wind tunnels for aircraft and car designers, quantum computers could replace the gigantic particle accelerators that attempt to simulate the conditions in the few seconds after the Big Bang. “It looks likely that a single device, a quantum computer, would in the future be able to simulate all of quantum chemistry and atomic physics efficiently,” Aaronson told PBS. The question is whether, by the time quantum computers are capable of such feats, IBM will still be in a position to profit from them.

Back in 1961, when the Watson facility was built, Saarinen requested that over 1,000 durable evergreen and maple trees be planted on the grounds. The building’s foyer is constructed almost entirely out of local Westchester fieldstone; 4,000 tons of it were used throughout the building. Like Paul Rand’s iconic IBM logo, developed around the same time, Saarinen’s building showed foresight: it was made to last.

Walking the halls of the research center now, you sense a calm that might not be as apparent down the road at Armonk, or at Astor Place. The building is dotted with Eames lounge chairs and old pre-mainframe IBM clocks. When Quartz visited the lab, there was a quiet energy throughout the building. Scientists scurried across the linoleum floors from lab to lab, janitors and maintenance men fixed up various pipes and pathways, and mainframes buzzed in the background. The old clocks kept ticking.

Today, Xerox’s PARC lab and Nokia’s Bell Labs are shells of their former selves, but at IBM, throughout all the shifts in business strategy, and all the technologies that were discovered and developed, the sun rose and set over the spaceship, the saplings grew into trees, and the fieldstone just sat there.

The company is at a defining moment. If it can keep plugging along, trying to sell clouds to giants, Watson might take off and help break the revenue slump. And the researchers in IBM’s labs will be able to continue their work, building to their next breakthrough.